Chapter 58 Estimating Structural Regression Models Using Structural Equation Modeling

In this chapter, we will learn how to estimate structural regression models, which are part of the structural equation modeling (SEM) family of analyses. Specifically, we will learn how to integrate the measurement model and structural model into a single SEM.

58.1 Conceptual Overview

Structural equation modeling (SEM) is a latent variable modeling approach that can be used to estimate confirmatory factor analysis models, latent growth models, latent change score models, and structural regression models. We can also use SEM to simultaneously estimate multiple relations between observed variables by implementing path analysis models.

In this chapter, we will focus on estimating structural regression models (SRM), which is often what comes to mind when people think of SEM. An SRM allows us to integrate our measurement model (via confirmatory factor analysis) and structural model (i.e., direct relations, indirect relations). In doing so, when estimating direct and indirect relations involving latent factors, we can account for measurement error (e.g., unreliability) in the observed variables (e.g., multiple items) that serve as indicators of those latent factors.

58.1.1 Path Diagrams

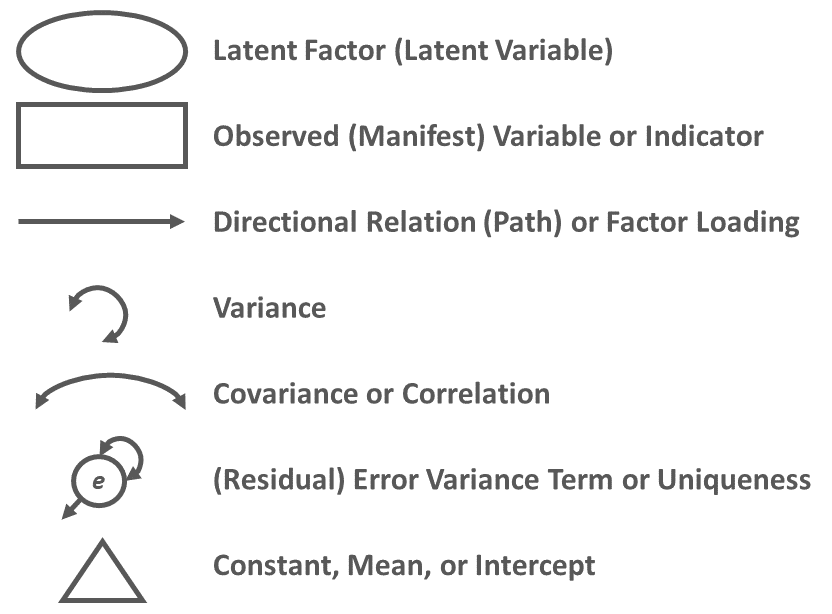

It is often helpful to visualize an SRM using a path diagram. A path diagram displays the model parameter specifications and can also include parameter estimates. Conventional path diagram symbols are shown in Figure 1.

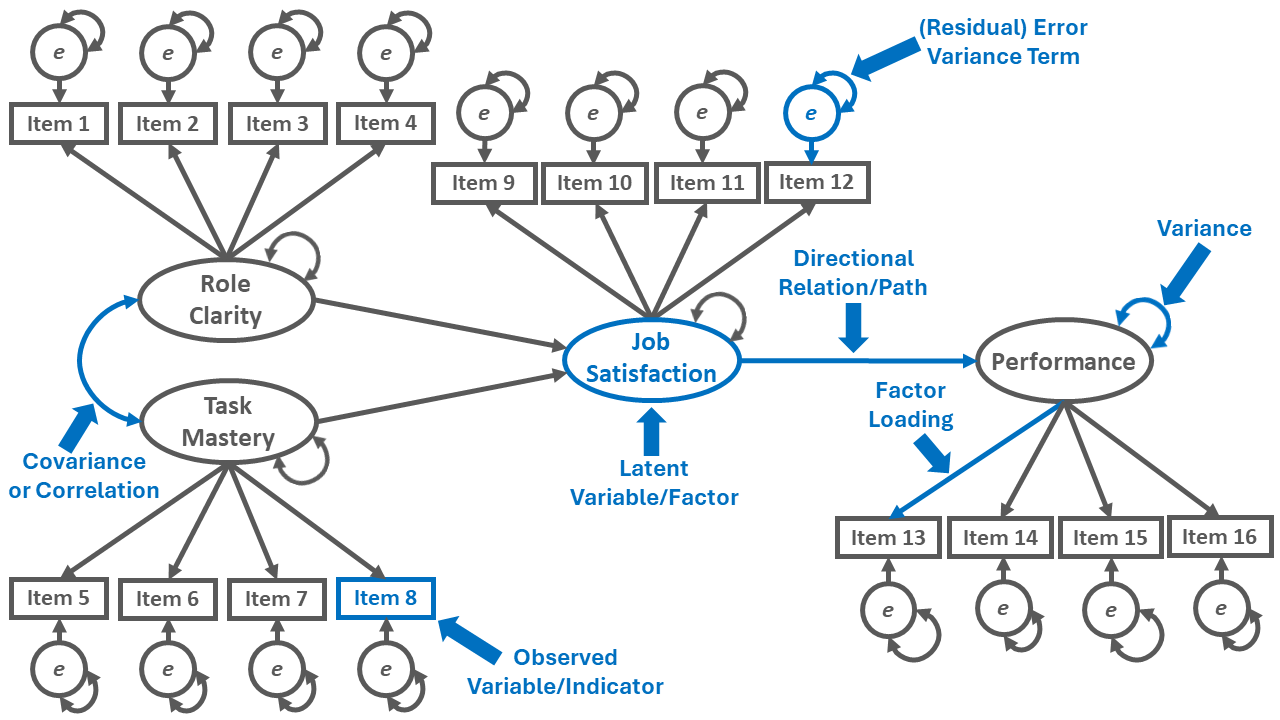

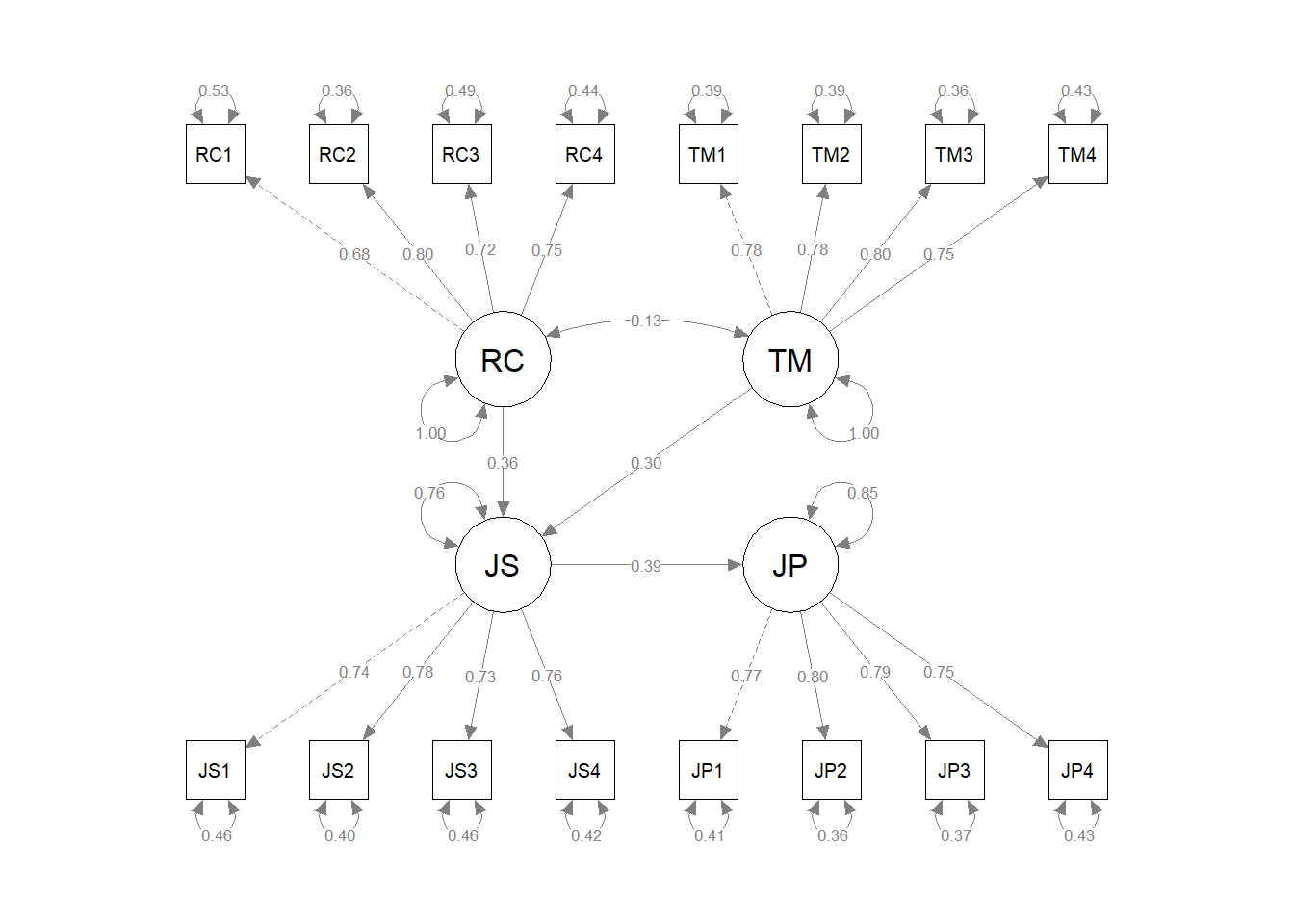

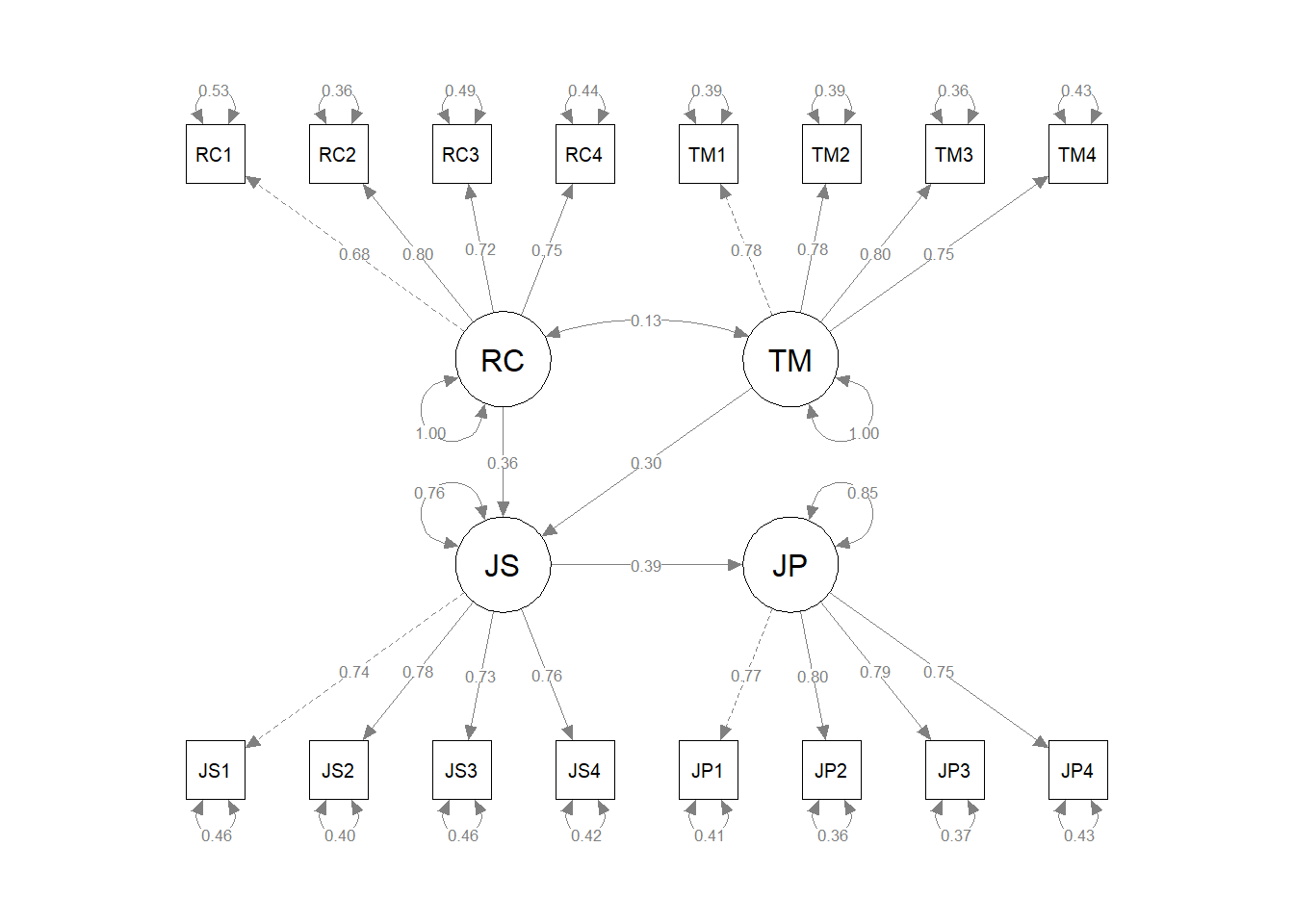

For an example of how the path diagram symbols can be used to construct a visual depiction of an SRM, please reference Figure 2. The path diagram depicts an SRM with four latent factors (i.e., role clarity, task mastery, job satisfaction, performance), their respective measurement structures, and their structural relations with each other.

By convention, the latent factors are represented by an oval or circle. Please note that the latent factor is not directly measured; rather, we infer information about each latent factor based on its indicators (i.e., observed variables), which in this example correspond to the items loading on the latent factor.

Latent factors have variance terms associated with them, which represent the latent factors’ variabilities; these variance terms, which are represented by double-headed arrows, reflect the extent to which the latent factor estimates vary between individuals.

A bidirectional association between latent factors is depicted with a double-headed arrow, which is referred to as a covariance – or if standardized, a correlation.

Each observed variable (indicator) is represented with a rectangle. A one-directional, single-headed arrow extending from a latent factor to an observed variable represents a factor loading.

Each observed variable has a (residual) error variance term, which represents the amount of variance left unexplained by the latent factor in relation to the observed variable.

A directional relation (path) from one latent factor to another is represented by a single-headed arrow and represents a regression. Directional relations are often of most substantive interest in an SRM and often correspond to formal hypotheses or questions that we are attempting to test or answer.

58.1.2 Model Identification

Model identification concerns the number of free (i.e., freely estimated) parameters specified in the model relative to the number of unique (non-redundant) sources of information available, and model identification has important implications for assessing model fit and estimating parameters.

Just-identified: In a just-identified model (i.e., saturated model), the number of freely estimated parameters (e.g., factor loadings, covariances, variances) is equal to the number of unique (non-redundant) sources of information, which means that the degrees of freedom (df) is equal to zero. In just-identified models, the model parameter standard errors can be estimated, but the model fit cannot be assessed in a meaningful way using traditional model fit indices.

Over-identified: In an over-identified model, the number of freely estimated parameters is less than the number of unique (non-redundant) sources of information, which means that the degrees of freedom (df) is greater than zero. In over-identified models, traditional model fit indices and parameter standard errors can be estimated.

Under-identified: In an under-identified model, the number of freely estimated parameters is greater than the number of unique (non-redundant) sources of information, which means that the degrees of freedom (df) is less than zero. In under-identified models, the model parameter standard errors and model fit cannot be estimated. Some might say under-identified models are overparameterized because they have more parameters to be estimated than unique sources of information.

Most (if not all) statistical software packages that allow structural equation modeling (and by extension, SRM) automatically compute the degrees of freedom for a model or, if the model is under-identified, provide an error message. As such, we don’t need to count the number of unique (non-redundant) sources of information and free parameters by hand. With that said, to understand model identification and its various forms at a deeper level, it is often helpful to practice calculating the degrees of freedom by hand when first learning.

The formula for calculating the number of unique (non-redundant) sources of information available for a particular model is as follows:

\(i = \frac{p(p+1)}{2}\)

where \(p\) is the number of observed variables to be modeled. This formula calculates the number of possible unique covariances and variances for the variables specified in the model – or in other words, it calculates the lower diagonal of a covariance matrix, including the variances.

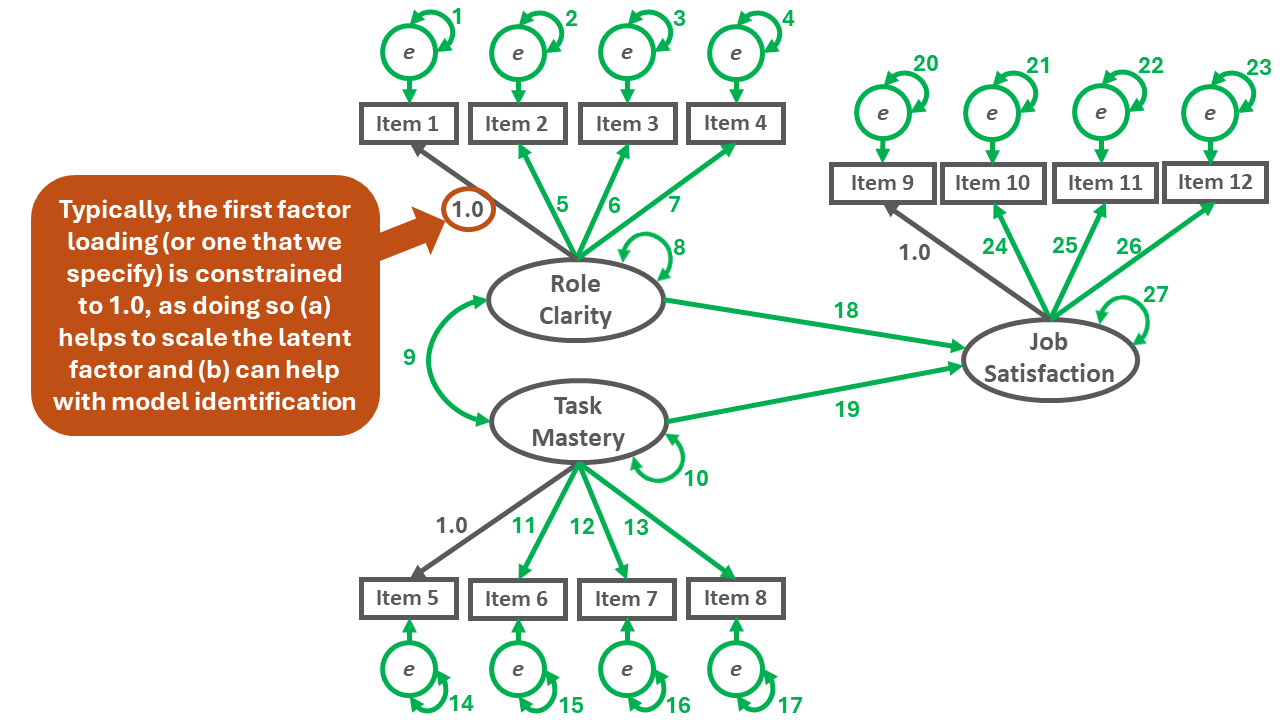

In the SRM shown in Figure 3 below, there are 12 observed variables: Items 1-12. Accordingly, in the following formula, \(p\) is equal to 12, and the number of unique (non-redundant) sources of information is 78.

\(i = \frac{12(12+1)}{2} = \frac{156}{2} = 78\)

To count the number of free parameters (\(k\)), simply add up the number of the specified unconstrained (freely estimated) factor loadings, variances, covariances, directional relations, and (residual) error variance terms in the SRM. Please note that for latent variable scaling and model identification purposes, for each latent factor, we typically constrain one of the factor loadings to 1.0, which means that it is not freely estimated and thus doesn’t count as one of the free parameters. The example SRM shown in Figure 3 has 27 free parameters.

\(k = 27\)

To calculate the degrees of freedom (df) for the model, we need to subtract the number of free parameters from the number of unique (non-redundant) sources of information, which in this example equates to 78 minus 27. Thus, the degrees of freedom for the model is 51, which means the model is over-identified.

\(df = i - k = 78 - 27 = 51\)
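The arithmetic above can be sketched in a few lines of R. The helper name sem_df is hypothetical (it is not part of lavaan or any other package); it simply applies the two formulas from this section and labels the resulting identification status.

```r
# Hypothetical helper (not from any package): compute the number of unique
# sources of information (i), the degrees of freedom (df), and the
# identification status for p observed variables and k free parameters.
sem_df <- function(p, k) {
  i <- p * (p + 1) / 2   # unique variances and covariances
  df <- i - k            # degrees of freedom
  status <- if (df > 0) {
    "over-identified"
  } else if (df == 0) {
    "just-identified"
  } else {
    "under-identified"
  }
  list(i = i, df = df, status = status)
}

# Example SRM from this section: 12 observed variables, 27 free parameters
example_srm <- sem_df(p = 12, k = 27)
example_srm$i       # 78
example_srm$df      # 51
example_srm$status  # "over-identified"
```

Changing k to 78 or to a value above 78 shows how the same model would become just-identified or under-identified, respectively.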

58.1.3 Model Fit

When a model is over-identified (df > 0), the extent to which the specified model fits the data can be assessed using a variety of model fit indices, such as the chi-square (\(\chi^{2}\)) test, comparative fit index (CFI), Tucker-Lewis index (TLI), root mean square error of approximation (RMSEA), and standardized root mean square residual (SRMR). For a commonly cited reference on cutoffs for fit indices, please refer to Hu and Bentler (1999), and for a concise description of common guidelines regarding interpreting model fit indices, including differences between stringent and relaxed interpretations of common fit indices, I recommend checking out Nye (2023). With that being said, those papers focus on confirmatory factor analyses as opposed to SRM, but confirmatory factor analyses are integrated into SRM, so they still have some relevance. Regardless of which cutoffs we apply when interpreting fit indices, we must remember that such cutoffs are merely guidelines, and it’s possible to estimate an acceptable model that meets some but not all of the cutoffs given the limitations of some fit indices. Further, in light of the limitations of conventional model fit index cutoffs, McNeish and Wolf (2023) developed model- and data-specific dynamic fit index cutoffs, which we will cover later in the chapter tutorial.

Chi-square test. The chi-square (\(\chi^{2}\)) test can be used to assess whether the model fits the data adequately, where a statistically significant \(\chi^{2}\) value (e.g., p \(<\) .05) indicates that the model does not fit the data well and a nonsignificant \(\chi^{2}\) value (e.g., p \(\ge\) .05) indicates that the model fits the data reasonably well (Bagozzi and Yi 1988). The null hypothesis for the \(\chi^{2}\) test is that the model fits the data perfectly, and thus failing to reject the null hypothesis provides some confidence that the model fits the data reasonably close to perfectly. Of note, the \(\chi^{2}\) test is sensitive to sample size and non-normal variable distributions.

Comparative fit index (CFI). As the name implies, the comparative fit index (CFI) is a type of comparative (or incremental) fit index, which means that CFI compares the focal model to a baseline model, which is commonly referred to as the null or independence model. CFI is generally less sensitive to sample size than the chi-square test. A CFI value greater than or equal to .95 generally indicates good model fit to the data, although some might relax that cutoff to .90.

Tucker-Lewis index (TLI). Like CFI, the Tucker-Lewis index (TLI) is another type of comparative (or incremental) fit index. TLI is generally less sensitive to sample size than the chi-square test and tends to work well with smaller sample sizes; however, as Hu and Bentler (1999) noted, TLI may not be the best choice for smaller sample sizes (e.g., N \(<\) 250). A TLI value greater than or equal to .95 generally indicates good model fit to the data, although some might relax that cutoff to .90.

Root mean square error of approximation (RMSEA). The root mean square error of approximation (RMSEA) is an absolute fit index that penalizes model complexity (e.g., models with a larger number of estimated parameters) and thus ends up effectively rewarding more parsimonious models. RMSEA values tend to be upwardly biased when the model has fewer degrees of freedom (i.e., when the model is closer to being just-identified); further, Hu and Bentler (1999) noted that RMSEA may not be the best choice for smaller sample sizes (e.g., N \(<\) 250). In general, an RMSEA value that is less than or equal to .06 indicates good model fit to the data, although some relax that cutoff to .08 or even .10.

Standardized root mean square residual (SRMR). Like the RMSEA, the standardized root mean square residual (SRMR) is an example of an absolute fit index. An SRMR value that is less than or equal to .06 generally indicates good fit to the data, although some relax that cutoff to .08.
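To make the chi-square-based indices more concrete, the sketch below computes CFI, TLI, and RMSEA by hand. The input values are taken from the four-factor CFA output shown in this chapter's tutorial (N = 550). The formulas are the commonly cited textbook versions, and the RMSEA line assumes N - 1 in the denominator, which some software replaces with N, so treat this as an illustration rather than a reproduction of any package's internals. SRMR is omitted because it is computed from residual correlations rather than from chi-square values.

```r
# Values from the four-factor CFA output in this chapter's tutorial
chisq_m <- 120.274   # user (focal) model chi-square
df_m    <- 98        # user model degrees of freedom
chisq_b <- 3851.181  # baseline (independence) model chi-square
df_b    <- 120       # baseline model degrees of freedom
n       <- 550       # sample size

# CFI: 1 minus the ratio of model misfit to baseline misfit
cfi <- 1 - max(chisq_m - df_m, 0) /
  max(chisq_b - df_b, chisq_m - df_m, 0)

# TLI: compares the chi-square/df ratios of the baseline and user models
tli <- ((chisq_b / df_b) - (chisq_m / df_m)) / ((chisq_b / df_b) - 1)

# RMSEA: assumes N - 1 in the denominator (some software uses N)
rmsea <- sqrt(max(chisq_m - df_m, 0) / (df_m * (n - 1)))

# Round to three decimals for comparison with reported values
round(c(CFI = cfi, TLI = tli, RMSEA = rmsea), 3)
```

Rounded to three decimals, these hand calculations agree with the CFI (.994), TLI (.993), and RMSEA (.020) values reported for the measurement model in this chapter.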

Summary of model fit indices. The conventional cutoffs for the aforementioned model fit indices – like any rule of thumb – should be applied with caution and with good judgment and intention. Further, these indices don’t always agree with one another, which means that we often look across multiple fit indices and come up with our best judgment of whether the model adequately fits the data. A poorly fitting model may be due to model misspecification, an inappropriate model estimator, a large amount of error around slope estimates, or other factors that need to be addressed. With that being said, we should also be careful to not toss out a model entirely if one or more of the model fit indices suggest less than acceptable levels of fit to the data. The table below contains the conventional stringent and more relaxed cutoffs for the model fit indices.

| Fit Index | Stringent Cutoffs for Acceptable Fit | Relaxed Cutoffs for Acceptable Fit |
|---|---|---|
| \(\chi^{2}\) | \(p \ge .05\) | \(p \ge .01\) |
| CFI | \(\ge .95\) | \(\ge .90\) |
| TLI | \(\ge .95\) | \(\ge .90\) |
| RMSEA | \(\le .06\) | \(\le .08\) |
| SRMR | \(\le .06\) | \(\le .08\) |
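As a rough illustration of how the cutoffs in the table might be applied, the sketch below defines a hypothetical helper, check_fit (not part of any package), that labels each index as meeting the stringent cutoff, only the relaxed cutoff, or neither. It is meant as a bookkeeping aid; as noted above, the cutoffs are guidelines and do not replace judgment.

```r
# Hypothetical helper (not from any package): label each fit index as
# meeting the "stringent" cutoff, only the "relaxed" cutoff, or "neither",
# using the cutoffs from the table above.
check_fit <- function(chisq_p, cfi, tli, rmsea, srmr) {
  classify <- function(value, stringent, relaxed, higher_is_better) {
    if (!higher_is_better) {
      # Flip signs so that ">=" comparisons work for "lower is better" indices
      value <- -value
      stringent <- -stringent
      relaxed <- -relaxed
    }
    if (value >= stringent) {
      "stringent"
    } else if (value >= relaxed) {
      "relaxed"
    } else {
      "neither"
    }
  }
  c(
    chisq = classify(chisq_p, .05, .01, TRUE),
    cfi   = classify(cfi,     .95, .90, TRUE),
    tli   = classify(tli,     .95, .90, TRUE),
    rmsea = classify(rmsea,   .06, .08, FALSE),
    srmr  = classify(srmr,    .06, .08, FALSE)
  )
}

# Fit values reported for the measurement model in this chapter
fit_labels <- check_fit(chisq_p = .063, cfi = .994, tli = .993,
                        rmsea = .020, srmr = .024)
fit_labels
```

With the measurement model values reported in this chapter, every index meets its stringent cutoff.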

58.1.4 Parameter Estimates

In SRM, there are various types of parameter estimates, which correspond to the path diagram symbols covered earlier (e.g., covariance, variance, mean, factor loading). When a model is just-identified or over-identified, we can estimate the standard errors for freely estimated parameters, which allows us to evaluate statistical significance. With most software applications, we can request standardized parameter estimates, which can facilitate the interpretation of some parameters.

Factor loadings. When we standardize factor loadings, we obtain estimates for each directional relation between the latent factor and an indicator, including for the factor loading that we likely constrained to 1.0 for latent factor scaling and model identification purposes (see above). When standardized, factor loadings can be interpreted like correlations, and generally we want to see standardized estimate values between .50 and .95 (Bagozzi and Yi 1988). If a standardized factor loading falls outside of that range, we typically investigate whether there is a theoretical or empirical reason for the out-of-range estimate, and we may consider removing the associated indicator if warranted.

Variances. The variance estimate of the latent factor is generally not a focus when evaluating parameter estimates in an SRM, as the variance of a latent factor depends on the factor loadings and scaling.

Covariances. In an SRM, covariances between latent factors help us understand the extent to which they are related (or unrelated). When standardized, a covariance can be interpreted as a correlation.

Directional paths. Directional paths can be between two latent factors, between two observed variables, or between a latent factor and an observed variable. Directional paths represent structural components of an SRM and can be thought of as regressions. Further, they are often tied to hypotheses or research questions, meaning that we tend to focus on their statistical significance and practical significance.

(Residual) error variance terms. The (residual) error variance terms, which are also known as disturbance terms or uniquenesses, indicate how much variance is left unexplained by the latent factor in relation to the indicators. When standardized, error variance terms represent the proportion (percentage) of variance that remains unexplained by the latent factor. Ideally, we want to see standardized error variance terms that are less than or equal to .50.
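One way to see how the .50 guideline for standardized error variances relates to the guidance for standardized factor loadings is the following relation, which holds when an indicator loads on a single latent factor. The symbols \(\lambda\) (standardized factor loading) and \(\theta\) (standardized error variance) are notation introduced here for illustration only.

\(\theta = 1 - \lambda^{2}\)

For example, a standardized factor loading of .70 implies a standardized error variance of \(1 - .70^{2} = .51\), which sits just above the .50 target. Under this relation, a standardized error variance at or below .50 corresponds to a standardized factor loading of roughly .71 or higher.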

58.1.5 Model Comparisons

When evaluating an SRM, we often wish to evaluate whether the focal model performs better (or worse) than an alternative model. Comparing models can help us arrive at a more parsimonious model that still fits the data well.

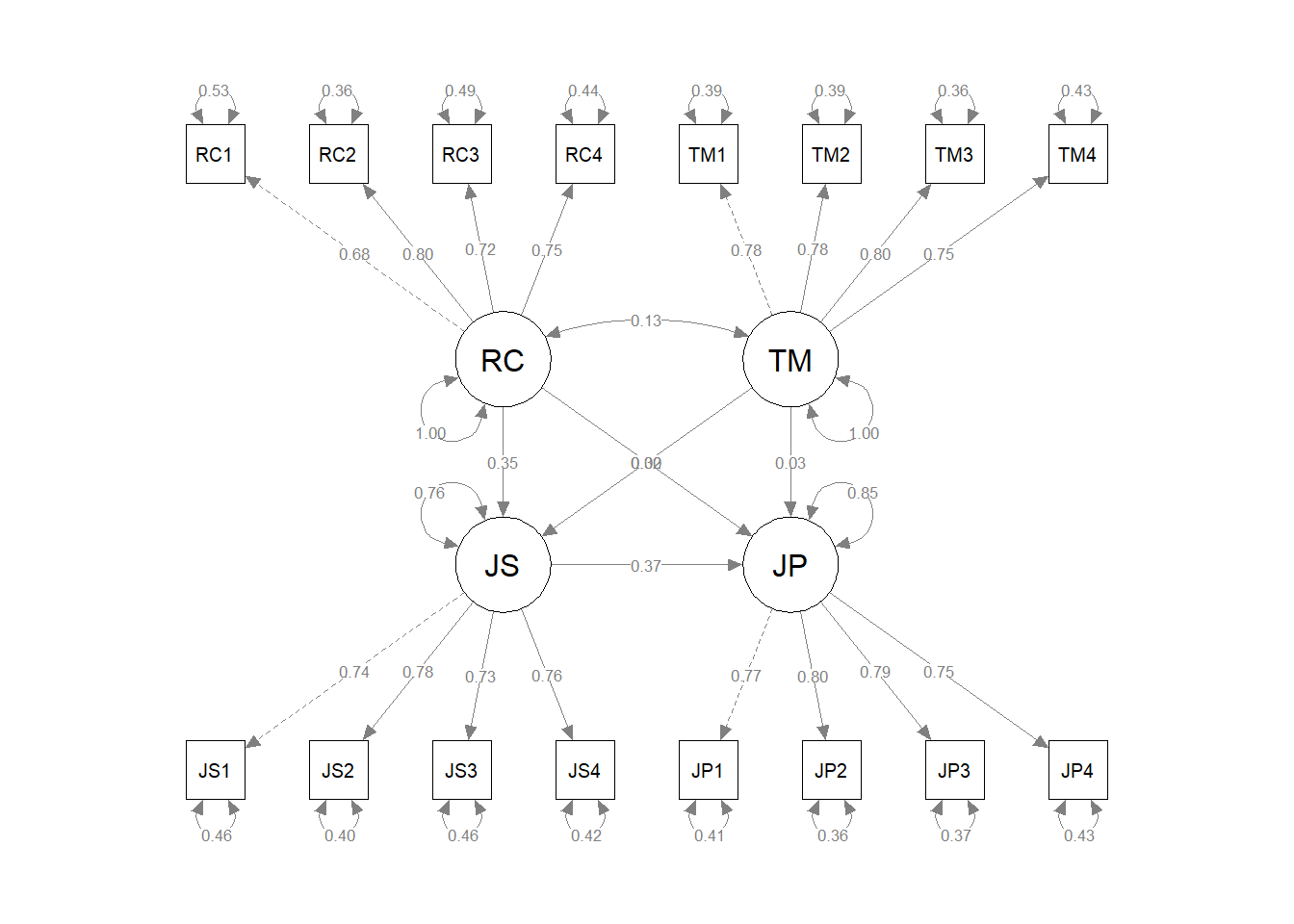

As an example, imagine our focal model is the one shown in Figure 2 above. Now imagine that we specify an alternative model that has two extra freely estimated directional paths from Role Clarity to Performance and from Task Mastery to Performance. We can compare those two models to determine whether our focal model fits the data about as well as or worse than the less parsimonious alternative model with two extra directional paths.

When two models are nested, we can perform nested model comparisons. As a reminder, a nested model has all the same parameters as a full model but has additional parameter constraints in place. If two models are nested, we can compare them using model fit indices like CFI, TLI, RMSEA, and SRMR. We can also use the chi-square difference (\(\Delta \chi^{2}\)) test (likelihood ratio test) to compare nested models, which provides a statistical test for nested-model comparisons.
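The chi-square difference test can be sketched by hand with base R. The values below are the chi-square statistics and degrees of freedom reported in this chapter's sample write-up for the four-factor measurement model and the SRM. The sketch assumes the SRM is nested within the measurement model, in the sense that it replaces the freely estimated factor covariances with a smaller set of structural relations. In practice, the anova function can run this comparison directly on fitted lavaan model objects.

```r
# Chi-square values and degrees of freedom from the sample write-up
chisq_full   <- 120.274  # less constrained model (four-factor CFA)
df_full      <- 98
chisq_nested <- 120.858  # more constrained model (SRM)
df_nested    <- 100

# Chi-square difference and its degrees of freedom
delta_chisq <- chisq_nested - chisq_full
delta_df    <- df_nested - df_full

# p-value from the chi-square distribution (upper tail)
p_value <- pchisq(delta_chisq, df = delta_df, lower.tail = FALSE)

round(c(delta_chisq = delta_chisq, delta_df = delta_df, p = p_value), 3)
```

Here the difference is small relative to its two degrees of freedom (p is approximately .75), which suggests that the more parsimonious model does not fit significantly worse than the less constrained model.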

When two models are not nested, we can use other model fit indices like Akaike Information Criterion (AIC) and Bayesian Information Criterion (BIC). With respect to these indices, the best fitting model will have lower AIC and BIC values.

58.1.6 Statistical Assumptions

The statistical assumptions that should be met prior to estimating and/or interpreting an SRM will depend on the type of estimation method. Common estimation methods for SRMs include (but are not limited to) maximum likelihood (ML), maximum likelihood with robust standard errors (MLM or MLR), weighted least squares (WLS), and diagonally weighted least squares (DWLS). WLS and DWLS estimation methods are used when there are observed variables with nominal or ordinal (categorical) measurement scales. In this chapter, we will focus on ML estimation, which is a common method when observed variables have interval or ratio (continuous) measurement scales. As Kline (2011) notes, ML estimation carries with it the following assumptions: “The statistical assumptions of ML estimation include independence of the scores, multivariate normality of the endogenous variables, and independence of the exogenous variables and error terms” (p. 159). When multivariate non-normality is a concern, the MLM or MLR estimator is a better choice than the ML estimator, where the MLR estimator allows for missing data and the MLM estimator does not.
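As a minimal sketch of how an estimator might be selected in lavaan, the example below fits the same model with the default ML estimator and with the MLR estimator via the estimator argument. To keep the example self-contained, it assumes the HolzingerSwineford1939 data set and the three-factor model that ship with lavaan, not this chapter's data; the object names (hs_mod, fit_ml, fit_mlr) are likewise chosen for illustration.

```r
library(lavaan)

# Assumption: example model for the HolzingerSwineford1939 data set that
# ships with lavaan (not this chapter's data)
hs_mod <- "
visual  =~ x1 + x2 + x3
textual =~ x4 + x5 + x6
speed   =~ x7 + x8 + x9
"

# Maximum likelihood (ML) estimation
fit_ml <- cfa(hs_mod, data = HolzingerSwineford1939, estimator = "ML")

# Robust maximum likelihood (MLR) estimation
fit_mlr <- cfa(hs_mod, data = HolzingerSwineford1939, estimator = "MLR")

# Standard and scaled (robust) chi-square statistics from the MLR fit
fitMeasures(fit_mlr, c("chisq", "chisq.scaled", "df", "pvalue.scaled"))
```

The parameter estimates are the same under ML and MLR; what changes are the standard errors and the scaled test statistic, which is why MLR is often preferred when multivariate normality is in doubt.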

58.1.6.1 Sample Write-Up

After their respective start dates, we assessed 550 new employees’ role clarity (1 month post-hire), task mastery (1 month post-hire), job satisfaction (6 months post-hire), and supervisor-rated job performance (12 months post-hire); missing data were not a concern. Each construct’s associated multi-item measure included four items. We hypothesized that higher role clarity and task mastery are associated with higher job satisfaction, and that higher job satisfaction is associated with higher job performance. Before estimating a structural regression model to test those hypotheses, we evaluated the measurement structure of the four measures using a multi-factor confirmatory factor analysis model. We evaluated the model’s fit to the data using the chi-square (\(\chi^{2}\)) test, CFI, TLI, RMSEA, and SRMR model fit indices. The \(\chi^{2}\) test indicated that the model fit the data as well as a perfectly fitting model (\(\chi^{2}\) = 120.274, df = 98, p = .063). Further, the CFI and TLI estimates were .994 and .993, respectively, which exceeded the more stringent threshold of .95, thereby indicating that the model showed acceptable fit to the data. Similarly, the RMSEA estimate was .020, which was below the more stringent threshold of .06, thereby indicating that the model showed acceptable fit to the data. The SRMR estimate was .024, which was below the stringent threshold of .06, thereby indicating acceptable model fit to the data. Collectively, the model fit information indicated that the four-factor measurement model fit the data acceptably. Further, standardized factor loadings ranged from .682 to .802, which all fell well within the recommended .50-.95 acceptability range. The standardized error variances for items ranged from .358 to .534, and only one estimate, associated with the first role clarity item, was above the target threshold of .50, thereby indicating that it was unlikely that an unmodeled construct had an outsized influence on the items. The average variance extracted (AVE) estimates for role clarity, task mastery, job satisfaction, and job performance were .546, .608, .567, and .606, respectively, which all exceeded the .50 cutoff; thus, all four latent factors showed acceptable AVE levels. The composite reliability (CR) estimates for role clarity, task mastery, job satisfaction, and job performance were .827, .861, .840, and .860, respectively, and all indicated acceptable levels of internal consistency reliability. In sum, the four-factor measurement model showed acceptable fit to the data, acceptable parameter estimates, acceptable AVE estimates, and acceptable CR estimates.

Using the four-factor measurement model, we proceeded with estimating a structural regression model to test our hypotheses. The \(\chi^{2}\) test indicated that the model fit the data as well as a perfectly fitting model (\(\chi^{2}\) = 120.858, df = 100, p = .076). Further, the CFI and TLI estimates were .994 and .993, respectively, which exceeded the more stringent threshold of .95, thereby indicating that the model showed acceptable fit to the data. Similarly, the RMSEA estimate was .019, which was below the more stringent threshold of .06, thereby indicating that the model showed acceptable fit to the data. The SRMR estimate was .025, which was below the stringent threshold of .06, thereby indicating acceptable model fit to the data. Collectively, the model fit information indicated that the SRM fit the data acceptably. As hypothesized, the standardized directional paths from (a) role clarity to job satisfaction (.356, p < .001), (b) task mastery to job satisfaction (.300, p < .001), and (c) job satisfaction to job performance (.389, p < .001) were each statistically significant and positive.

58.2 Tutorial

This chapter’s tutorial demonstrates how to estimate structural regression models using structural equation modeling in R.

58.2.2 Functions & Packages Introduced

| Function | Package |
|---|---|
| cfa | lavaan |
| summary | base R |
| AVE | semTools |
| compRelSEM | semTools |
| semPaths | semPlot |
| sem | lavaan |
| anova | base R |
| options | base R |
| parameterEstimates | lavaan |
| inspect | lavaan |
| cbind | base R |
| rbind | base R |
| t | base R |
| ampute | mice |

58.2.3 Initial Steps

If you haven’t already, save the file called “sem.csv” into a folder that you will subsequently set as your working directory. Your working directory will likely be different than the one shown below (i.e., "H:/RWorkshop"). As a reminder, you can access all of the data files referenced in this book by downloading them as a compressed (zipped) folder from my GitHub site: https://github.com/davidcaughlin/R-Tutorial-Data-Files; once you’ve followed the link to GitHub, just click “Code” (or “Download”) followed by “Download ZIP”, which will download all of the data files referenced in this book. For the sake of parsimony, I recommend downloading all of the data files into the same folder on your computer, which will allow you to set that same folder as your working directory for each of the chapters in this book.

Next, using the setwd function, set your working directory to the folder in which you saved the data file for this chapter. Alternatively, you can manually set your working directory folder in your drop-down menus by going to Session > Set Working Directory > Choose Directory…. Be sure to create a new R script file (.R) or update an existing R script file so that you can save your script and annotations. If you need refreshers on how to set your working directory and how to create and save an R script, please refer to Setting a Working Directory and Creating & Saving an R Script.
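A minimal sketch of setting the working directory is shown here. The folder path "H:/RWorkshop" is only the example path mentioned above, so replace it with your own folder; the object name my_folder and the dir.exists check are additions for illustration so that the code does not stop with an error on a computer where that folder is absent.

```r
# Example folder path; replace with the folder where you saved sem.csv
my_folder <- "H:/RWorkshop"

# Set the working directory only if the folder exists on this computer
if (dir.exists(my_folder)) {
  setwd(my_folder)
}

# Confirm the current working directory
getwd()
```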

Next, read in the .csv data file called “sem.csv” using your choice of read function. In this example, I use the read_csv function from the readr package (Wickham, Hester, and Bryan 2024). If you choose to use the read_csv function, be sure that you have installed and accessed the readr package using the install.packages and library functions. Note: You don’t need to install a package every time you wish to access it; in general, I would recommend updating a package installation once every 1-3 months. For refreshers on installing packages and reading data into R, please refer to Packages and Reading Data into R.

# Install readr package if you haven't already

# [Note: You don't need to install a package every

# time you wish to access it]

install.packages("readr")

# Access readr package

library(readr)

# Read data and name data frame (tibble) object

df <- read_csv("sem.csv")

# Print the names of the variables in the data frame (tibble) object

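names(df)

## [1] "EmployeeID" "RC1" "RC2" "RC3" "RC4" "TM1" "TM2" "TM3" "TM4"

## [10] "JS1" "JS2" "JS3" "JS4" "JP1" "JP2" "JP3" "JP4" "Gender"

# Print number of rows in data frame (tibble) object

nrow(df)

## [1] 550

# Print top 6 rows of data frame (tibble) object

head(df)

## # A tibble: 6 × 18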

## EmployeeID RC1 RC2 RC3 RC4 TM1 TM2 TM3 TM4 JS1 JS2 JS3 JS4 JP1 JP2 JP3 JP4 Gender

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 EE1001 4 4 3 4 3 4 5 4 2 3 4 4 6 7 7 7 1

## 2 EE1002 5 4 3 6 5 6 5 5 5 3 5 3 7 6 6 7 1

## 3 EE1003 4 4 5 2 3 4 4 5 5 5 5 6 7 9 7 7 0

## 4 EE1004 5 4 5 5 2 3 3 4 3 4 4 3 8 8 8 10 0

## 5 EE1005 2 3 2 2 2 3 3 1 3 3 2 4 6 6 7 6 1

## 6 EE1006 4 5 5 3 3 2 4 2 5 4 5 3 6 7 6 6 0

The data frame includes data from a new-employee onboarding survey administered 1 month after employees’ respective start dates, an engagement survey administered 6 months after employees’ respective start dates, and a supervisor-rated job performance measure administered 12 months after employees’ respective start dates. The sample includes 550 employees, and the data have already been joined for you. In total, there are four multi-item measures: role clarity (1 month), task mastery (1 month), job satisfaction (6 months), and job performance (12 months). As part of the initial survey, employees self-reported their gender identities (Gender). Employees responded to items from the role clarity, task mastery, and job satisfaction measures using a 7-point agreement Likert-type response format, ranging from Strongly Disagree (1) to Strongly Agree (7). Employees’ supervisors responded to items from the supervisor-rated job performance measure using a 10-point response format, ranging from Does Not Meet Expectations (1) to Exceeds Expectations (10). For all items, higher scores indicate higher levels of the construct being measured.

The first multi-item measure is employee-rated and is designed to measure role clarity, which is conceptually defined as “the extent to which an individual understands what is expected of them in their job or role.” The measure includes the following four items.

- RC1 (“I understand what my job-related responsibilities are.”)
- RC2 (“I understand what the organization expects of me in my job.”)
- RC3 (“My job responsibilities have been clearly communicated to me.”)
- RC4 (“My role in the organization is clear to me.”)

The second multi-item measure is employee-rated and is designed to measure task mastery, which is conceptually defined as “the extent to which an individual feels self-efficacious in their role and feels confident in performing their job responsibilities.” The measure includes the following four items.

- TM1 (“I am confident I can perform my job responsibilities effectively.”)
- TM2 (“I am able to address unforeseen job-related challenges.”)
- TM3 (“When I apply effort at work, I perform well.”)
- TM4 (“I am proficient in the skills needed to perform my job.”)

The third multi-item measure is employee-rated and is designed to measure job satisfaction, which is conceptually defined as “the extent to which an individual favorably evaluates their job and components of their job.” The measure includes the following four items.

- JS1 (“In general, I am satisfied with my job.”)
- JS2 (“I enjoy completing my work tasks.”)
- JS3 (“I like all aspects of my job.”)
- JS4 (“It is a pleasure to fulfill my job responsibilities.”)

The fourth multi-item measure is supervisor-rated and is designed to measure employees’ job performance, which is conceptually defined as “the extent to which an individual effectively fulfills their tasks, duties, and responsibilities.” The measure includes the following four items.

- JP1 (“This employee completes administrative tasks successfully.”)
- JP2 (“This employee provides high-quality service to customers.”)
- JP3 (“This employee collaborates effectively with team members.”)
- JP4 (“This employee applies rigorous problem solving on the job.”)

58.2.4 Evaluate the Measurement Model Using Confirmatory Factor Analysis

Prior to estimating a structural regression model (SRM), it is customary to evaluate the measurement model using confirmatory factor analysis (CFA). More specifically, we will be estimating a multi-factor CFA because we have four multi-item measures of interest. Doing so allows us to evaluate the latent factor structure and whether our empirical evidence supports the theoretically distinguishable constructs we attempted to measure; in other words, multi-factor CFA models are useful for evaluating whether theoretically distinguishable constructs are in fact empirically distinguishable based on the acquired data. Because an earlier chapter provides an in-depth introduction to CFA, for the most part, we will breeze through evaluating the measurement model in this chapter. If you need a refresher on CFA, please check out that earlier chapter.

As a reminder, our data frame (df) includes responses to four multi-item measures: role clarity (1 month post-hire), task mastery (1 month post-hire), job satisfaction (6 months post-hire), and supervisor-rated job performance (12 months post-hire). In this section we will specify a four-factor CFA model with four latent factors corresponding to role clarity, task mastery, job satisfaction, and job performance, with each measure’s items loading on its respective latent factor. In doing so, we can determine whether this four-factor model fits the data acceptably.

First, because CFA is a specific application of structural equation modeling (SEM), we will use functions from an R package developed for SEM called lavaan (latent variable analysis) to estimate our CFA model. If you haven’t already, begin by installing and accessing the lavaan package.
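A minimal sketch of that installation step follows, mirroring the pattern used for the readr package earlier in this tutorial. The requireNamespace check is an addition for illustration so that the package is only installed when it is not already available.

```r
# Install lavaan package if you haven't already
# [Note: You don't need to install a package every
# time you wish to access it]
if (!requireNamespace("lavaan", quietly = TRUE)) {
  install.packages("lavaan")
}

# Access lavaan package
library(lavaan)
```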

Second, we will specify a four-factor CFA model and assign it to an object that we can subsequently reference. To do so, we will do the following.

- Specify a name for the model object (e.g., cfa_mod), followed by the <- assignment operator.
- To the right of the <- assignment operator and within quotation marks (" "):
  - Specify a name for the role clarity latent factor (e.g., RC), followed by the =~ operator, which is used to indicate how a latent factor is measured. Anything that comes to the right of the =~ operator is an indicator (e.g., item) of the latent factor. Please note that the latent factor is not something that we directly observe, so it will not have a corresponding variable in our data frame object. After the =~ operator, specify each indicator (i.e., item) associated with the latent factor, and to separate the indicators, insert the + operator. In this example, the four indicators of the role clarity latent factor (RC) are: RC1 + RC2 + RC3 + RC4. These are our observed variables, which conceptually are influenced by the underlying latent factor.
  - Repeat the previous step for the task mastery (e.g., TM), job satisfaction (e.g., JS), and job performance (e.g., JP) latent factors.

# Specify four-factor CFA model & assign to object

cfa_mod <- "

RC =~ RC1 + RC2 + RC3 + RC4

TM =~ TM1 + TM2 + TM3 + TM4

JS =~ JS1 + JS2 + JS3 + JS4

JP =~ JP1 + JP2 + JP3 + JP4

"

Third, now that we have specified the model object (cfa_mod), we are ready to estimate the model using the cfa function from the lavaan package. To do so, we will do the following.

- Specify a name for the fitted model object (e.g., cfa_fit), followed by the <- assignment operator.
- To the right of the <- assignment operator, type the name of the cfa function, and within the function parentheses include the following arguments.
  - As the first argument, insert the name of the model object that we specified above (cfa_mod).
  - As the second argument, insert the name of the data frame object to which the indicator variables in our model belong. That is, after data=, insert the name of the data frame object (df).
  - Note: The cfa function includes model estimation defaults, which explains why we had relatively few model specifications. For example, the function defaults to constraining the first indicator’s unstandardized factor loading to 1.0 for model fitting purposes, and constrains covariances between indicator error terms (i.e., uniquenesses) to zero (or in other words, specifies the error terms as uncorrelated).

# Estimate four-factor CFA model & assign to fitted model object

cfa_fit <- cfa(cfa_mod, # name of specified model object

data=df) # name of data frame object

Fourth, we will use the summary function from base R to print the model results. To do so, we will apply the following arguments in the summary function parentheses.

- As the first argument, specify the name of the fitted model object that we created above (cfa_fit).

- As the second argument, set fit.measures=TRUE to obtain the model fit indices (e.g., CFI, TLI, RMSEA, SRMR).

- As the third argument, set standardized=TRUE to request the standardized parameter estimates for the model.

# Print summary of model results

summary(cfa_fit, # name of fitted model object

fit.measures=TRUE, # request model fit indices

standardized=TRUE) # request standardized estimates

## lavaan 0.6.15 ended normally after 36 iterations

##

## Estimator ML

## Optimization method NLMINB

## Number of model parameters 38

##

## Number of observations 550

##

## Model Test User Model:

##

## Test statistic 120.274

## Degrees of freedom 98

## P-value (Chi-square) 0.063

##

## Model Test Baseline Model:

##

## Test statistic 3851.181

## Degrees of freedom 120

## P-value 0.000

##

## User Model versus Baseline Model:

##

## Comparative Fit Index (CFI) 0.994

## Tucker-Lewis Index (TLI) 0.993

##

## Loglikelihood and Information Criteria:

##

## Loglikelihood user model (H0) -12611.728

## Loglikelihood unrestricted model (H1) -12551.591

##

## Akaike (AIC) 25299.456

## Bayesian (BIC) 25463.233

## Sample-size adjusted Bayesian (SABIC) 25342.605

##

## Root Mean Square Error of Approximation:

##

## RMSEA 0.020

## 90 Percent confidence interval - lower 0.000

## 90 Percent confidence interval - upper 0.032

## P-value H_0: RMSEA <= 0.050 1.000

## P-value H_0: RMSEA >= 0.080 0.000

##

## Standardized Root Mean Square Residual:

##

## SRMR 0.024

##

## Parameter Estimates:

##

## Standard errors Standard

## Information Expected

## Information saturated (h1) model Structured

##

## Latent Variables:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## RC =~

## RC1 1.000 0.746 0.682

## RC2 1.213 0.080 15.227 0.000 0.905 0.801

## RC3 1.038 0.073 14.127 0.000 0.774 0.716

## RC4 1.110 0.076 14.587 0.000 0.828 0.747

## TM =~

## TM1 1.000 0.923 0.783

## TM2 1.051 0.058 18.231 0.000 0.970 0.783

## TM3 0.998 0.054 18.646 0.000 0.921 0.802

## TM4 0.985 0.056 17.521 0.000 0.909 0.753

## JS =~

## JS1 1.000 0.908 0.737

## JS2 1.048 0.063 16.585 0.000 0.951 0.777

## JS3 0.994 0.063 15.793 0.000 0.902 0.734

## JS4 1.033 0.063 16.368 0.000 0.937 0.764

## JP =~

## JP1 1.000 1.168 0.766

## JP2 1.103 0.061 18.202 0.000 1.289 0.801

## JP3 0.980 0.054 18.031 0.000 1.145 0.793

## JP4 0.994 0.058 17.123 0.000 1.161 0.752

##

## Covariances:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## RC ~~

## TM 0.087 0.035 2.478 0.013 0.127 0.127

## JS 0.266 0.040 6.665 0.000 0.392 0.392

## JP 0.148 0.045 3.273 0.001 0.170 0.170

## TM ~~

## JS 0.287 0.046 6.225 0.000 0.343 0.343

## JP 0.174 0.055 3.199 0.001 0.162 0.162

## JS ~~

## JP 0.409 0.060 6.820 0.000 0.386 0.386

##

## Variances:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## .RC1 0.639 0.047 13.652 0.000 0.639 0.534

## .RC2 0.458 0.044 10.494 0.000 0.458 0.359

## .RC3 0.571 0.044 13.023 0.000 0.571 0.488

## .RC4 0.545 0.044 12.272 0.000 0.545 0.443

## .TM1 0.538 0.044 12.283 0.000 0.538 0.387

## .TM2 0.594 0.048 12.289 0.000 0.594 0.387

## .TM3 0.472 0.040 11.698 0.000 0.472 0.358

## .TM4 0.631 0.048 13.049 0.000 0.631 0.433

## .JS1 0.692 0.053 12.988 0.000 0.692 0.457

## .JS2 0.596 0.050 11.972 0.000 0.596 0.397

## .JS3 0.696 0.053 13.053 0.000 0.696 0.461

## .JS4 0.625 0.051 12.321 0.000 0.625 0.416

## .JP1 0.961 0.075 12.727 0.000 0.961 0.413

## .JP2 0.925 0.079 11.682 0.000 0.925 0.358

## .JP3 0.773 0.065 11.957 0.000 0.773 0.371

## .JP4 1.035 0.079 13.056 0.000 1.035 0.434

## RC 0.557 0.066 8.373 0.000 1.000 1.000

## TM 0.852 0.083 10.305 0.000 1.000 1.000

## JS 0.824 0.088 9.381 0.000 1.000 1.000

## JP 1.364 0.137 9.992 0.000 1.000 1.000

Evaluating model fit. Now that we have the summary of our model results, we will begin by evaluating key pieces of the model fit information provided in the output.

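- Estimator. The function defaulted to using the maximum likelihood (ML) model estimator. When indicators are declared as ordered categorical variables, the function switches to another estimator; when there are deviations from multivariate normality, we can request another estimator ourselves using the estimator= argument in the cfa function.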

- Number of parameters. Thirty-eight parameters were estimated, which as we will see later correspond to factor loadings, covariances, and (residual error) variances.

- Number of observations. Our effective sample size is 550. Had there been missing data on the observed variables, this portion of the output would have indicated how many of the observations were retained for the analysis given the missing data. How missing data are handled during estimation will depend on the type of missing data approach we apply, which is covered in more detail in the section called Estimating Models with Missing Data. By default, the cfa function applies listwise deletion in the presence of missing data.

- Chi-square test. The chi-square (\(\chi^{2}\)) test assesses whether the model fits the data adequately, where a statistically significant \(\chi^{2}\) value (e.g., p \(<\) .05) indicates that the model does not fit the data well and a nonsignificant \(\chi^{2}\) value (e.g., p \(\ge\) .05) indicates that the model fits the data reasonably well (Bagozzi and Yi 1988). The null hypothesis for the \(\chi^{2}\) test is that the model fits the data perfectly, and thus failing to reject the null hypothesis provides some confidence that the model fits the data reasonably close to perfectly. Of note, the \(\chi^{2}\) test is sensitive to sample size and non-normal variable distributions. For this model, we find the \(\chi^{2}\) test in the output section labeled Model Test User Model. Because the p-value is equal to or greater than .05, we fail to reject the null hypothesis that the model fits the data perfectly and thus conclude that the model fits the data acceptably (\(\chi^{2}\) = 120.274, df = 98, p = .063). Finally, note that because the model’s degrees of freedom (i.e., 98) is greater than zero, we can conclude that the model is over-identified.

- Comparative fit index (CFI). As the name implies, the comparative fit index (CFI) is a type of comparative (or incremental) fit index, which means that CFI compares our estimated model to a baseline model, which is commonly referred to as the null or independence model. CFI is generally less sensitive to sample size than the chi-square (\(\chi^{2}\)) test. A CFI value greater than or equal to .95 generally indicates good model fit to the data, although some might relax that cutoff to .90. For this model, CFI is equal to .994, which indicates that the model fits the data acceptably.

- Tucker-Lewis index (TLI). Like CFI, the Tucker-Lewis index (TLI) is another type of comparative (or incremental) fit index. TLI is generally less sensitive to sample size than the chi-square test and tends to work well with smaller sample sizes; however, as Hu and Bentler (1999) noted, TLI may not be the best choice for smaller sample sizes (e.g., N \(<\) 250). A TLI value greater than or equal to .95 generally indicates good model fit to the data, although like CFI, some might relax that cutoff to .90. For this model, TLI is equal to .993, which indicates that the model fits the data acceptably.

- Loglikelihood and Information Criteria. The section labeled Loglikelihood and Information Criteria contains model fit indices that are not directly interpretable on their own (e.g., loglikelihood, AIC, BIC). Rather, they become more relevant when we wish to compare the fit of two or more non-nested models. Given that, we will ignore this section in this tutorial.

- Root mean square error of approximation (RMSEA). The root mean square error of approximation (RMSEA) is an absolute fit index that penalizes model complexity (e.g., models with a larger number of estimated parameters) and thus effectively rewards models that are more parsimonious. RMSEA values tend to be upwardly biased when the model degrees of freedom are fewer (i.e., when the model is closer to being just-identified); further, Hu and Bentler (1999) noted that RMSEA may not be the best choice for smaller sample sizes (e.g., N \(<\) 250). In general, an RMSEA value that is less than or equal to .06 indicates good model fit to the data, although some relax that cutoff to .08 or even .10. For this model, RMSEA is .020, which indicates that the model fits the data acceptably.

- Standardized root mean square residual. Like the RMSEA, the standardized root mean square residual (SRMR) is an example of an absolute fit index. An SRMR value that is less than or equal to .06 generally indicates good fit to the data, although some relax that cutoff to .08. For this model, SRMR is equal to .024, which indicates that the model fits the data acceptably.
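As a rough cross-check on the indices described above, we can plug the chi-square values reported in the output into the textbook formulas for CFI, TLI, and RMSEA. The sketch below uses only base R; the object names are hypothetical, and the RMSEA formula assumes N (rather than N - 1) in the denominator, which some software handles differently.

```r
# Recompute fit indices from the chi-square values in the CFA output above
chisq_m <- 120.274   # user (four-factor CFA) model test statistic
df_m    <- 98        # user model degrees of freedom
chisq_b <- 3851.181  # baseline (independence) model test statistic
df_b    <- 120       # baseline model degrees of freedom
n       <- 550       # number of observations

# p-value for the chi-square test of exact fit
p_value <- pchisq(chisq_m, df = df_m, lower.tail = FALSE)

# CFI: 1 minus the ratio of user-model to baseline-model misfit
cfi <- 1 - max(chisq_m - df_m, 0) /
  max(chisq_b - df_b, chisq_m - df_m, 0)

# TLI: compares the chi-square/df ratios of the baseline and user models
tli <- ((chisq_b / df_b) - (chisq_m / df_m)) / ((chisq_b / df_b) - 1)

# RMSEA: misfit per degree of freedom, scaled by sample size
# (assumption: N in the denominator; some programs use N - 1)
rmsea <- sqrt(max(chisq_m - df_m, 0) / (df_m * n))

round(c(p_value = p_value, CFI = cfi, TLI = tli, RMSEA = rmsea), 3)
```

The rounded values should agree with the CFI, TLI, and RMSEA estimates printed by the summary function, which can help demystify where those numbers come from.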

In sum, the chi-square (\(\chi^{2}\)) test, CFI, TLI, RMSEA, and SRMR model fit indices all indicate that our model fit the data acceptably based on conventional rules and thresholds. This level of agreement, however, is not always going to occur. For instance, it is relatively common for the \(\chi^{2}\) test to indicate a lack of acceptable fit while one or more of the relative or absolute fit indices indicates that fit is acceptable given the limitations of the \(\chi^{2}\) test. Further, there may be instances where only two or three out of five of these model fit indices indicate acceptable model fit. In such instances, we should not necessarily toss out the model entirely, but we should consider whether there are model misspecifications. Of course, if all five model indices are well beyond the conventional thresholds (in a bad way), then our model likely has major issues, and we should not proceed with interpreting the parameter estimates. Fortunately, for our model, all five model fit indices signal that the model fit the data acceptably, and thus we should feel confident proceeding forward with interpreting and evaluating the parameter estimates.

Evaluating parameter estimates. As noted above, our model showed acceptable fit to the data, so we can feel comfortable interpreting the parameter estimates. By default, the cfa function provides unstandardized parameter estimates, but if you recall, we also requested standardized parameter estimates. In the output, the unstandardized parameter estimates fall under the column titled Estimate, whereas the standardized factor loadings we’re interested in fall under the column titled Std.all.

- Factor loadings. The output section labeled Latent Variables contains our factor loadings. For this model, the loadings represent the effects of the latent factors for role clarity, task mastery, job satisfaction, and job performance on their respective items. By default, the cfa function constrains the factor loading associated with the first indicator of each latent factor to 1 for model estimation purposes. Note, however, that there are still substantive standardized factor loadings for those first indicators, but they lack standard error (SE), z-value, and p-value estimates. We can still evaluate those standardized factor loadings, though. First, regarding the role clarity (RC) latent factor, all standardized factor loadings (.682-.801) fell within Bagozzi and Yi’s (1988) recommended range of .50-.95. Second, regarding the task mastery (TM) latent factor, all standardized factor loadings (.753-.802) fell within the recommended range of .50-.95. Third, regarding the job satisfaction (JS) latent factor, all standardized factor loadings (.734-.777) fell within the recommended range of .50-.95. Fourth, regarding the job performance (JP) latent factor, all standardized factor loadings (.752-.801) fell within the recommended range of .50-.95.

- Covariances. The output section labeled Covariances contains the pairwise covariance estimates for the four latent factors. As was the case with the factor loadings, we can view the standardized and unstandardized parameter estimates, where the standardized covariances can be interpreted as correlations. All six correlations are statistically significant (p < .05). In terms of practical significance, the correlations between RC and JS (.392), between TM and JS (.343), and between JS and JP (.386) are medium in magnitude. The correlations between RC and TM (.127), between RC and JP (.170), and between TM and JP (.162) are small in magnitude.

- Variances. The output section labeled Variances contains the (residual error) variance estimates for each observed indicator (i.e., item) of the latent factors and for the latent factors themselves. As was the case with the factor loadings, we can view the standardized and unstandardized parameter estimates.

  - Residual error variances for indicators. The estimates associated with the 16 indicator variables represent the residual error variances. Sometimes these are referred to as residual variances, disturbance terms, or uniquenesses. The standardized error variances ranged from .358 to .534, which can be interpreted as proportions of the variance not explained by the latent factor. For example, the latent factor RC failed to explain 53.4% of the variance in the indicator RC1, which is just outside the limit of what we conventionally consider to be acceptable; this suggests that 46.6% (100% - 53.4%) of the variance in indicator RC1 was explained by the latent factor RC. With the exception of the RC1 item’s error variance (.534), the indicator error variances were less than the recommended .50 threshold, which means that unmodeled constructs did not likely have a notable impact on the vast majority of the indicators. The standardized error variance for the RC1 item was just above the .50 recommended cutoff, and if we check the item’s content (“I understand what my job-related responsibilities are.”) and the construct’s conceptual definition (“the extent to which an individual understands what is expected of them in their job or role”), we see that the item fits within the conceptual definition boundaries; thus, we should retain the RC1 item.

  - Variance of the latent factors. The variance estimates for the latent factors can provide an indication of the latent factors’ level of variability; however, their values depend on the scaling of the factor loadings, and generally they are not a point of interest when evaluating CFA models. By default, the standardized variance for each latent factor will be equal to 1.000, and thus if we wished to evaluate a latent factor variance, we would interpret the unstandardized variance in this instance.
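To make the link between the unstandardized and standardized columns more concrete, the following base R sketch reconstructs the Std.lv, Std.all, and standardized residual variance values for the RC1 indicator from the unstandardized estimates in the output. The object names are hypothetical, and the calculation assumes the usual model-implied variance for an indicator that loads on a single factor with uncorrelated errors.

```r
# Unstandardized estimates for RC1 taken from the CFA output above
loading_rc1   <- 1.000   # factor loading (fixed to 1 for scaling)
var_rc        <- 0.557   # variance of the RC latent factor
resid_var_rc1 <- 0.639   # residual error variance of RC1

# Model-implied total variance of RC1
total_var_rc1 <- loading_rc1^2 * var_rc + resid_var_rc1

# Std.lv: loading rescaled so that the latent factor has unit variance
std_lv <- loading_rc1 * sqrt(var_rc)

# Std.all: loading rescaled so that factor and indicator have unit variance
std_all <- std_lv / sqrt(total_var_rc1)

# Standardized residual variance and the complementary R-squared
std_resid <- resid_var_rc1 / total_var_rc1
r_squared <- 1 - std_resid

round(c(Std.lv = std_lv, Std.all = std_all,
        std_resid = std_resid, r_squared = r_squared), 3)
```

Note that the squared Std.all loading equals the proportion of variance explained, which is why a standardized loading of about .70 corresponds to a standardized error variance of about .50.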

Within the semTools package, there are two additional diagnostic tools that we can apply to our model. Specifically, the AVE and compRelSEM functions allow us to estimate the average variance extracted (AVE) (Fornell and Larcker 1981) and the composite (construct) reliability (CR) (Bentler 1968). If you haven’t already, please install and access the semTools package.

To estimate AVE, we simply specify the name of the AVE function, and within the function parentheses, we insert the name of our fitted CFA model object (cfa_fit).

## RC TM JS JP

## 0.546 0.608 0.567 0.606

Average variance extracted (AVE). The AVE estimates were .546 (RC), .608 (TM), .567 (JS), and .606 (JP), which are above the conventional threshold (\(\ge\) .50). We can conclude that the AVE estimates are in the acceptable range.

To estimate CR, we apply the compRelSEM function to the same fitted model object.

## RC TM JS JP

## 0.827 0.861 0.840 0.860

Composite reliability (CR). The CR estimates were .827 (RC), .861 (TM), .840 (JS), and .860 (JP), which exceeded the conventional threshold for acceptable reliability (\(\ge\) .70) as well as the more relaxed “questionable” threshold (\(\ge\) .60). We can conclude that the four latent factors and associated indicators showed acceptably high reliability and specifically acceptably high internal consistency reliability.
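For readers who want to see what sits behind these two statistics, the sketch below recomputes AVE and CR for the RC latent factor by hand from the unstandardized estimates in the CFA output. It is only an illustration under stated assumptions: the object names are hypothetical, the formulas are the commonly used AVE and composite reliability expressions for a single factor with uncorrelated errors, and the guarded function calls at the end are a guess at the calls described in the text (they run only if cfa_fit and the semTools package are available).

```r
# Unstandardized estimates for the RC factor from the CFA output above
loadings  <- c(RC1 = 1.000, RC2 = 1.213, RC3 = 1.038, RC4 = 1.110)
resid_var <- c(RC1 = 0.639, RC2 = 0.458, RC3 = 0.571, RC4 = 0.545)
var_rc    <- 0.557   # variance of the RC latent factor

# AVE: variance captured by the factor relative to total indicator variance
explained <- sum(loadings^2) * var_rc
ave_rc    <- explained / (explained + sum(resid_var))

# CR: reliability of the unit-weighted composite of the four indicators
true_var <- sum(loadings)^2 * var_rc
cr_rc    <- true_var / (true_var + sum(resid_var))

round(c(AVE = ave_rc, CR = cr_rc), 3)

# Presumed form of the function calls described in the text; guarded so
# that this block also runs without the fitted model or semTools
if (exists("cfa_fit") && requireNamespace("semTools", quietly = TRUE)) {
  print(semTools::AVE(cfa_fit))
  print(semTools::compRelSEM(cfa_fit))
}
```

The hand-computed values for RC should round to the same estimates shown in the output above, and swapping in the estimates for TM, JS, or JP follows the same pattern.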

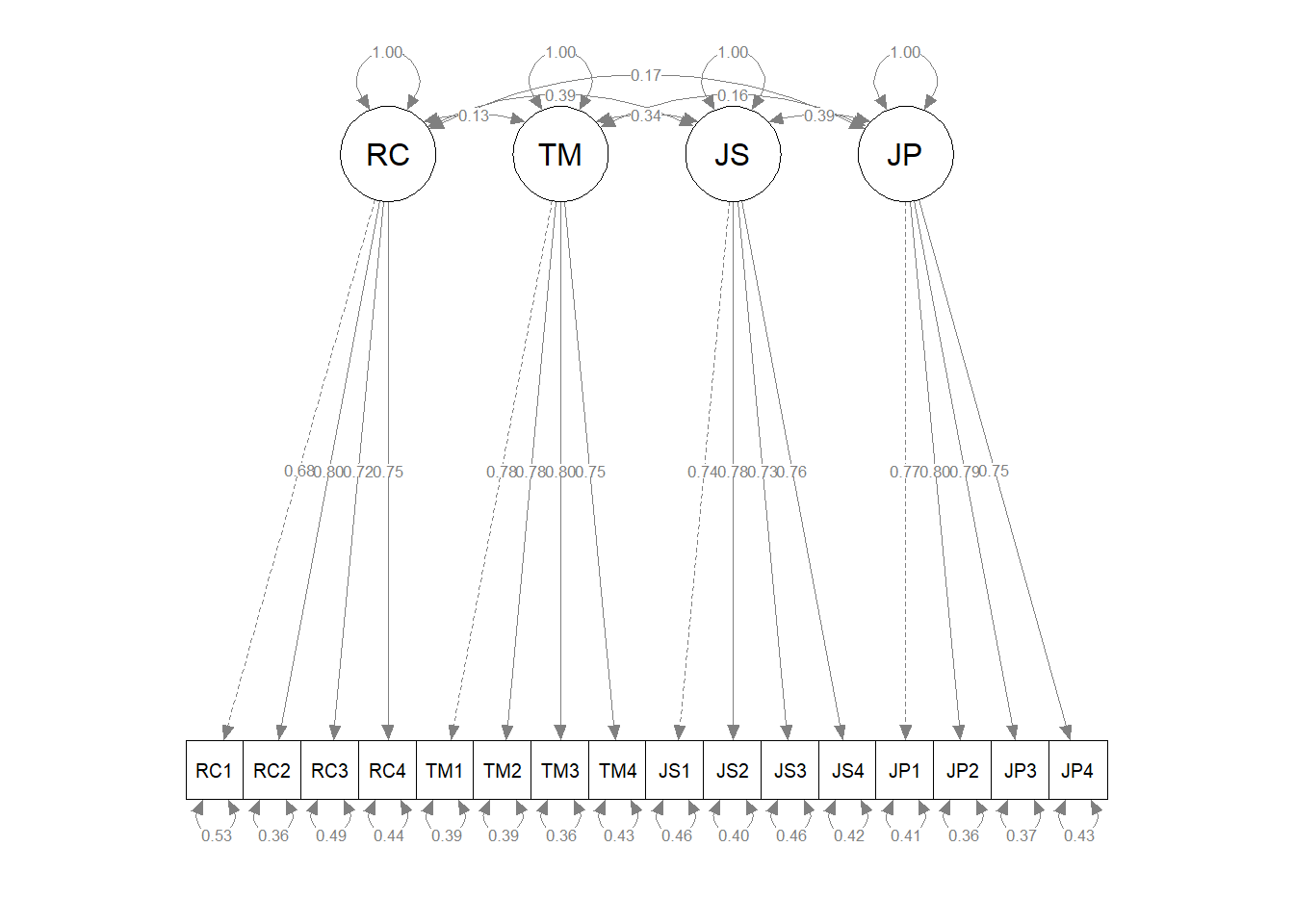

Visualize the path diagram. To visualize our four-factor CFA measurement model as a path diagram, we can use the semPaths function from the semPlot package. If you haven’t already, please install and access the semPlot package.

While there are many arguments that can be used to refine the path diagram visualization, we will focus on just four to illustrate how the semPaths function works.

- As the first argument, insert the name of the fitted CFA model object (cfa_fit).

- As the second argument, specify what="std" to display just the standardized parameter estimates.

- As the third argument, specify weighted=FALSE to request that the visualization not weight the edges (e.g., lines) and other plot features.

- As the fourth argument, specify nCharNodes=0 in order to use the full names of latent and observed indicator variables instead of abbreviating them.

# Visualize the measurement model

semPaths(cfa_fit, # name of fitted model object

what="std", # display standardized parameter estimates

weighted=FALSE, # do not weight plot features

nCharNodes=0) # do not abbreviate names

The resulting CFA path diagram can be useful for interpreting the model specifications and the parameter estimates.

In sum, the model fit indices suggested acceptable fit of the model to the data, and for the most part, the parameter estimates fell within acceptable ranges. The AVE and CR estimates also were acceptable. Given that our measurement model is acceptable, we are ready to specify and estimate a structural regression model by introducing directional paths (relations) into our model. Please note, however, that we would typically take additional steps to compare different nested factor structures to evaluate whether a more parsimonious but equal-fitting measurement model exists. You can learn how to compare nested CFA models in a previous chapter.

58.2.5 Estimate a Structural Regression Model

In the previous section, we evaluated the measurement model of our four constructs (i.e., role clarity, task mastery, job satisfaction, job performance) by estimating a four-factor confirmatory factor analysis (CFA) model. In this section, we will add directional paths (relations) to the measurement model, which will result in a structural regression model (SRM). More specifically, we will specify an SRM that resembles Figure 3, which was presented earlier in the chapter; we will regress the job satisfaction (JS) latent factor onto the role clarity (RC) and task mastery (TM) latent factors, and we will regress the job performance (JP) latent factor onto the job satisfaction (JS) latent factor. Let’s assume that these model specifications are informed by extant theory.

To do so, we will use the sem function from the lavaan package. So, if you haven’t already, be sure that you have installed and accessed the lavaan package.

First, we will specify the SRM and assign it to an object that we can subsequently reference. To do so, we will do the following.

- Specify a name for the model object (e.g., srm_mod), followed by the <- assignment operator.

- To the right of the <- assignment operator and within quotation marks (" "):

  - Include the measurement model that we specified in the previous section.

  - Add directional paths (relations):

    - To specify the direct paths from RC to JS and from TM to JS, we will use the regression (~) operator. Specifically, we will specify JS followed by the ~ operator, the RC latent factor, the + operator, and the TM latent factor, which looks like this: JS ~ RC + TM. In other words, we are regressing JS onto RC and TM.

    - To specify the direct path from JS to JP, we will use the regression (~) operator again. Specifically, we will specify JP followed by the ~ operator and then the JS latent factor, which looks like this: JP ~ JS. In other words, we are regressing JP onto JS.

# Specify four-factor SRM & assign to object

srm_mod <- "

# Measurement model

RC =~ RC1 + RC2 + RC3 + RC4

TM =~ TM1 + TM2 + TM3 + TM4

JS =~ JS1 + JS2 + JS3 + JS4

JP =~ JP1 + JP2 + JP3 + JP4

# Directional paths/relations

JS ~ RC + TM

JP ~ JS

"

Second, now that we have specified the model object (srm_mod), we are ready to estimate the model using the sem function from the lavaan package. To do so, we will do the following.

- Specify a name for the fitted model object (e.g., srm_fit), followed by the <- assignment operator.

- To the right of the <- assignment operator, type the name of the sem function, and within the function parentheses include the following arguments.

  - As the first argument, insert the name of the model object that we specified above (srm_mod).

  - As the second argument, insert the name of the data frame object to which the indicator (observed) variables in our model belong. That is, after data=, insert the name of the data frame object (df).

# Estimate four-factor SRM model & assign to fitted model object

srm_fit <- sem(srm_mod, # name of specified model object

data=df) # name of data frame object

Third, we will use the summary function from base R to print the model results. To do so, we will apply the following arguments in the summary function parentheses.

- As the first argument, specify the name of the fitted model object that we created above (srm_fit).

- As the second argument, set fit.measures=TRUE to obtain the model fit indices (e.g., CFI, TLI, RMSEA, SRMR).

- As the third argument, set standardized=TRUE to request the standardized parameter estimates for the model.

- As the fourth argument, set rsquare=TRUE to request the \(R^{2}\) values for endogenous variables, i.e., outcome variables associated with each of the directional paths we specified; in this SRM, our substantive endogenous variables are JS and JP.

# Print summary of model results

summary(srm_fit, # name of fitted model object

fit.measures=TRUE, # request model fit indices

standardized=TRUE, # request standardized estimates

rsquare=TRUE) # request R-squared estimates

## lavaan 0.6.15 ended normally after 31 iterations

##

## Estimator ML

## Optimization method NLMINB

## Number of model parameters 36

##

## Number of observations 550

##

## Model Test User Model:

##

## Test statistic 120.858

## Degrees of freedom 100

## P-value (Chi-square) 0.076

##

## Model Test Baseline Model:

##

## Test statistic 3851.181

## Degrees of freedom 120

## P-value 0.000

##

## User Model versus Baseline Model:

##

## Comparative Fit Index (CFI) 0.994

## Tucker-Lewis Index (TLI) 0.993

##

## Loglikelihood and Information Criteria:

##

## Loglikelihood user model (H0) -12612.020

## Loglikelihood unrestricted model (H1) -12551.591

##

## Akaike (AIC) 25296.040

## Bayesian (BIC) 25451.197

## Sample-size adjusted Bayesian (SABIC) 25336.918

##

## Root Mean Square Error of Approximation:

##

## RMSEA 0.019

## 90 Percent confidence interval - lower 0.000

## 90 Percent confidence interval - upper 0.031

## P-value H_0: RMSEA <= 0.050 1.000

## P-value H_0: RMSEA >= 0.080 0.000

##

## Standardized Root Mean Square Residual:

##

## SRMR 0.025

##

## Parameter Estimates:

##

## Standard errors Standard

## Information Expected

## Information saturated (h1) model Structured

##

## Latent Variables:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## RC =~

## RC1 1.000 0.746 0.682

## RC2 1.213 0.080 15.227 0.000 0.905 0.801

## RC3 1.037 0.073 14.124 0.000 0.774 0.715

## RC4 1.110 0.076 14.586 0.000 0.828 0.747

## TM =~

## TM1 1.000 0.923 0.783

## TM2 1.051 0.058 18.232 0.000 0.970 0.783

## TM3 0.998 0.054 18.643 0.000 0.921 0.801

## TM4 0.985 0.056 17.520 0.000 0.909 0.753

## JS =~

## JS1 1.000 0.907 0.737

## JS2 1.048 0.063 16.582 0.000 0.951 0.776

## JS3 0.994 0.063 15.790 0.000 0.902 0.734

## JS4 1.033 0.063 16.362 0.000 0.937 0.764

## JP =~

## JP1 1.000 1.168 0.766

## JP2 1.102 0.061 18.192 0.000 1.288 0.801

## JP3 0.981 0.054 18.044 0.000 1.146 0.794

## JP4 0.994 0.058 17.122 0.000 1.161 0.752

##

## Regressions:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## JS ~

## RC 0.433 0.063 6.871 0.000 0.356 0.356

## TM 0.295 0.048 6.177 0.000 0.300 0.300

## JP ~

## JS 0.501 0.067 7.529 0.000 0.389 0.389

##

## Covariances:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## RC ~~

## TM 0.087 0.035 2.478 0.013 0.127 0.127

##

## Variances:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## .RC1 0.639 0.047 13.654 0.000 0.639 0.535

## .RC2 0.458 0.044 10.487 0.000 0.458 0.359

## .RC3 0.571 0.044 13.026 0.000 0.571 0.488

## .RC4 0.545 0.044 12.272 0.000 0.545 0.443

## .TM1 0.538 0.044 12.283 0.000 0.538 0.387

## .TM2 0.594 0.048 12.285 0.000 0.594 0.387

## .TM3 0.472 0.040 11.699 0.000 0.472 0.358

## .TM4 0.631 0.048 13.048 0.000 0.631 0.433

## .JS1 0.693 0.053 13.005 0.000 0.693 0.457

## .JS2 0.596 0.050 11.989 0.000 0.596 0.397

## .JS3 0.696 0.053 13.064 0.000 0.696 0.461

## .JS4 0.626 0.051 12.340 0.000 0.626 0.416

## .JP1 0.960 0.075 12.723 0.000 0.960 0.413

## .JP2 0.927 0.079 11.699 0.000 0.927 0.359

## .JP3 0.771 0.065 11.939 0.000 0.771 0.370

## .JP4 1.036 0.079 13.057 0.000 1.036 0.434

## RC 0.556 0.066 8.372 0.000 1.000 1.000

## TM 0.852 0.083 10.305 0.000 1.000 1.000

## .JS 0.623 0.069 8.979 0.000 0.756 0.756

## .JP 1.158 0.119 9.736 0.000 0.849 0.849

##

## R-Square:

## Estimate

## RC1 0.465

## RC2 0.641

## RC3 0.512

## RC4 0.557

## TM1 0.613

## TM2 0.613

## TM3 0.642

## TM4 0.567

## JS1 0.543

## JS2 0.603

## JS3 0.539

## JS4 0.584

## JP1 0.587

## JP2 0.641

## JP3 0.630

## JP4 0.566

## JS 0.244

## JP 0.151

Evaluating model fit. Now that we have the summary of our model results, we will begin by evaluating key pieces of the model fit information provided in the output.

- Estimator. The function defaulted to using the maximum likelihood (ML) model estimator. When indicators are declared as ordered categorical variables, the function may switch to another estimator; when there are deviations from multivariate normality, we can manually switch to another estimator using the estimator= argument in the sem function.

- Number of parameters. Thirty-six parameters were estimated, which, as we will see later, correspond to the factor loadings, directional paths (i.e., regressions), the covariance between the exogenous latent factors (i.e., RC and TM), latent factor variances, and (residual) error term variances of the observed variables (i.e., indicators) and the endogenous latent factors (i.e., JS, JP).

- Number of observations. Our effective sample size is 550. Had there been missing data on the observed variables, this portion of the output would have indicated how many of the observations were retained for the analysis given the missing data. How missing data are handled during estimation will depend on the type of missing data approach we apply, which is covered in more detail in the section called Estimating Models with Missing Data. By default, the sem function applies listwise deletion in the presence of missing data.

- Chi-square test. The chi-square (\(\chi^{2}\)) test assesses whether the model fits the data adequately, where a statistically significant \(\chi^{2}\) value (e.g., p \(<\) .05) indicates that the model does not fit the data well and a nonsignificant \(\chi^{2}\) value (e.g., p \(\ge\) .05) indicates that the model fits the data reasonably well. The null hypothesis for the \(\chi^{2}\) test is that the model fits the data perfectly, and thus failing to reject the null hypothesis provides some confidence that the model fits the data reasonably close to perfectly. Of note, the \(\chi^{2}\) test is sensitive to sample size and non-normal variable distributions. For this model, we find the \(\chi^{2}\) test in the output section labeled Model Test User Model. Because the p-value is equal to or greater than .05, we fail to reject the null hypothesis that the model fits the data perfectly and thus conclude that the model fits the data acceptably (\(\chi^{2}\) = 120.858, df = 100, p = .076). Finally, note that because the model’s degrees of freedom (i.e., 100) is greater than zero, we can conclude that the model is over-identified.

- Comparative fit index (CFI). As the name implies, the comparative fit index (CFI) is a type of comparative (or incremental) fit index, which means that CFI compares our estimated model to a baseline model, which is commonly referred to as the null or independence model. CFI is generally less sensitive to sample size than the chi-square (\(\chi^{2}\)) test. A CFI value greater than or equal to .95 generally indicates good model fit to the data, although some might relax that cutoff to .90. For this model, CFI is equal to .994, which indicates that the model fits the data acceptably.

- Tucker-Lewis index (TLI). Like CFI, the Tucker-Lewis index (TLI) is another type of comparative (or incremental) fit index. TLI is generally less sensitive to sample size than the chi-square test and tends to work well with smaller sample sizes; however, TLI may not be the best choice for smaller sample sizes (e.g., N \(<\) 250). A TLI value greater than or equal to .95 generally indicates good model fit to the data, although like CFI, some might relax that cutoff to .90. For this model, TLI is equal to .993, which indicates that the model fits the data acceptably.

- Loglikelihood and Information Criteria. The section labeled Loglikelihood and Information Criteria contains model fit indices that are not directly interpretable on their own (e.g., loglikelihood, AIC, BIC). Rather, they become more relevant when we wish to compare the fit of two or more non-nested models. Given that, we will ignore this section in this tutorial.

- Root mean square error of approximation (RMSEA). The root mean square error of approximation (RMSEA) is an absolute fit index that penalizes model complexity (e.g., models with a larger number of estimated parameters) and thus effectively rewards models that are more parsimonious. RMSEA values tend to be upwardly biased when the model degrees of freedom are fewer (i.e., when the model is closer to being just-identified); further, RMSEA may not be the best choice for smaller sample sizes (e.g., N \(<\) 250). In general, an RMSEA value that is less than or equal to .06 indicates good model fit to the data, although some relax that cutoff to .08 or even .10. For this model, RMSEA is .019, which indicates that the model fits the data acceptably.

- Standardized root mean square residual. Like the RMSEA, the standardized root mean square residual (SRMR) is an example of an absolute fit index. An SRMR value that is less than or equal to .06 generally indicates good fit to the data, although some relax that cutoff to .08. For this model, SRMR is equal to .025, which indicates that the model fits the data acceptably.
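The parameter counts and degrees of freedom reported for the two models can be verified with a little bookkeeping. The following base R sketch tallies the free parameters by type for the SRM and, for contrast, the four-factor CFA from the previous section; the helper function model_df and the category labels are hypothetical names introduced only for illustration.

```r
# Tally free parameters and degrees of freedom for a model with p indicators
model_df <- function(p, free_params) {
  moments <- p * (p + 1) / 2   # unique observed variances and covariances
  c(moments = moments,
    free    = sum(free_params),
    df      = moments - sum(free_params))
}

# Four-factor SRM: free parameters by type (16 indicators)
srm_params <- c(
  loadings      = 12,  # 16 loadings minus the 4 fixed to 1
  regressions   = 3,   # JS ~ RC, JS ~ TM, JP ~ JS
  covariances   = 1,   # RC ~~ TM
  indicator_res = 16,  # residual variances of the indicators
  exog_var      = 2,   # variances of RC and TM
  endog_res     = 2    # residual variances of JS and JP
)

# Four-factor CFA: all six factor covariances are freely estimated
cfa_params <- c(
  loadings      = 12,
  covariances   = 6,
  indicator_res = 16,
  factor_var    = 4
)

srm <- model_df(p = 16, free_params = srm_params)
cfa <- model_df(p = 16, free_params = cfa_params)
rbind(SRM = srm, CFA = cfa)
```

The SRM replaces the six latent factor covariances of the CFA with three directional paths and one covariance, which is why it estimates two fewer parameters and gains two degrees of freedom.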

In sum, the chi-square (\(\chi^{2}\)) test, CFI, TLI, RMSEA, and SRMR model fit indices all indicate that our model fit the data acceptably based on conventional rules of thumb and thresholds. This level of agreement, however, is not always going to occur. For instance, it is relatively common for the \(\chi^{2}\) test to indicate a lack of acceptable fit while one or more of the relative or absolute fit indices indicates that fit is acceptable given the limitations of the \(\chi^{2}\) test. Further, there may be instances where only two or three out of five of these model fit indices indicate acceptable model fit. In such instances, we should not necessarily toss out the model entirely, but we should consider whether there are model misspecifications. Of course, if all five model indices are well beyond the conventional thresholds (in a bad way), then our model may have some major misspecification issues, and we should proceed thoughtfully when interpreting the parameter estimates.

Evaluating parameter estimates. As noted above, our model showed acceptable fit to the data, so we can feel comfortable interpreting the parameter estimates. By default, the sem function provides unstandardized parameter estimates, but if you recall, we also requested standardized parameter estimates. In the output, the unstandardized parameter estimates fall under the column titled Estimate, whereas the standardized parameter estimates we’re interested in fall under the column titled Std.all. Assuming we have already evaluated the measurement structure using a CFA model, with an SRM, we are often most interested in reviewing the directional paths (relations) and covariances involving the latent factors, so we’ll begin with those parameter estimates.
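If the full summary output feels unwieldy, the directional paths and covariances can also be pulled into a compact table. The sketch below is a minimal, self-contained illustration that simulates data rather than using the chapter's data frame. The population values in pop_model, the item names other than RC1, and the object names sim_data, sim_fit, paths, and covs are all assumptions made for the example, so the resulting estimates will not match those reported in this chapter.

```r
library(lavaan)

# Hypothetical population model, used only to simulate example data
pop_model <- '
  RC =~ 0.8*RC1 + 0.8*RC2 + 0.8*RC3 + 0.8*RC4
  TM =~ 0.8*TM1 + 0.8*TM2 + 0.8*TM3 + 0.8*TM4
  JS =~ 0.8*JS1 + 0.8*JS2 + 0.8*JS3 + 0.8*JS4
  JP =~ 0.8*JP1 + 0.8*JP2 + 0.8*JP3 + 0.8*JP4
  JS ~ 0.4*RC + 0.3*TM
  JP ~ 0.4*JS
'
set.seed(2024)
sim_data <- simulateData(pop_model, sample.nobs = 550)

# Four-factor SRM: measurement model plus directional paths
srm_model <- '
  RC =~ RC1 + RC2 + RC3 + RC4
  TM =~ TM1 + TM2 + TM3 + TM4
  JS =~ JS1 + JS2 + JS3 + JS4
  JP =~ JP1 + JP2 + JP3 + JP4
  JS ~ RC + TM
  JP ~ JS
'
sim_fit <- sem(srm_model, data = sim_data)

# One row per parameter, with standardized estimates in std.all
est <- parameterEstimates(sim_fit, standardized = TRUE)
keep <- c("lhs", "op", "rhs", "est", "se", "z", "pvalue", "std.all")

# Directional paths use the "~" operator
paths <- est[est$op == "~", keep]

# Covariances use "~~" with different variables on each side
covs <- est[est$op == "~~" & est$lhs != est$rhs, keep]

print(paths)
print(covs)
```

In this table, est corresponds to the Estimate column of the summary output and std.all corresponds to the Std.all column.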

- Directional paths (relations). The output section labeled Regressions contains the directional path (relation) estimates that we specified. We can view the standardized and unstandardized parameter estimates, where the unstandardized estimates can be interpreted as unstandardized regression coefficients and the standardized estimates can be interpreted as standardized regression coefficients. First, the standardized directional path from RC to JS is statistically significant and positive, indicating that those with higher RC tend to have higher JS (.356, p < .001). Second, the standardized directional path from TM to JS is statistically significant and positive, indicating that those with higher TM tend to have higher JS (.300, p < .001). Third, the standardized directional path from JS to JP is statistically significant and positive, indicating that those with higher JS tend to have higher JP (.389, p < .001).

- Covariances. The output section labeled Covariances contains the covariance estimate for the two exogenous latent factors, which are those latent factors that do not serve as an outcome (i.e., RC, TM). As was the case with the directional paths, we can view the standardized and unstandardized parameter estimates, where the standardized covariance can be interpreted as a correlation. The correlation between RC and TM (.127) is statistically significant (p = .013), positive, and small in terms of practical significance.

- Factor loadings. The output section labeled Latent Variables contains our factor loadings. For this model, the loadings represent the effects of the latent factors for role clarity, task mastery, job satisfaction, and job performance on their respective items. By default, the sem function constrains the factor loading associated with the first indicator of each latent factor to 1 for model estimation purposes. Note, however, that there are still substantive standardized factor loadings for those first indicators, but they lack standard error (SE), z-value, and p-value estimates. We can still evaluate those standardized factor loadings, though. First, regarding the role clarity (RC) latent factor, all standardized factor loadings (.682-.801) fell within Bagozzi and Yi’s (1988) recommended range of .50-.95. Second, regarding the task mastery (TM) latent factor, all standardized factor loadings (.753-.801) fell within the recommended range of .50-.95. Third, regarding the job satisfaction (JS) latent factor, all standardized factor loadings (.734-.776) fell within the recommended range of .50-.95. Fourth, regarding the job performance (JP) latent factor, all standardized factor loadings (.752-.801) fell within the recommended range of .50-.95.

- Variances. The output section labeled Variances contains the (residual error) variance estimates for each observed indicator (i.e., item) of the latent factor and for the latent factor itself. As was the case with the factor loadings, we can view the standardized and unstandardized parameter estimates.

- (Residual error) variances for indicators. The estimates associated with the 16 indicator variables represent the residual error variances. Sometimes these are referred to as residual variances, disturbance terms, or uniquenesses. The standardized error variances ranged from .359 to .535, which can be interpreted as proportions of the variance not explained by the latent factor. For example, the latent factor RC failed to explain 53.5% of the variance in the indicator RC1, which is just outside the limit of what we conventionally consider to be acceptable; this suggests that 46.5% (100% - 53.5%) of the variance in indicator RC1 was explained by the latent factor RC. With the exception of the RC1 item’s error variance (.535), the indicator error variances were less than the recommended .50 threshold, which means that unmodeled constructs did not likely have a notable impact on the vast majority of the indicators. The standardized error variance for the RC1 item was just above the .50 recommended cutoff, and if we check the item’s content (“I understand what my job-related responsibilities are.”) and the construct’s conceptual definition (“the extent to which an individual understands what is expected of them in their job or role”), we see that the item fits within the conceptual definition boundaries; thus, we should retain the RC1 item.

- Variance of the latent factors. The variance estimate for a latent factor can provide an indication of the latent factor’s level of variability; however, its value depends on the scaling of factor loadings, and generally it is not a point of interest when evaluating CFA models. By default, the standardized variance for the latent factor will be equal to 1.000, and thus if we wished to evaluate the latent factor variance, we would interpret the unstandardized variance in this instance.

- R-squared. For all endogenous variables (i.e., those variables that serve as an outcome variable of some kind), an \(R^{2}\) estimate is provided. The \(R^{2}\) estimates associated with the indicator variables are equal to 1 minus the standardized (residual error) variance (see above), and thus they do not provide additional information. The \(R^{2}\) estimates associated with the endogenous latent factors, however, are of substantive interest. Specifically, for JS, \(R^{2}\) equaled .244, which indicates that collectively RC and TM explained 24.4% of the variance in JS, which is a small or small/medium effect. For JP, \(R^{2}\) equaled .151, which indicates that JS explained 15.1% of the variance in JP, which is a small effect.
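The standardized estimates discussed above are tied together by simple arithmetic, which the sketch below reproduces by hand as a sanity check. It rests on two assumptions that should be read as such: that the lowest RC loading (.682) belongs to the RC1 item, and that the .127 correlation from the Covariances section is the correlation between RC and TM, the two predictors of JS. All object names are hypothetical.

```r
# Standardized estimates reported in this chapter
loading_rc1 <- .682  # assumed: lowest RC loading belongs to RC1
b_rc_js     <- .356  # RC -> JS
b_tm_js     <- .300  # TM -> JS
b_js_jp     <- .389  # JS -> JP
r_rc_tm     <- .127  # assumed: correlation between RC and TM

# Indicator with a single latent predictor:
# residual variance = 1 - loading^2, and R-squared = 1 - residual variance
resid_rc1 <- 1 - loading_rc1^2
r2_rc1    <- 1 - resid_rc1

# Latent outcome with two correlated predictors:
# R-squared = b1^2 + b2^2 + 2 * b1 * b2 * r
r2_js <- b_rc_js^2 + b_tm_js^2 + 2 * b_rc_js * b_tm_js * r_rc_tm

# Latent outcome with a single predictor: R-squared = b^2
r2_jp <- b_js_jp^2

r2_summary <- round(c(resid_rc1 = resid_rc1, r2_rc1 = r2_rc1,
                      r2_js = r2_js, r2_jp = r2_jp), 3)
r2_summary
```

Under these assumptions, the hand-computed values round to .535 and .465 for RC1, .244 for JS, and .151 for JP, which agree with the estimates reported in the output.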


Visualize the path diagram. To visualize our four-factor SRM as a path diagram, we can use the semPaths function from the semPlot package. If you haven’t already, please install and access the semPlot package. While there are many arguments that can be used to refine the path diagram visualization, we will focus on just four to illustrate how the semPaths function works.

- As the first argument, insert the name of the fitted SRM object (srm_fit).
- As the second argument, specify what="std" to display the standardized parameter estimates.
- As the third argument, specify weighted=FALSE to request that the visualization not weight the edges (e.g., lines) and other plot features.
- As the fourth argument, specify nCharNodes=0 in order to use the full names of latent and observed indicator variables instead of abbreviating them.

# Visualize the four-factor SRM
semPaths(srm_fit,          # name of fitted model object
         what="std",       # display standardized parameter estimates
         weighted=FALSE,   # do not weight plot features
         nCharNodes=0)     # do not abbreviate names

The resulting path diagram can be useful for interpreting the model specifications and the parameter estimates.

Results write-up for the SRM. After their respective start dates, we assessed 550 new employees’ role clarity (1 month post-hire), task mastery (1 month post-hire), job satisfaction (6 months post-hire), and supervisor-rated job performance (6 months post-hire); missing data were not a concern. Each construct’s associated multi-item measure included four items. We hypothesized that higher role clarity and task mastery are associated with higher job satisfaction, and that higher job satisfaction is associated with higher job performance.

Before estimating a structural regression model to test those hypotheses, we evaluated the measurement structure of the four measures using a multi-factor confirmatory factor analysis model. We evaluated the model’s fit to the data using the chi-square (\(\chi^{2}\)) test, CFI, TLI, RMSEA, and SRMR model fit indices. The \(\chi^{2}\) test indicated that the model fit the data as well as a perfectly fitting model (\(\chi^{2}\) = 120.274, df = 98, p = .063). Further, the CFI and TLI estimates were .994 and .993, respectively, which exceeded the more stringent threshold of .95, thereby indicating that the model showed acceptable fit to the data. Similarly, the RMSEA estimate was .020, which was below the more stringent threshold of .06, thereby indicating that the model showed acceptable fit to the data. The SRMR estimate was .024, which was below the stringent threshold of .06, thereby indicating acceptable model fit to the data. Collectively, the model fit information indicated that the four-factor measurement model fit the data acceptably. Further, standardized factor loadings ranged from .682 to .802, which all fell well within the recommended .50-.95 acceptability range. The standardized error variances for items ranged from .358 to .534, and only one estimate, associated with the first role clarity item, was above the target threshold of .50, thereby indicating that it was unlikely that an unmodeled construct had an outsized influence on the items. The average variance extracted (AVE) estimates for role clarity, task mastery, job satisfaction, and job performance were .546, .608, .567, and .606, respectively, which all exceeded the .50 cutoff; thus, all four latent factors showed acceptable AVE levels. The composite reliability (CR) estimates for role clarity, task mastery, job satisfaction, and job performance were .827, .861, .840, and .860, respectively, and all indicated acceptable levels of internal consistency reliability. In sum, the four-factor measurement model showed acceptable fit to the data, acceptable parameter estimates, acceptable AVE estimates, and acceptable CR estimates; thus, using the four-factor measurement model, we proceeded with estimating a structural regression model to test our hypotheses.

For the structural regression model, the \(\chi^{2}\) test indicated that the model fit the data as well as a perfectly fitting model (\(\chi^{2}\) = 120.858, df = 100, p = .076). Further, the CFI and TLI estimates were .994 and .993, respectively, which exceeded the more stringent threshold of .95, thereby indicating that the model showed acceptable fit to the data. Similarly, the RMSEA estimate was .019, which was below the more stringent threshold of .06, thereby indicating that the model showed acceptable fit to the data. The SRMR estimate was .025, which was below the stringent threshold of .06, thereby indicating acceptable model fit to the data. Collectively, the model fit information indicated that the SRM fit the data acceptably. As hypothesized, the standardized directional paths (a) from role clarity to job satisfaction (.356, p < .001), (b) from task mastery to job satisfaction (.300, p < .001), and (c) from job satisfaction to job performance (.389, p < .001) were each statistically significant and positive.

58.2.6 Nested Model Comparisons