Chapter 59 Estimating Change Using Latent Growth Modeling

In this chapter, we will learn how to estimate change using latent growth modeling (LGM), which is part of the structural equation modeling (SEM) family of analyses. Specifically, we will learn how to estimate change trajectories to understand new employees’ adjustment into an organization.

59.1 Conceptual Overview

Latent growth modeling (LGM), also referred to as the latent growth model, is a latent variable modeling approach and is part of the structural equation modeling (SEM) family of analyses (Meredith and Tisak 1990). LGM also goes by other names such as latent curve analysis, latent growth curve modeling, or latent curve modeling. LGM is a useful statistical tool for estimating the extent to which employees’ levels of a particular construct change over time. Specifically, LGM helps us understand the functional form of change (e.g., linear, quadratic, cubic) and variation in change between employees. Although not the focus of this chapter, measurement models can be integrated into LGM through the implementation of confirmatory factor analysis (CFA); CFA was introduced in a previous chapter. For a comprehensive introduction to LGM (as well as other types of longitudinal SEM, e.g., latent state-trait models, growth mixture models), I recommend the book by Newsom (2024).

In LGM, the intercept (or initial value) and slope (or functional form of change) are represented as latent variables (i.e., latent factors), which by nature are not directly measured. Instead, observed (manifest) variables serve as indicators of the latent factors. Further, the intercept and slope latent factors provide information about the average intercept and slope for the population, as well as the between-person variation for the intercept and slope.
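
In equation form, a linear LGM of the kind described above is often written as shown below. This is a sketch in one common notation; the symbols \(y_{ti}\), \(\eta_{0i}\), \(\eta_{1i}\), \(\lambda_{t}\), and \(\varepsilon_{ti}\) are assumptions introduced here for illustration and are not used elsewhere in this chapter.

\[
y_{ti} = \eta_{0i} + \lambda_{t}\,\eta_{1i} + \varepsilon_{ti}
\]

Here \(y_{ti}\) is the observed score for person \(i\) at measurement occasion \(t\), \(\eta_{0i}\) and \(\eta_{1i}\) are that person's values on the intercept and slope latent factors, \(\lambda_{t}\) is the fixed slope factor loading for occasion \(t\), and \(\varepsilon_{ti}\) is the residual. The means of \(\eta_{0i}\) and \(\eta_{1i}\) correspond to the average intercept and slope, and their variances capture the between-person variation.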

59.1.1 Path Diagrams

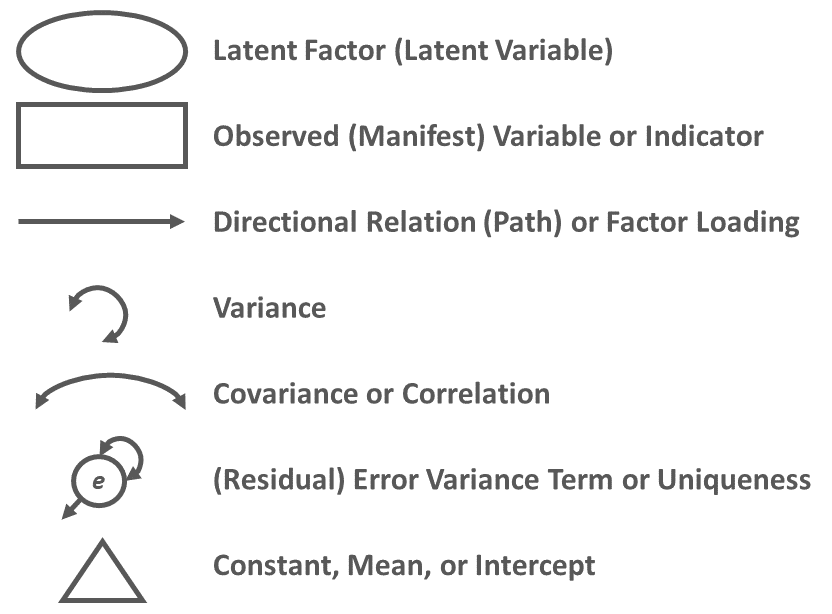

It is often helpful to visualize an LGM using a path diagram. A path diagram displays the model parameter specifications and can also include parameter estimates. Conventional path diagram symbols are shown in Figure 1.

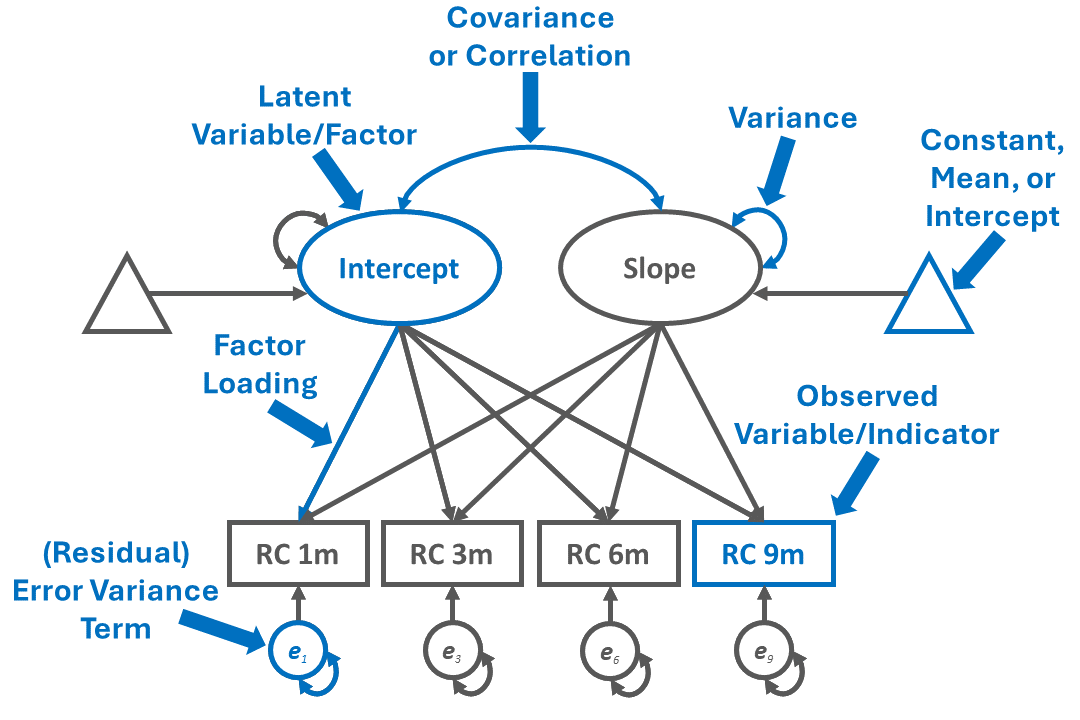

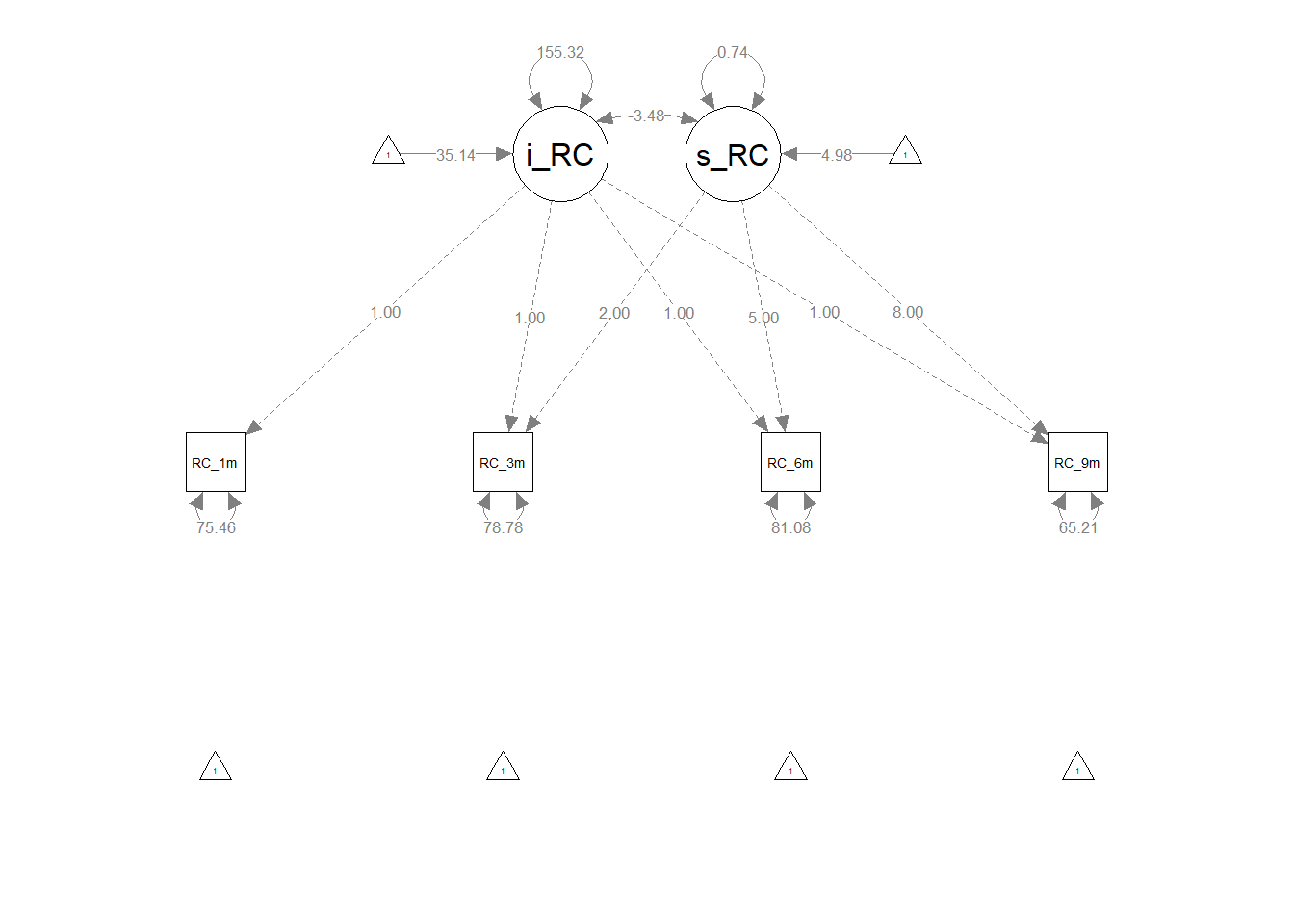

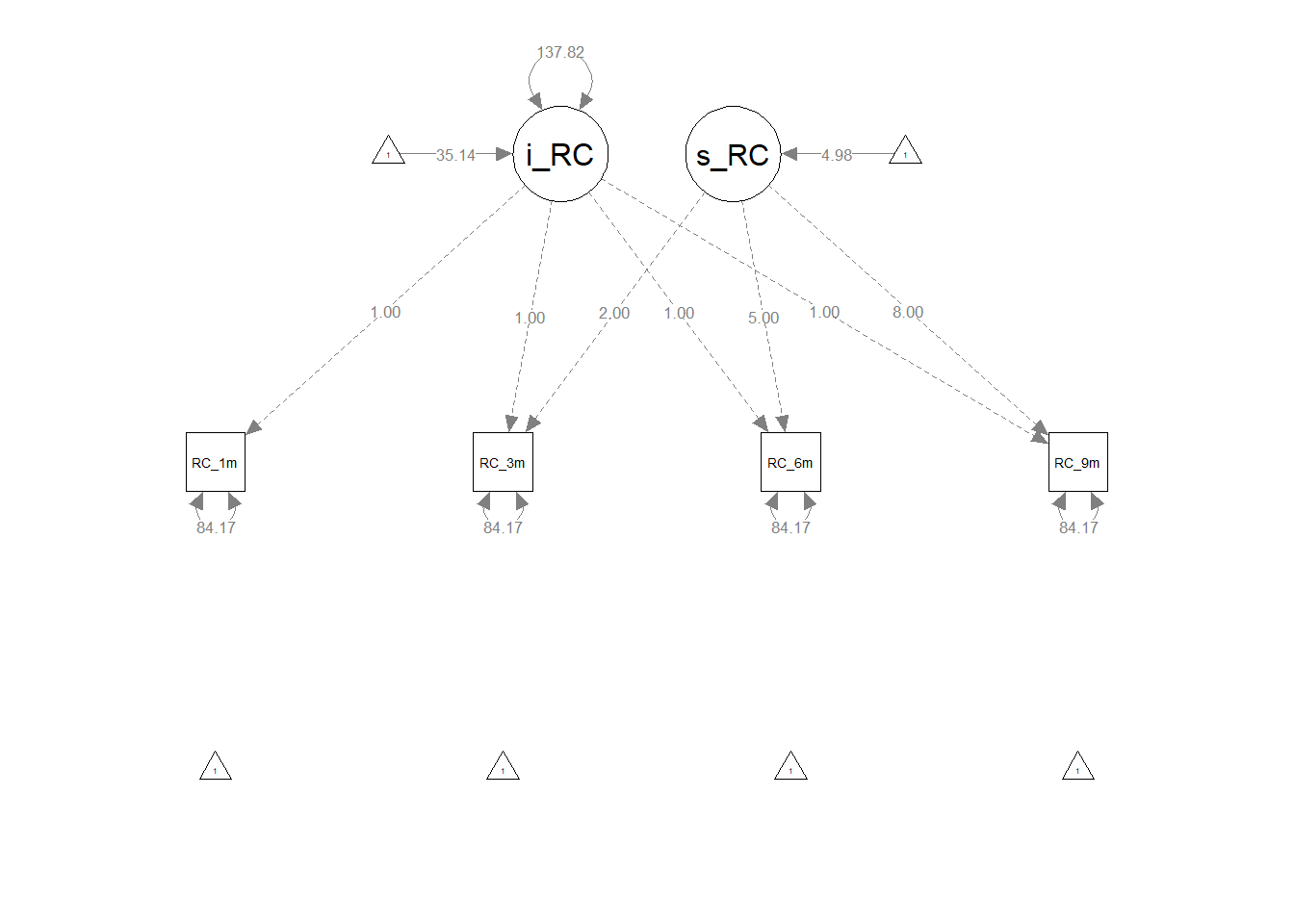

For an example of how the path diagram symbols can be used to construct a visual depiction of an LGM, please reference Figure 2. The path diagram depicts an unconditional unconstrained LGM with composite role clarity (RC) variables as observed measures (i.e., indicators). The model is unconstrained in the sense that the following parameters are freely estimated: means and variances for the intercept and slope latent factors, the covariance between the intercept and slope latent factors, and (residual) error variances for the observed variables. The model is considered unconditional because it is not conditioned on another variable, such as a time-invariant predictor variable. Further, role clarity (RC) measured at 1 month, 3 months, 6 months, and 9 months post-start-date serves as the set of observed variables (i.e., RC 1m, RC 3m, RC 6m, RC 9m, respectively). That is, the RC observed measures serve as indicators of the intercept and slope latent factors, such that the indicators are reflective of the latent factors. Putting it all together, the unconditional unconstrained LGM can offer a glimpse into (a) whether employees’ levels of role clarity change on average in a particular direction (i.e., increase, decrease, no change) and (b) whether employees vary in terms of how their role clarity changes (if at all).

By convention, the latent factors for the role clarity intercept and slope are each represented by an oval or circle. Please note that the latent factors are not directly measured; rather, we infer information about them from their four indicators, which in this example correspond to the four measurement occasions for role clarity.

The intercept and slope latent factors have variance terms associated with them, which represent the latent factors’ variabilities; these variance terms, which are represented by double-headed arrows, reflect the extent to which the intercept and slope estimates vary between individuals.

The intercept and slope latent factors also have mean (i.e., intercept, constant) terms associated with them, which are represented by triangles; these means reflect the average intercept and slope for the population.

The association between the intercept and slope latent factors is depicted using a double-headed arrow, which is referred to as a covariance or, if standardized, a correlation.

Each of the four observed variables (indicators) is represented with a rectangle. The one-directional, single-headed arrows extending from the latent factors to the observed variables represent the factor loadings. Each indicator has a (residual) error variance term, which represents the amount of variance left unexplained by the latent factors in relation to each indicator.

59.1.2 Model Identification

Model identification has to do with the number of (free or freely estimated) parameters specified in the model relative to the number of unique (non-redundant) sources of information available, and model identification has important implications for assessing model fit and estimating model parameters.

Just-identified: In a just-identified model (i.e., saturated model), the number of freely estimated parameters (e.g., factor loadings, covariances, variances) is equal to the number of unique (non-redundant) sources of information, which means that the degrees of freedom (df) is equal to zero. In just-identified models, the model parameter standard errors can be estimated, but the model fit cannot be assessed in a meaningful way using traditional model fit indices.

Over-identified: In an over-identified model, the number of freely estimated parameters is less than the number of unique (non-redundant) sources of information, which means that the degrees of freedom (df) is greater than zero. In over-identified models, traditional model fit indices and parameter standard errors can be estimated.

Under-identified: In an under-identified model, the number of freely estimated parameters is greater than the number of unique (non-redundant) sources of information, which means that the degrees of freedom (df) is less than zero. In under-identified models, the model parameter standard errors and model fit cannot be estimated. Some might say under-identified models are overparameterized because they have more parameters to be estimated than unique sources of information.

Most (if not all) statistical software packages that allow structural equation modeling (and by extension, latent growth modeling) automatically compute the degrees of freedom for a model or, if the model is under-identified, provide an error message. As such, we don’t need to count the number of unique (non-redundant) sources of information and free parameters by hand. With that said, to understand model identification and its various forms at a deeper level, it is often helpful to practice calculating the degrees of freedom by hand when first learning.

The formula for calculating the number of unique (non-redundant) sources of information available for a particular model is shown below. It differs from the formula for confirmatory factor analysis and other structural equation models because it incorporates repeated measurement occasions over time:

\(i = \frac{p(p+1)}{2} + t\)

where \(p\) is the number of observed variables to be modeled, and \(t\) is the number of measurement occasions (i.e., time points).

In the unconditional unconstrained LGM path diagram described above, there are four observed variables: RC 1m, RC 3m, RC 6m, and RC 9m. Accordingly, in the following formula, \(p\) is equal to 4. There are four measurement occasions (i.e., 1 month, 3 months, 6 months, 9 months), so \(t\) is equal to 4. Thus, the number of unique (non-redundant) sources of information is 14.

\(i = \frac{4(4+1)}{2} + 4 = \frac{20}{2} + 4 = 14\)

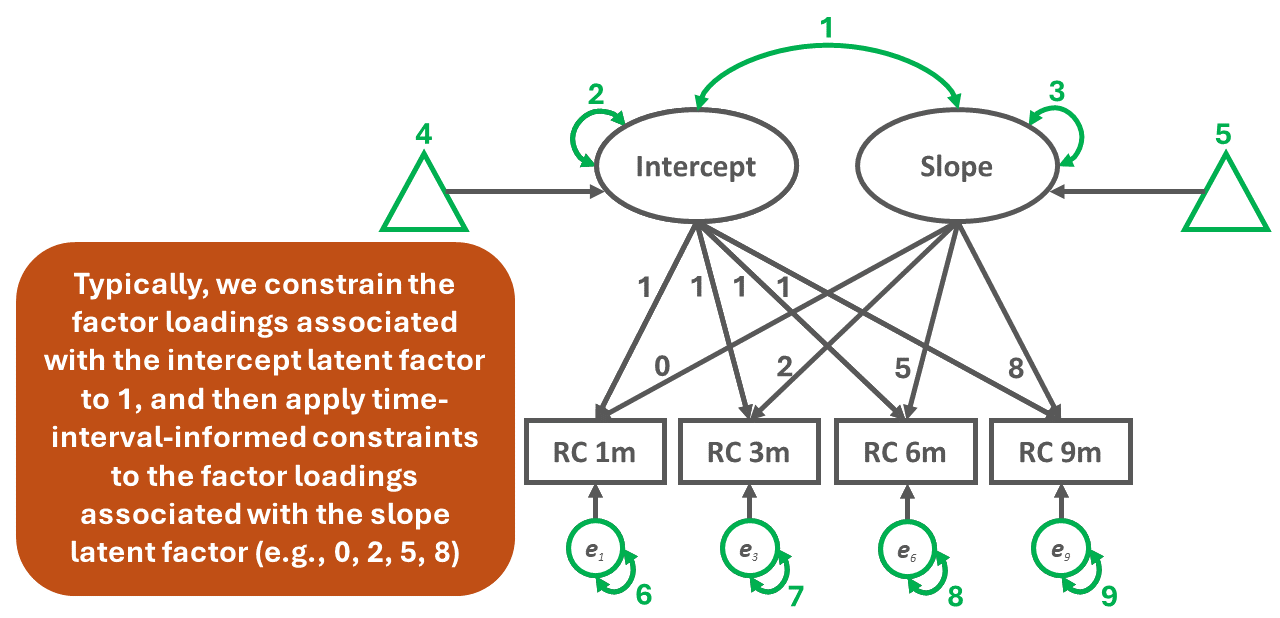

To count the number of free parameters (\(k\)), simply add up the number of freely estimated factor loadings, variances, means, intercepts, covariances, and (residual) error variance terms in the unconditional unconstrained LGM. Please note that for latent factor scaling and time coding purposes, we typically (a) constrain all of the factor loadings associated with the intercept latent factor to 1.0 and (b) constrain the factor loadings associated with the slope latent factor to values that represent the time intervals (e.g., 0, 2, 5, 8), where the loading of zero marks the measurement occasion at which the intercept is defined. Had there been equal intervals for the measurement occasions for role clarity, we could have scaled the slope latent factor with factor loadings equal to 0, 1, 2, and 3; however, because the interval between RC 1m (1 month) and RC 3m (3 months) is not equal to the intervals between the other adjacent measurement occasions, we need to adjust the factor loadings by, in this instance, subtracting 1 from each measurement occasion month, so that RC 1m has a factor loading of 0, RC 3m has a factor loading of 2, RC 6m has a factor loading of 5, and RC 9m has a factor loading of 8. As shown in Figure 3, the example unconditional unconstrained LGM has 9 free parameters: two latent factor means, two latent factor variances, one covariance between the latent factors, and four (residual) error variances.

\(k = 9\)

To calculate the degrees of freedom (df) for the model, we need to subtract the number of free parameters from the number of unique (non-redundant) sources of information, which in this example equates to 14 minus 9. Thus, the degrees of freedom for the model is 5, which means the model is over-identified.

\(df = i - k = 14 - 9 = 5\)
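
The hand calculation above can be reproduced with a few lines of base R. This is only a sketch for practice; the object names (e.g., `n_occasions`, `df_model`) are chosen here for illustration, and `df_model` is used instead of `df` so that it does not clash with the data frame object named `df` in the tutorial.

```r
# Unique (non-redundant) sources of information: i = p(p + 1)/2 + t
p <- 4            # observed variables: RC 1m, RC 3m, RC 6m, RC 9m
n_occasions <- 4  # t in the formula: 1, 3, 6, and 9 months
info <- p * (p + 1) / 2 + n_occasions

# Free parameters in the unconditional unconstrained LGM
free_params <- c(
  latent_means       = 2,  # intercept and slope means
  latent_variances   = 2,  # intercept and slope variances
  latent_covariance  = 1,  # intercept-slope covariance
  residual_variances = 4   # one per observed variable
)
k <- sum(free_params)

# Degrees of freedom and identification status
df_model <- info - k
identification <- if (df_model > 0) {
  "over-identified"
} else if (df_model == 0) {
  "just-identified"
} else {
  "under-identified"
}

c(i = info, k = k, df = df_model)
identification
```

Changing the counts in `free_params` (for example, setting `residual_variances` to 1 to mimic an equality constraint) shows how constraints add degrees of freedom.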

59.1.3 Model Fit

When a model is over-identified (df > 0), the extent to which the specified model fits the data can be assessed using a variety of model fit indices, such as the chi-square (\(\chi^{2}\)) test, comparative fit index (CFI), Tucker-Lewis index (TLI), root mean square error of approximation (RMSEA), and standardized root mean square residual (SRMR). As noted by Newsom (2024), in the context of an LGM, these model fit indices reflect the amount of error around estimated slopes; consequently, a poorly fitting model does not necessarily imply an absence of change over time, nor does it necessarily speak to the accuracy of the functional form of change. For a commonly cited reference on cutoffs for fit indices, please refer to Hu and Bentler (1999), and for a concise description of common guidelines regarding interpreting model fit indices, including differences between stringent and relaxed interpretations of common fit indices, I recommend Nye (2023). With that being said, those papers focus on confirmatory factor analysis as opposed to LGM. Regardless of which cutoffs we apply when interpreting fit indices, we must remember that such cutoffs are merely guidelines, and it’s possible to estimate an acceptable model that meets some but not all of the cutoffs given the limitations of some fit indices.

Chi-square test. The chi-square (\(\chi^{2}\)) test can be used to assess whether the model fits the data adequately, where a statistically significant \(\chi^{2}\) value (e.g., p \(<\) .05) indicates that the model does not fit the data well and a nonsignificant \(\chi^{2}\) value (e.g., p \(\ge\) .05) indicates that the model fits the data reasonably well (Bagozzi and Yi 1988). The null hypothesis for the \(\chi^{2}\) test is that the model fits the data perfectly, and thus failing to reject the null hypothesis provides some confidence that the model fits the data reasonably close to perfectly. Of note, the \(\chi^{2}\) test is sensitive to sample size and non-normal variable distributions.
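
To see where the p value for the \(\chi^{2}\) test comes from, the sketch below uses base R's `pchisq` function with the \(\chi^{2}\) and df values reported in this chapter's sample write-up (3.760 and 5). The object names are illustrative assumptions, and the .05 threshold is the stringent cutoff discussed in this section.

```r
# Chi-square value and degrees of freedom from the sample write-up
chisq_value <- 3.760
df_model <- 5

# Upper-tail probability of the chi-square distribution
p_value <- pchisq(chisq_value, df = df_model, lower.tail = FALSE)
round(p_value, 3)

# Nonsignificant (p >= .05) means we fail to reject the null
# hypothesis of perfect fit
fits_adequately <- p_value >= .05
fits_adequately
```

The resulting p value should agree, up to rounding, with the p = .584 reported in the sample write-up.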

Comparative fit index (CFI). As the name implies, the comparative fit index (CFI) is a type of comparative (or incremental) fit index, which means that CFI compares the focal model to a baseline model, which is commonly referred to as the null or independence model. CFI is generally less sensitive to sample size than the chi-square test. A CFI value greater than or equal to .95 generally indicates good model fit to the data, although some might relax that cutoff to .90.

Tucker-Lewis index (TLI). Like CFI, the Tucker-Lewis index (TLI) is another type of comparative (or incremental) fit index. TLI is generally less sensitive to sample size than the chi-square test and tends to work well with smaller sample sizes; however, as Hu and Bentler (1999) noted, TLI may not be the best choice for smaller sample sizes (e.g., N \(<\) 250). A TLI value greater than or equal to .95 generally indicates good model fit to the data, although some might relax that cutoff to .90.

Root mean square error of approximation (RMSEA). The root mean square error of approximation (RMSEA) is an absolute fit index that penalizes model complexity (e.g., models with a larger number of estimated parameters) and thus ends up effectively rewarding more parsimonious models. RMSEA values tend to be upwardly biased when the model has fewer degrees of freedom (i.e., when the model is closer to being just-identified); further, Hu and Bentler (1999) noted that RMSEA may not be the best choice for smaller sample sizes (e.g., N \(<\) 250). In general, an RMSEA value that is less than or equal to .06 indicates good model fit to the data, although some relax that cutoff to .08 or even .10.
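
As a rough illustration of how RMSEA relates to the \(\chi^{2}\) value, degrees of freedom, and sample size, the sketch below implements one commonly presented formula for the RMSEA point estimate. Software packages differ in details (for example, whether N or N - 1 appears in the denominator), so this is an approximation for intuition rather than a reproduction of any particular program's output. The function name `rmsea_from_chisq` and the second set of input values are hypothetical.

```r
# One commonly presented RMSEA formula (an assumption of this sketch):
# sqrt( max(chisq - df, 0) / (df * (N - 1)) )
rmsea_from_chisq <- function(chisq, df, n) {
  sqrt(max(chisq - df, 0) / (df * (n - 1)))
}

# Values from the sample write-up: chi-square is smaller than df,
# so the estimate is truncated at zero
rmsea_writeup <- rmsea_from_chisq(chisq = 3.760, df = 5, n = 650)

# HYPOTHETICAL poorly fitting model with the same df and sample size
rmsea_misfit <- rmsea_from_chisq(chisq = 25, df = 5, n = 650)

round(c(writeup = rmsea_writeup, hypothetical = rmsea_misfit), 3)
```

Because df appears in the denominator, the same excess of \(\chi^{2}\) over df yields a smaller RMSEA when the model has more degrees of freedom, which is how the index rewards parsimony.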

Standardized root mean square residual (SRMR). Like the RMSEA, the standardized root mean square residual (SRMR) is an example of an absolute fit index. An SRMR value that is less than or equal to .06 generally indicates good fit to the data, although some relax that cutoff to .08.

Summary of model fit indices. The conventional cutoffs for the aforementioned model fit indices – like any rule of thumb – should be applied with caution and with good judgment and intention. Further, these indices don’t always agree with one another, which means that we often look across multiple fit indices and come up with our best judgment of whether the model adequately fits the data. A poorly fitting model may be due to model misspecification, an inappropriate model estimator, a large amount of error around slope estimates, or other factors that need to be addressed. With that being said, we should also be careful to not toss out a model entirely if one or more of the model fit indices suggest less than acceptable levels of fit to the data. The table below contains the conventional stringent and more relaxed cutoffs for the model fit indices.

| Fit Index | Stringent Cutoffs for Acceptable Fit | Relaxed Cutoffs for Acceptable Fit |
|---|---|---|
| \(\chi^{2}\) | \(p \ge .05\) | \(p \ge .01\) |
| CFI | \(\ge .95\) | \(\ge .90\) |
| TLI | \(\ge .95\) | \(\ge .90\) |
| RMSEA | \(\le .06\) | \(\le .08\) |
| SRMR | \(\le .06\) | \(\le .08\) |
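
The cutoffs in the table can be applied programmatically. The base R sketch below stores the cutoffs in a data frame and checks a set of fit estimates against them; the helper name `check_fit` is hypothetical, and the example estimates are those reported in this chapter's sample write-up. As emphasized above, the result is a guideline and not a verdict.

```r
# Cutoffs from the table above
cutoffs <- data.frame(
  index     = c("chisq_p", "CFI", "TLI", "RMSEA", "SRMR"),
  direction = c(">=", ">=", ">=", "<=", "<="),
  stringent = c(.05, .95, .95, .06, .06),
  relaxed   = c(.01, .90, .90, .08, .08),
  stringsAsFactors = FALSE
)

# Hypothetical helper: compare named fit estimates with the cutoffs
check_fit <- function(values, cutoffs) {
  est <- unname(values[cutoffs$index])
  meets <- function(cut) {
    ifelse(cutoffs$direction == ">=", est >= cut, est <= cut)
  }
  data.frame(
    index           = cutoffs$index,
    estimate        = est,
    meets_stringent = meets(cutoffs$stringent),
    meets_relaxed   = meets(cutoffs$relaxed),
    stringsAsFactors = FALSE
  )
}

# Estimates reported in the sample write-up
writeup_values <- c(chisq_p = .584, CFI = 1.000, TLI = 1.001,
                    RMSEA = .00, SRMR = .019)
fit_check <- check_fit(writeup_values, cutoffs)
fit_check
```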

59.1.4 Parameter Estimates

In LGM, there are various types of parameter estimates, which correspond to the path diagram symbols covered earlier (e.g., covariance, variance, mean, factor loading). When a model is just-identified or over-identified, we can estimate the standard errors for freely estimated parameters, which allows us to evaluate statistical significance. With most software applications, we can request standardized parameter estimates, which can facilitate the interpretation of some parameters.

Factor loadings. In LGM, it is common to apply constraints to all factor loadings extending from the intercept and slope latent factors to the observed variables; with that said, we can freely estimate some factor loadings associated with the slope factor, but a discussion of that topic is beyond the scope of this introductory chapter. For our purposes, we will constrain all factor loadings associated with the intercept latent factor to 1, whereas the factor loadings associated with the slope latent factor will reflect the intervals between measurement occasions (i.e., time coding). If we were to have four measurement occasions with equally spaced time intervals between adjacent occasions, then we could apply the following factor loadings to the slope factor: 0, 1, 2, and 3. Note, however, that we have a great deal of freedom when it comes to constraining the slope factor loadings, and the measurement occasion whose factor loading we constrain to zero determines where the intercept is located; that is, the intercept represents the expected value of our construct (e.g., role clarity) at that occasion. For example, we could constrain our slope factor loadings to -3, -2, -1, and 0 if our goal were to estimate the intercept at the last measurement occasion. With respect to the LGM path diagram that has been our focus thus far (see Figure 2), we constrained our slope factor loadings to 0, 2, 5, and 8 because the intervals are not equal between adjacent measurement occasions. Namely, the interval between the 1-month and 3-month role clarity measurement occasions is 2 months (3 - 1 = 2), whereas the intervals between all other adjacent measurement occasions are 3 months (6 - 3 = 3 and 9 - 6 = 3). To account for the unequal intervals, I simply subtracted one from each measurement occasion month to arrive at the 0, 2, 5, and 8 factor loadings.

Variances. The variances of the latent factors represent the amount of between-person variability associated with intercept and slope estimates. A significant and large variance associated with the intercept latent factor suggests that individuals’ intercept estimates vary considerably from one another, whereas a significant and large variance associated with the slope latent factor suggests that individuals’ slope estimates vary considerably from one another.
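
The time coding described above can be derived directly from the measurement occasion months. The base R sketch below computes the slope factor loadings and then assembles them into lavaan-style model syntax, which is the kind of input the `growth` function from the lavaan package (listed in this chapter's function table) accepts. The latent factor labels `i` and `s`, the helper `loading_terms`, and the other object names are assumptions made for illustration; only a character string is built here, and no model is estimated.

```r
# Measurement occasions in months, named after the observed variables
months <- c(RC_1m = 1, RC_3m = 3, RC_6m = 6, RC_9m = 9)

# Intercept located at the first occasion: subtract the first month
slope_first <- months - min(months)

# Alternative coding: intercept located at the last occasion
slope_last <- months - max(months)

# Hypothetical helper: turn named loadings into "value*variable" terms
loading_terms <- function(loadings) {
  paste0(loadings, "*", names(loadings), collapse = " + ")
}

# Intercept loadings are all fixed to 1
intercept_loadings <- setNames(rep(1, length(months)), names(months))

lgm_syntax <- paste0(
  "i =~ ", loading_terms(intercept_loadings), "\n",
  "s =~ ", loading_terms(slope_first)
)
cat(lgm_syntax)
```

Swapping `slope_first` for `slope_last` in the last step changes only the interpretation of the intercept, which would then refer to role clarity at 9 months.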

Means. The means of the latent factors represent the average intercept and slope estimates. For example, with respect to the slope factor, a significant and positive mean indicates that, on average, individuals’ slopes were positive (e.g., increase in the measured construct over time), a nonsignificant mean indicates that, on average, individuals’ slopes were approximately zero (e.g., no change in the measured construct over time), and a significant and negative mean indicates that, on average, individuals’ slopes were negative (e.g., decrease in the measured construct over time).

Covariances. The covariance between the latent factors helps us understand the extent to which intercept estimates are associated with slope estimates. For example, a negative covariance between the intercept and slope latent factors indicates that individuals who have higher intercept estimates tend to have less positive slope estimates, whereas a positive covariance indicates that individuals who have higher intercept estimates tend to have more positive slope estimates. When standardized, a covariance can be interpreted as a correlation.

(Residual) error variance terms. The (residual) error variance terms, which are also known as disturbance terms or uniquenesses, indicate how much variance is left unexplained by the latent factors in relation to the observed variables (indicators). When standardized, error variance terms represent the proportion (percentage) of variance that remains unexplained by the latent factors.

59.1.5 Model Comparisons

When evaluating an LGM, we may wish to evaluate whether a focal LGM performs better (or worse) than an alternative LGM. Comparing models can help us arrive at a more parsimonious model that still fits the data well, as well as help us understand the extent to which a construct demonstrates measurement invariance (or measurement equivalence) over time.

As an example, imagine we are focusing on our unconditional unconstrained LGM for role clarity (see Figure 2). Now imagine that we specify an alternative model in which we constrain the (residual) error variances to be equal, which could be used as a test for homogeneity of error variances. We can compare those two models to determine whether the alternative model fits the data about the same as our focal model or worse.

When two models are nested, we can perform nested model comparisons. As a reminder, a nested model has all the same parameters as a full model but has additional parameter constraints in place. If two models are nested, we can compare them using model fit indices like CFI, TLI, RMSEA, and SRMR. We can also use the chi-square difference (\(\Delta \chi^{2}\)) test (likelihood ratio test) to compare nested models, which provides a statistical test for nested-model comparisons.

When two models are not nested, we can use other model fit indices like the Akaike Information Criterion (AIC) and Bayesian Information Criterion (BIC). With respect to these indices, the better fitting model will have lower AIC and BIC values.
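
To make the chi-square difference test concrete, the base R sketch below compares the focal unconditional unconstrained LGM with the alternative model in which the four (residual) error variances are constrained to be equal. The focal model's \(\chi^{2}\) and df come from this chapter's sample write-up; the constrained model's \(\chi^{2}\) value is HYPOTHETICAL and was made up purely for illustration. Its df of 8 follows from replacing four freely estimated error variances with a single one, which frees up three degrees of freedom.

```r
# Focal model (values from the sample write-up)
focal <- list(chisq = 3.760, df = 5)

# Nested model with equal error variances
# (chi-square is HYPOTHETICAL; df = 5 + 3 because three fewer
# parameters are estimated)
constrained <- list(chisq = 12.500, df = 8)

# Chi-square difference test
delta_chisq <- constrained$chisq - focal$chisq
delta_df <- constrained$df - focal$df
p_diff <- pchisq(delta_chisq, df = delta_df, lower.tail = FALSE)

# A significant difference suggests the added constraints worsen fit
worse_fit <- p_diff < .05

c(delta_chisq = delta_chisq, delta_df = delta_df, p = round(p_diff, 3))
worse_fit
```

With these made-up numbers the difference is significant at the .05 level, so the constrained model would be judged to fit worse than the focal model; a nonsignificant difference would instead favor the more parsimonious constrained model.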

59.1.6 Statistical Assumptions

The statistical assumptions that should be met prior to estimating and/or interpreting an LGM will depend on the type of estimation method. Common estimation methods for an LGM include (but are not limited to) maximum likelihood (ML), maximum likelihood with robust standard errors (MLM or MLR), weighted least squares (WLS), and diagonally weighted least squares (DWLS). WLS and DWLS estimation methods are used when there are observed variables with nominal or ordinal (categorical) measurement scales. In this chapter, we will focus on ML estimation, which is a common method when observed variables have interval or ratio (continuous) measurement scales. As Kline (2011) notes, ML estimation carries with it the following assumptions: “The statistical assumptions of ML estimation include independence of the scores, multivariate normality of the endogenous variables, and independence of the exogenous variables and error terms” (p. 159). When multivariate non-normality is a concern, the MLM or MLR estimator is a better choice than the ML estimator, where the MLR estimator allows for missing data and the MLM estimator does not.

59.1.6.1 Sample Write-Up

As part of a new-employee onboarding longitudinal study, surveys were administered 1 month, 3 months, 6 months, and 9 months after employees’ respective start dates. Each survey included a 15-item measure of role clarity, and a composite variable based on the employees’ average responses was created at each survey measurement occasion. Using an unconditional unconstrained latent growth model (LGM), we investigated whether new employees’ levels of role clarity showed linear change over time and whether role-clarity change varied between new employees. When specifying the model, we constrained the intercept factor loadings to 1, and constrained the slope factor loadings to 0, 2, 5, and 8 to account for the unequal time intervals between adjacent measurement occasions. We estimated the model using the maximum likelihood (ML) estimator and a sample size of 650 new employees. Missing data were not a concern. We evaluated the model’s fit to the data using the chi-square (\(\chi^{2}\)) test, CFI, TLI, RMSEA, and SRMR model fit indices. The \(\chi^{2}\) test was nonsignificant, indicating that the model’s fit did not differ significantly from that of a perfectly fitting model (\(\chi^{2}\) = 3.760, df = 5, p = .584). Further, the CFI and TLI estimates were 1.000 and 1.001, respectively, which exceeded the more stringent threshold of .95, thereby indicating that the model showed acceptable fit to the data. Similarly, the RMSEA estimate was .00, which was below the more stringent threshold of .06, thereby indicating that the model showed acceptable fit to the data. The SRMR estimate was .019, which was below the stringent threshold of .06, thereby indicating acceptable model fit to the data. Collectively, the model fit information indicated that the model fit the data acceptably.

Regarding the unstandardized parameter estimates, the intercept latent factor mean of 35.143 (p < .001) indicated that, on average, new employees’ level of role clarity was 35.143 (out of a possible 100) 1 month after their respective start dates. The slope latent factor mean of 4.975 (p < .001) indicated that, on average, new employees’ level of role clarity increased by 4.975 each month. The variances associated with the intercept and slope latent factors, however, indicated that there was a statistically significant amount of between-employee intercept and slope variation (\(\sigma^{2}_{intercept}\) = 155.324, p < .001; \(\sigma^{2}_{slope}\) = .744, p < .001). The statistically significant and negative covariance between the intercept and slope latent factors indicated that employees with higher levels of role clarity 1 month after their respective start dates tended to have less positive role clarity change trajectories (\(\psi\) = -3.483, p = .002). Finally, the standardized (residual) error terms associated with the four observed role clarity measurement occasions ranged from .307 to .368, indicating that a proportionally small amount of variance was left unexplained by the intercept and slope latent factors.

In sum, the estimated unconditional unconstrained LGM showed that new employees’ role clarity, on average, increased in a linear manner between 1 month and 9 months after employees’ respective start dates. Further, new employees varied with respect to their level of role clarity 1 month after their start dates, and their role clarity change trajectories varied in terms of slope. A conditional model with a time-invariant predictor or time-variant predictors may help explain between-employee differences in intercepts and slopes.
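
The estimates reported in the write-up can be combined to show what the model implies. The base R sketch below, with object names chosen for illustration, computes the model-implied average role clarity at each measurement occasion (intercept mean plus slope mean times the slope factor loading) and converts the intercept-slope covariance into a correlation by dividing it by the product of the two latent factors' standard deviations.

```r
# Unstandardized estimates reported in the sample write-up
int_mean      <- 35.143
slope_mean    <- 4.975
int_var       <- 155.324
slope_var     <- 0.744
int_slope_cov <- -3.483

# Slope factor loadings (time coding) for the four occasions
slope_loadings <- c(RC_1m = 0, RC_3m = 2, RC_6m = 5, RC_9m = 8)

# Model-implied average role clarity at each occasion
implied_means <- int_mean + slope_mean * slope_loadings
round(implied_means, 3)

# Standardized covariance (correlation) between intercept and slope
int_slope_cor <- int_slope_cov / sqrt(int_var * slope_var)
round(int_slope_cor, 3)
```

The implied means rise from 35.143 at 1 month to 74.943 at 9 months, and the correlation of roughly -.32 expresses the same negative intercept-slope association as the covariance on a standardized scale.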

59.2 Tutorial

This chapter’s tutorial demonstrates how to estimate change using latent growth modeling (LGM) in R.

59.2.2 Functions & Packages Introduced

| Function | Package |
|---|---|
| `pivot_longer` | `tidyr` |
| `mutate` | `dplyr` |
| `case_match` | `dplyr` |
| `ggplot` | `ggplot2` |
| `aes` | `ggplot2` |
| `geom_line` | `ggplot2` |
| `labs` | `ggplot2` |
| `scale_x_continuous` | `ggplot2` |
| `theme_classic` | `ggplot2` |
| `slice` | `dplyr` |
| `growth` | `lavaan` |
| `summary` | base R |
| `semPaths` | `semPlot` |
| `anova` | base R |
| `options` | base R |
| `inspect` | `lavaan` |
| `cbind` | base R |
| `rbind` | base R |
| `t` | base R |
| `ampute` | `mice` |

59.2.3 Initial Steps

If you haven’t already, save the file called “lgm.csv” into a folder that you will subsequently set as your working directory. Your working directory will likely be different than the one shown below (i.e., "H:/RWorkshop"). As a reminder, you can access all of the data files referenced in this book by downloading them as a compressed (zipped) folder from my GitHub site: https://github.com/davidcaughlin/R-Tutorial-Data-Files; once you’ve followed the link to GitHub, just click “Code” (or “Download”) followed by “Download ZIP”, which will download all of the data files referenced in this book. For the sake of parsimony, I recommend downloading all of the data files into the same folder on your computer, which will allow you to set that same folder as your working directory for each of the chapters in this book.

Next, using the setwd function, set your working directory to the folder in which you saved the data file for this chapter. Alternatively, you can manually set your working directory folder in your drop-down menus by going to Session > Set Working Directory > Choose Directory…. Be sure to create a new R script file (.R) or update an existing R script file so that you can save your script and annotations. If you need refreshers on how to set your working directory and how to create and save an R script, please refer to Setting a Working Directory and Creating & Saving an R Script.

Next, read in the .csv data file called “lgm.csv” using your choice of read function. In this example, I use the read_csv function from the readr package (Wickham, Hester, and Bryan 2024). If you choose to use the read_csv function, be sure that you have installed and accessed the readr package using the install.packages and library functions. Note: You don’t need to install a package every time you wish to access it; in general, I would recommend updating a package installation once every 1-3 months. For refreshers on installing packages and reading data into R, please refer to Packages and Reading Data into R.

```r
# Install readr package if you haven't already
# [Note: You don't need to install a package every
# time you wish to access it]
install.packages("readr")
```

```r
# Access readr package
library(readr)

# Read data and name data frame (tibble) object
df <- read_csv("lgm.csv")
```

```
## Rows: 650 Columns: 7
## ── Column specification ────────────────────────────────────────────────
## Delimiter: ","
## chr (1): EmployeeID
## dbl (6): RC_1m, RC_3m, RC_6m, RC_9m, ProPers_d1, JobPerf_12m
##
## ℹ Use `spec()` to retrieve the full column specification for this data.
## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.
```

```r
# Print variable names
names(df)
```

```
## [1] "EmployeeID"  "RC_1m"       "RC_3m"       "RC_6m"       "RC_9m"       "ProPers_d1"  "JobPerf_12m"
```

```r
# Print number of rows
nrow(df)
```

```
## [1] 650
```

```r
# Print first 6 rows
head(df)
```

```
## # A tibble: 6 × 7
##   EmployeeID RC_1m RC_3m RC_6m RC_9m ProPers_d1 JobPerf_12m
##   <chr>      <dbl> <dbl> <dbl> <dbl>      <dbl>       <dbl>
## 1 EE1001      13.0  18.1  31.2  42.4       50.6        47.8
## 2 EE1002      39.5  58.6  68.4  71.8       62.8        55.0
## 3 EE1003      41.3  45.6  66.4  79.3       43.8        61.7
## 4 EE1004      38.5  65.2  47.8  64.4       47.8        33.3
## 5 EE1005      34.2  37.7  57.1  72.7       64.8        46.9
## 6 EE1006      42.3  54.9  74.3  96.0      100          71.0
```

The data frame includes data from identical new employee onboarding surveys administered 1 month, 3 months, 6 months, and 9 months after employees’ respective start dates. The sample includes 650 employees. The data frame includes a unique identifier variable called EmployeeID, such that each employee has their own unique ID. For each survey, new employees responded to a 15-item role clarity measure using a 100-point (1 = strongly disagree and 100 = strongly agree) response format, where higher scores indicate higher role clarity. A composite variable based on employees’ average scores across the 15 items has already been created for each measurement occasion. The data frame also includes employees’ responses to a 10-item proactive personality measure, which was administered on their respective start dates. The proactive personality measure was also assessed using a 100-point (1 = strongly disagree and 100 = strongly agree) response format, where higher scores indicate higher proactive personality. A composite variable has already been created based on new employees’ average responses to the 10 items. Employees’ direct supervisors rated their job performance along eight dimensions at 12 months after their respective start dates using a 100-point (1 = does not meet expectations and 100 = exceeds expectations) response format. A composite variable has already been created based on supervisors’ ratings on the eight performance dimensions. The three data sources have been joined/merged for you.

59.2.4 Visualizing Change

Prior to estimating a latent growth model (LGM), we will visualize the role clarity trajectories of our sample of 650 new employees. To do so, we need to begin by creating a second data frame in which the data are restructured from wide-to-long format. For more information on manipulating data from wide-to-long format, please see the chapter on that topic.

To restructure the data and to ultimately create our data visualization, we will use the functions from the tidyr, dplyr, and ggplot2 packages, so let’s begin by installing and accessing those packages (if you haven’t already).
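
The package step mentioned above might look like the following sketch. It assumes the three packages come from CRAN; the installation line is commented out so that it is only run when needed, and the object names `pkgs` and `installed` are illustrative. Packages that are not yet installed are reported rather than loaded.

```r
# Packages used for restructuring and visualizing the data
pkgs <- c("tidyr", "dplyr", "ggplot2")

# Install the packages if you haven't already (uncomment to run)
# install.packages(pkgs)

# Check which packages are available, then access the ones that are
installed <- vapply(pkgs, requireNamespace, logical(1), quietly = TRUE)
for (pkg in pkgs[installed]) {
  library(pkg, character.only = TRUE)
}

# Report any packages that still need to be installed
if (!all(installed)) {
  message("Please install: ", paste(pkgs[!installed], collapse = ", "))
}
```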

We will begin by restructuring the data from wide-to-long format by doing the following.

- Create a name for a new data frame object to which we will eventually assign a long-format data frame object; here, I name the new data frame object `df_long`.
- Use the `<-` operator to assign the new long-form data frame object to the object named `df_long` in the step above.
- Type the name of the original data frame object (`df`), followed by the pipe (`%>%`) operator.
- Type the name of the `pivot_longer` function from the `tidyr` package.

  - As the first argument in the `pivot_longer` function, type `cols=` followed by the `c` (combine) function. As the arguments within the `c` function, list the names of the variables that you wish to pivot from separate variables (wide) to levels or categories of a new variable, effectively stacking them vertically. In this example, let’s list the names of role clarity variables from the four measurement occasions: `RC_1m`, `RC_3m`, `RC_6m`, and `RC_9m`.
  - As the second argument in the `pivot_longer` function, type `names_to=` followed by what you would like to name the new stacked variable (see previous) created from the four survey measure variables. Let’s call the new variable containing the names of the role clarity variables from the four measurement occasions as follows: “Time”.
  - As the third argument in the `pivot_longer` function, type `values_to=` followed by what you would like to name the new variable that contains the scores for the four role clarity variables that are now stacked vertically for each case. Let’s call the new variable containing the scores from the four variables the following: “RoleClarity”.

    # Apply pivot_longer function to restructure data to long format (using pipe)
    df_long <- df %>%
      pivot_longer(cols=c(RC_1m, RC_3m, RC_6m, RC_9m),
                   names_to="Time",
                   values_to="RoleClarity")

    # Print first 8 rows of new data frame
    head(df_long, n=8)

    ## # A tibble: 8 × 5
    ##   EmployeeID ProPers_d1 JobPerf_12m Time  RoleClarity
    ##   <chr>           <dbl>       <dbl> <chr>       <dbl>
    ## 1 EE1001           50.6        47.8 RC_1m        13.0
    ## 2 EE1001           50.6        47.8 RC_3m        18.1
    ## 3 EE1001           50.6        47.8 RC_6m        31.2
    ## 4 EE1001           50.6        47.8 RC_9m        42.4
    ## 5 EE1002           62.8        55.0 RC_1m        39.5
    ## 6 EE1002           62.8        55.0 RC_3m        58.6
    ## 7 EE1002           62.8        55.0 RC_6m        68.4
    ## 8 EE1002           62.8        55.0 RC_9m        71.8

Using the long-format df_long data frame object as input, we will create a line chart, where each line represents an individual employee’s change in role clarity from 1 month to 9 months. We will create the line chart by doing the following operations.

- Type the name of the long-format data frame object (df_long), followed by the pipe (%>%) operator.
- To recode the Time variable from character to numeric, type the name of the mutate function from the dplyr package.
  - As the sole parenthetical argument, begin by typing the name of the existing Time variable followed by = so that we can overwrite the existing variable.
  - Type the name of the case_match function from the dplyr package. As the first argument, type the name of the Time variable we wish to recode. As the second argument, type the name of the first character level for the Time variable in quotation marks followed by the ~ operator and the numeral 1, which will signify one month ("RC_1m" ~ 1). As the third argument, type the name of the second character level for the Time variable in quotation marks followed by the ~ operator and the numeral 3, which will signify three months ("RC_3m" ~ 3). As the fourth argument, type the name of the third character level for the Time variable in quotation marks followed by the ~ operator and the numeral 6, which will signify six months ("RC_6m" ~ 6). As the fifth argument, type the name of the fourth character level for the Time variable in quotation marks followed by the ~ operator and the numeral 9, which will signify nine months ("RC_9m" ~ 9).
  - After the closing parentheses of the case_match and mutate functions, insert the pipe (%>%) operator.
- To declare the aesthetics for the plot, type the name of the ggplot function from the ggplot2 package.
  - As the sole argument in the ggplot function, type the name of the aes function. As the first argument within the aes function, type x= followed by the name of the x-axis variable, which is Time in this example. As the second argument, type y= followed by the name of the y-axis variable, which is RoleClarity in this example. As the third and final argument, type group= followed by the name of the grouping variable, which is the unique identifier variable for employees called EmployeeID (given that each employee now has four rows of data in the long-format data frame object).
  - After the ending parenthesis of the ggplot function, type the + operator.
- To request a line chart, type the name of the geom_line function.
  - We will leave the geom_line function parentheses empty.
  - After the ending parenthesis of the geom_line function, type the + operator.
- To add/change axis labels, type the name of the labs function.
  - As the first argument in the labs function, type x= followed by what we would like to label the x-axis. Let’s label the x-axis “Time”.
  - As the second argument in the labs function, type y= followed by what we would like to label the y-axis. Let’s label the y-axis “Role Clarity”.
  - After the ending parenthesis of the labs function, type the + operator.
- To change the scaling of the x-axis to reflect the unequal interval between 1 month (1) and 3 months (3) as compared to the intervals between 3 months (3) and 6 months (6) and between 6 months (6) and 9 months (9), type the name of the scale_x_continuous function.
  - As the first argument within the scale_x_continuous function, type breaks= followed by where the x-axis labels should appear along the axis, which for this example will correspond to months 1, 3, 6, and 9. We’ll use the c function to create a vector of those breaks: breaks=c(1, 3, 6, 9).
  - As the second argument, type labels= followed by the c function. Within the c function parentheses, create a vector of four descriptive names for the measurement occasions. In this example, I chose: “1 month”, “3 months”, “6 months”, and “9 months”: labels=c("1 month", "3 months", "6 months", "9 months").
  - After the ending parenthesis of the scale_x_continuous function, type the + operator.
- Type the name of the theme_classic function, and leave the function parentheses empty. This will apply a simple theme to the line chart, which includes removing background gridlines.

    # Create a line chart representing employees' change trajectories
    df_long %>%
      # Recode the Time character levels to numeric values
      mutate(Time = case_match(Time,
                               "RC_1m" ~ 1,
                               "RC_3m" ~ 3,
                               "RC_6m" ~ 6,
                               "RC_9m" ~ 9)) %>%
      # Declare aesthetics for plot
      ggplot(aes(x=Time,
                 y=RoleClarity,
                 group=EmployeeID)) +
      # Create line chart
      geom_line() +
      # Create x- and y-axis labels
      labs(x="Time",
           y="Role Clarity") +
      # Specify the breaks and labels for the x-axis
      scale_x_continuous(breaks=c(1, 3, 6, 9),
                         labels=c("1 month", "3 months", "6 months", "9 months")) +
      # Request classic, minimal look without gridlines
      theme_classic()

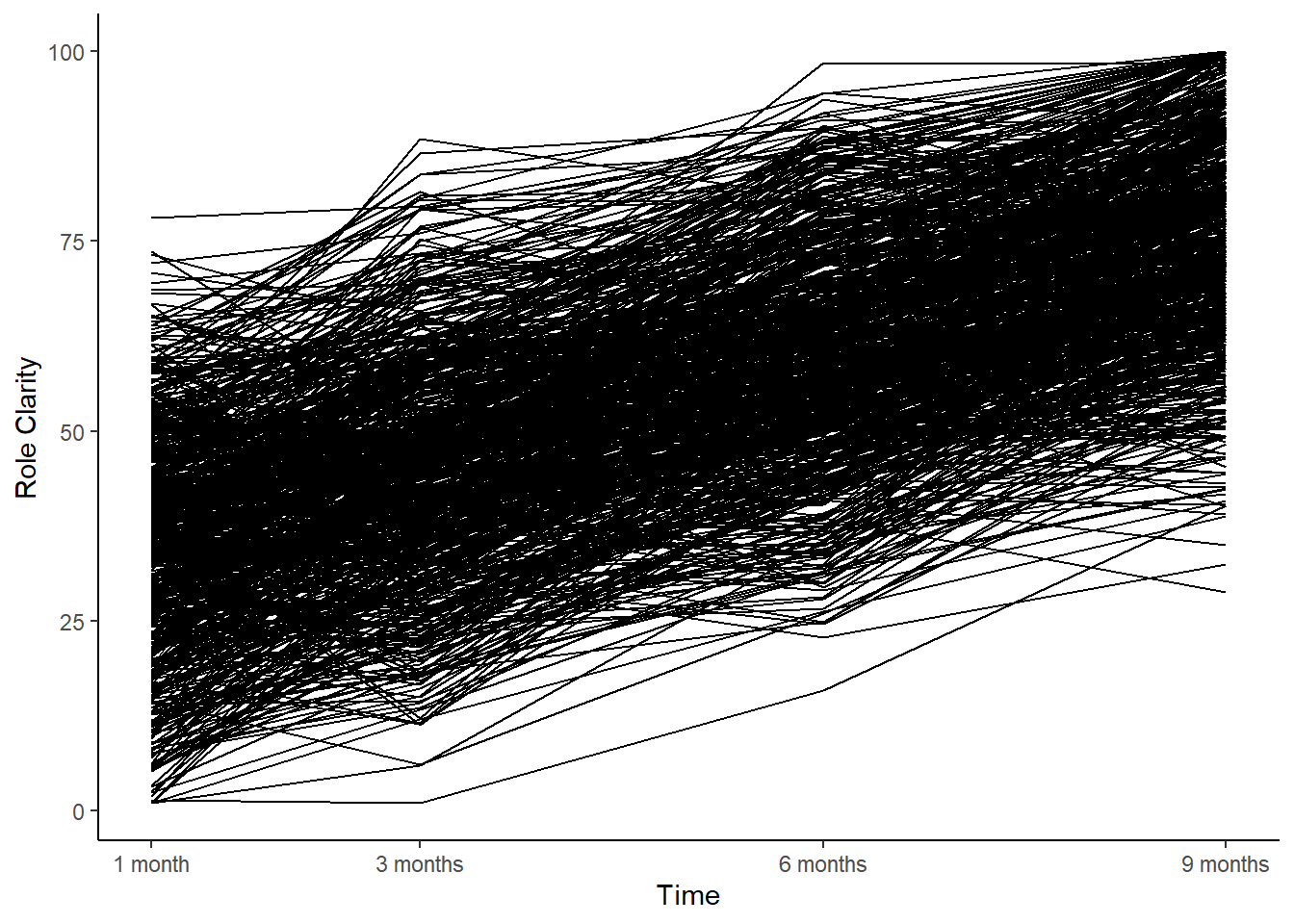

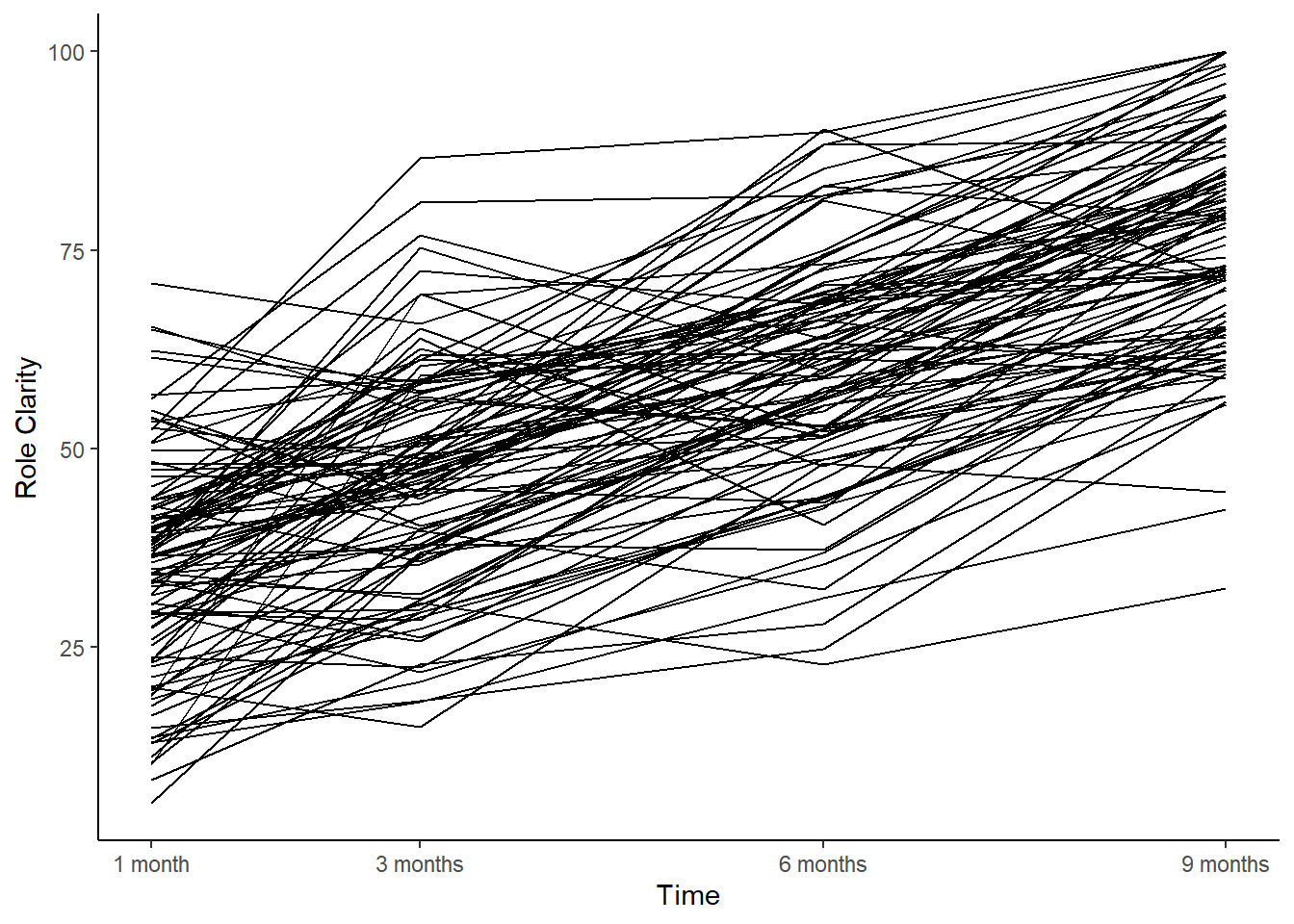

In the line chart, take note of the general upward linear trend across the 650 employees; visually, it seems clear that there is likely a linear functional form and that, on average, employees’ levels of role clarity tended to increase from 1 month to 9 months.

To view a reduced set of employees, which may give us a clearer depiction of between-employee variation, we will use the slice function from the dplyr package. We will adapt the previous data visualization code by inserting the slice function after piping in the df_long data frame object. Because each employee has four rows of data in the long-format df_long data frame object, retaining the first 400 rows will represent 100 employees’ role clarity data. As the sole parenthetical argument in the slice function, let’s reference the first 400 rows of data by typing 1:400.

    # Create a line chart representing a *subset* of employees' change trajectories
    df_long %>%
      # Recode the Time character levels to numeric values
      mutate(Time = case_match(Time,
                               "RC_1m" ~ 1,
                               "RC_3m" ~ 3,
                               "RC_6m" ~ 6,
                               "RC_9m" ~ 9)) %>%
      # Retain just the first 400 rows of data
      slice(1:400) %>%
      # Declare aesthetics for plot
      ggplot(aes(x=Time,
                 y=RoleClarity,
                 group=EmployeeID)) +
      # Create line chart
      geom_line() +
      # Create x- and y-axis labels
      labs(x="Time",
           y="Role Clarity") +
      # Specify the breaks and labels for the x-axis
      scale_x_continuous(breaks=c(1, 3, 6, 9),
                         labels=c("1 month", "3 months", "6 months", "9 months")) +
      # Request classic, minimal look without gridlines
      theme_classic()

The “thinned out” line chart better captures the between-employee variation in role clarity trajectories but still suggests a positive linear functional form.

59.2.5 Estimate Unconditional Unconstrained Latent Growth Model

We will begin by estimating what is referred to as an unconditional unconstrained latent growth model (LGM). An unconditional model is not conditional on an exogenous variable or variables (e.g., predictor variables, covariates). In other words, an unconditional model just includes the intercept and slope latent factors along with their respective indicators (i.e., observed repeated-measures variables). An unconstrained model lacks any constraints placed on intercept and slope means and variances and on (residual) error variances. With so many freely estimated parameters, unconstrained models tend to be the best fitting models. Finally, please note that we will be working with our data frame object that is in the original wide format: df.
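For readers who prefer equations, one common way to write a linear unconditional LGM is sketched below. The notation is an assumption introduced here for illustration (it is not taken from this chapter's sources): \(y_{ti}\) is employee \(i\)'s observed score at measurement occasion \(t\), \(\eta_{0i}\) and \(\eta_{1i}\) are that employee's intercept and slope, \(\lambda_{t}\) is the fixed slope factor loading (time code) for occasion \(t\), and \(\varepsilon_{ti}\) is the (residual) error.

```latex
\begin{aligned}
y_{ti}     &= \eta_{0i} + \lambda_{t}\,\eta_{1i} + \varepsilon_{ti} \\
\eta_{0i}  &= \mu_{0} + \zeta_{0i} \\
\eta_{1i}  &= \mu_{1} + \zeta_{1i}
\end{aligned}
```

In this notation, \(\mu_{0}\) and \(\mu_{1}\) are the intercept and slope factor means, and the deviations \(\zeta_{0i}\) and \(\zeta_{1i}\) carry the between-employee intercept and slope variances and their covariance. In an unconstrained model, these means, variances, and the covariance, along with a separate error variance for each occasion, are all freely estimated.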

Because LGM is a specific application of structural equation modeling (SEM), we will use functions from an R package developed for SEM called lavaan (latent variable analysis) to estimate our LGMs. Let’s begin by installing and accessing the lavaan package (if you haven’t already).

First, we must specify the LGM and assign it to an object that we can subsequently reference. To do so, we will do the following.

- Specify a name for the model object (e.g., lgm_mod), followed by the <- assignment operator.
- To the right of the <- assignment operator and within quotation marks (" "):
  - Specify a name for the intercept latent factor (e.g., i_RC), followed by the =~ operator, which is used to indicate how a latent factor is measured. Anything that comes to the right of the =~ operator is an observed variable (i.e., indicator) of the latent factor. Please note that the latent factor is not something that we directly observe, so it will not have a corresponding variable in our data frame object.
    - After the =~ operator, specify each observed variable associated with the intercept latent factor, and to separate the observed variables, insert the + operator. In this example, the four observed variables are: RC_1m, RC_3m, RC_6m, and RC_9m. These are our observed variables, which conceptually are influenced by the underlying latent factor.
    - To set the i_RC latent factor as the intercept, we also need to constrain each observed variable’s factor loading to 1 by preceding each observed variable by 1*. The end result should look like this: i_RC =~ 1*RC_1m + 1*RC_3m + 1*RC_6m + 1*RC_9m.
  - Specify a name for the slope latent factor (e.g., s_RC), followed by the =~ operator, which is used to indicate how a latent factor is measured.
    - After the =~ operator, specify each observed variable associated with the slope latent factor, which will be the same observed variables that serve as indicators for the intercept latent factor. To separate the observed variables, insert the + operator.
    - To set the s_RC latent factor as the slope, we also need to constrain each observed variable’s factor loading to account for the time intervals between measurement occasions, which is also known as time coding. Because role clarity was measured at 1 month, 3 months, 6 months, and 9 months, we will subtract 1 from each month, which recodes the 1-month measurement occasion as 0 and thereby sets it as the occasion at which the intercept is defined; conceptually, the intercept represents the level of role clarity where the trajectory crosses time equal to 0. Subtracting 1 from each measurement occasion in this example also allows us to account for the unequal time intervals associated with the measurement occasions. The end result should look like this: s_RC =~ 0*RC_1m + 2*RC_3m + 5*RC_6m + 8*RC_9m.
    - Note: We could have specified a different measurement occasion slope factor loading as 0 by simply changing the time coding; for example, if we were to set the last measurement occasion slope factor loading as 0, our time coding would be: s_RC =~ -8*RC_1m + -6*RC_3m + -3*RC_6m + 0*RC_9m. Finally, if the time intervals between measurement occasions had been equal, we would have been able to apply a 0, 1, 2, and 3 time coding for the slope factor loadings to set the intercept at the first measurement occasion, or -3, -2, -1, and 0 to set the intercept at the last measurement occasion.
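The time coding described above can be checked with a few lines of base R. This is only a sketch: the object names and the make_slope_syntax helper are hypothetical and are not part of lavaan. It subtracts the chosen reference month from each measurement occasion and pastes the resulting loadings into a slope specification string.

```r
# Measurement occasions in months after the start date (from the study design)
months <- c(RC_1m = 1, RC_3m = 3, RC_6m = 6, RC_9m = 9)

# Time codes with the intercept set at the first occasion (1 month)
loadings_first <- months - months[["RC_1m"]]

# Time codes with the intercept set at the last occasion (9 months)
loadings_last <- months - months[["RC_9m"]]

# Hypothetical helper: paste fixed loadings into a slope factor specification
make_slope_syntax <- function(loadings, factor_name = "s_RC") {
  terms <- paste0(loadings, "*", names(loadings))
  paste0(factor_name, " =~ ", paste(terms, collapse = " + "))
}

print(make_slope_syntax(loadings_first))
print(make_slope_syntax(loadings_last))
```

The two printed strings should match the slope specifications given in the steps above, which makes it easy to confirm that unequal intervals (2, 3, and 3 months) are preserved in the loadings.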

    # Specify unconditional unconstrained LGM & assign to object
    lgm_mod <- "
    # Specify and constrain intercept factor loadings
    i_RC =~ 1*RC_1m + 1*RC_3m + 1*RC_6m + 1*RC_9m

    # Specify and constrain slope factor loadings
    s_RC =~ 0*RC_1m + 2*RC_3m + 5*RC_6m + 8*RC_9m
    "

Second, now that we have specified the model object (lgm_mod), we are ready to estimate the model using the growth function from the lavaan package. To do so, we will do the following.

- Specify a name for the fitted model object (e.g., lgm_fit), followed by the <- assignment operator.
- To the right of the <- assignment operator, type the name of the growth function, and within the function parentheses include the following arguments.
  - As the first argument, insert the name of the model object that we specified above (lgm_mod).
  - As the second argument, insert the name of the data frame object to which the indicator variables in our model belong. That is, after data=, insert the name of the original wide-format data frame object (df).
  - Note: The growth function includes model estimation defaults, which explains why we had relatively few model specifications.

    # Estimate unconditional unconstrained LGM & assign to fitted model object
    lgm_fit <- growth(lgm_mod, # name of specified model object
                      data=df) # name of wide-format data frame object

Third, we will use the summary function from base R to print the model results. To do so, we will apply the following arguments in the summary function parentheses.

- As the first argument, specify the name of the fitted model object that we created above (lgm_fit).
- As the second argument, set fit.measures=TRUE to obtain the model fit indices (e.g., CFI, TLI, RMSEA, SRMR).
- As the third argument, set standardized=TRUE to request the standardized parameter estimates for the model.

    # Print summary of model results
    summary(lgm_fit,           # name of fitted model object
            fit.measures=TRUE, # request model fit indices
            standardized=TRUE) # request standardized estimates

    ## lavaan 0.6.15 ended normally after 96 iterations
    ##
    ##   Estimator                                         ML
    ##   Optimization method                           NLMINB
    ##   Number of model parameters                         9
    ##
    ##   Number of observations                           650
    ##
    ## Model Test User Model:
    ##
    ##   Test statistic                                 3.760
    ##   Degrees of freedom                                 5
    ##   P-value (Chi-square)                           0.584
    ##
    ## Model Test Baseline Model:
    ##
    ##   Test statistic                              1226.041
    ##   Degrees of freedom                                 6
    ##   P-value                                        0.000
    ##
    ## User Model versus Baseline Model:
    ##
    ##   Comparative Fit Index (CFI)                    1.000
    ##   Tucker-Lewis Index (TLI)                       1.001
    ##
    ## Loglikelihood and Information Criteria:
    ##
    ##   Loglikelihood user model (H0)             -10100.582
    ##   Loglikelihood unrestricted model (H1)     -10098.702
    ##
    ##   Akaike (AIC)                               20219.165
    ##   Bayesian (BIC)                             20259.458
    ##   Sample-size adjusted Bayesian (SABIC)      20230.883
    ##
    ## Root Mean Square Error of Approximation:
    ##
    ##   RMSEA                                          0.000
    ##   90 Percent confidence interval - lower         0.000
    ##   90 Percent confidence interval - upper         0.047
    ##   P-value H_0: RMSEA <= 0.050                    0.963
    ##   P-value H_0: RMSEA >= 0.080                    0.000
    ##
    ## Standardized Root Mean Square Residual:
    ##
    ##   SRMR                                           0.019
    ##
    ## Parameter Estimates:
    ##
    ##   Standard errors                             Standard
    ##   Information                                 Expected
    ##   Information saturated (h1) model          Structured
    ##
    ## Latent Variables:
    ##                     Estimate  Std.Err  z-value  P(>|z|)   Std.lv  Std.all
    ##   i_RC =~
    ##     RC_1m              1.000                              12.463    0.820
    ##     RC_3m              1.000                              12.463    0.834
    ##     RC_6m              1.000                              12.463    0.840
    ##     RC_9m              1.000                              12.463    0.855
    ##   s_RC =~
    ##     RC_1m              0.000                               0.000    0.000
    ##     RC_3m              2.000                               1.725    0.116
    ##     RC_6m              5.000                               4.314    0.291
    ##     RC_9m              8.000                               6.902    0.474
    ##
    ## Covariances:
    ##                     Estimate  Std.Err  z-value  P(>|z|)   Std.lv  Std.all
    ##   i_RC ~~
    ##     s_RC              -3.483    1.147   -3.036    0.002   -0.324   -0.324
    ##
    ## Intercepts:
    ##                     Estimate  Std.Err  z-value  P(>|z|)   Std.lv  Std.all
    ##    .RC_1m              0.000                               0.000    0.000
    ##    .RC_3m              0.000                               0.000    0.000
    ##    .RC_6m              0.000                               0.000    0.000
    ##    .RC_9m              0.000                               0.000    0.000
    ##     i_RC              35.143    0.559   62.824    0.000    2.820    2.820
    ##     s_RC               4.975    0.064   77.667    0.000    5.767    5.767
    ##
    ## Variances:
    ##                     Estimate  Std.Err  z-value  P(>|z|)   Std.lv  Std.all
    ##    .RC_1m             75.459    7.345   10.273    0.000   75.459    0.327
    ##    .RC_3m             78.779    5.811   13.557    0.000   78.779    0.353
    ##    .RC_6m             81.083    5.791   14.001    0.000   81.083    0.368
    ##    .RC_9m             65.214    7.998    8.154    0.000   65.214    0.307
    ##     i_RC             155.324   11.703   13.273    0.000    1.000    1.000
    ##     s_RC               0.744    0.211    3.520    0.000    1.000    1.000

Evaluating model fit. Now that we have the summary of our model results, we will begin by evaluating key pieces of the model fit information provided in the output.

- Estimator. The function defaulted to using the maximum likelihood (ML) model estimator. When there are deviations from multivariate normality or categorical variables, the function may switch to another estimator; alternatively, we can manually switch to another estimator using the estimator= argument in the growth function.

- Number of parameters. Nine parameters were estimated, which, as we will see later, correspond to the covariance between latent factors, latent factor means, latent factor variances, and (residual) error term variances of the observed variables (i.e., indicators).

- Number of observations. Our effective sample size is 650. Had there been missing data on the observed variables, this portion of the output would have indicated how many of the observations were retained for the analysis given the missing data. How missing data are handled during estimation will depend on the type of missing data approach we apply, which is covered in more detail in the section called Estimating Models with Missing Data. By default, the growth function applies listwise deletion in the presence of missing data.

- Chi-square test. The chi-square (\(\chi^{2}\)) test assesses whether the model fits the data adequately, where a statistically significant \(\chi^{2}\) value (e.g., p \(<\) .05) indicates that the model does not fit the data well and a nonsignificant chi-square value (e.g., p \(\ge\) .05) indicates that the model fits the data reasonably well. The null hypothesis for the \(\chi^{2}\) test is that the model fits the data perfectly, and thus failing to reject the null hypothesis provides some confidence that the model fits the data reasonably close to perfectly. Of note, the \(\chi^{2}\) test is sensitive to sample size and non-normal variable distributions. For this model, we find the \(\chi^{2}\) test in the output section labeled Model Test User Model. Because the p-value is equal to or greater than .05, we fail to reject the null hypothesis that the model fits the data perfectly and thus conclude that the model fits the data acceptably (\(\chi^{2}\) = 3.760, df = 5, p = .584). Finally, note that because the model’s degrees of freedom (i.e., 5) is greater than zero, we can conclude that the model is over-identified.

- Comparative fit index (CFI). As the name implies, the comparative fit index (CFI) is a type of comparative (or incremental) fit index, which means that CFI compares our estimated model to a baseline model, which is commonly referred to as the null or independence model. CFI is generally less sensitive to sample size than the chi-square (\(\chi^{2}\)) test. A CFI value greater than or equal to .95 generally indicates good model fit to the data, although some might relax that cutoff to .90. For this model, CFI is equal to 1.000, which indicates that the model fits the data acceptably.

- Tucker-Lewis index (TLI). Like CFI, Tucker-Lewis index (TLI) is another type of comparative (or incremental) fit index. TLI is generally less sensitive to sample size than the chi-square test; however, TLI may not be the best choice for smaller sample sizes (e.g., N \(<\) 250). A TLI value greater than or equal to .95 generally indicates good model fit to the data, although like CFI, some might relax that cutoff to .90. For this model, TLI is equal to 1.001, which indicates that the model fits the data acceptably.

- Loglikelihood and Information Criteria. The section labeled Loglikelihood and Information Criteria contains model fit indices that are not directly interpretable on their own (e.g., loglikelihood, AIC, BIC). Rather, they become more relevant when we wish to compare the fit of two or more non-nested models. Given that, we will ignore this section in this tutorial.

- Root mean square error of approximation (RMSEA). The root mean square error of approximation (RMSEA) is an absolute fit index that penalizes model complexity (e.g., models with a larger number of estimated parameters) and thus effectively rewards models that are more parsimonious. RMSEA values tend to be upwardly biased when the model degrees of freedom are fewer (i.e., when the model is closer to being just-identified); further, RMSEA may not be the best choice for smaller sample sizes (e.g., N \(<\) 250). In general, an RMSEA value that is less than or equal to .06 indicates good model fit to the data, although some relax that cutoff to .08 or even .10. For this model, RMSEA is .000, which indicates that the model fits the data acceptably.

- Standardized root mean square residual. Like the RMSEA, the standardized root mean square residual (SRMR) is an example of an absolute fit index. An SRMR value that is less than or equal to .06 generally indicates good fit to the data, although some relax that cutoff to .08. For this model, SRMR is equal to .019, which indicates that the model fits the data acceptably.
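As a rough check on the fit information above, the following base R sketch recomputes the model degrees of freedom, the \(\chi^{2}\) p-value, CFI, TLI, and RMSEA from the values printed in the output. The formulas are common textbook definitions and are an assumption on my part about what is being reported; lavaan’s internal computations may differ in small details (e.g., the sample size term used for RMSEA), so this is an illustration rather than lavaan’s implementation. All object names are hypothetical.

```r
# Values copied from the summary output above
chisq_model <- 3.760     # user model test statistic
chisq_base  <- 1226.041  # baseline model test statistic
df_base     <- 6         # baseline model degrees of freedom
n           <- 650       # number of observations
n_obs_vars  <- 4         # RC_1m, RC_3m, RC_6m, RC_9m
n_params    <- 9         # number of freely estimated parameters

# Degrees of freedom: unique variances/covariances plus means, minus parameters
n_moments <- n_obs_vars * (n_obs_vars + 1) / 2 + n_obs_vars
df_model  <- n_moments - n_params

# Chi-square test p-value
p_value <- pchisq(chisq_model, df = df_model, lower.tail = FALSE)

# Comparative fit index (CFI)
cfi <- 1 - max(chisq_model - df_model, 0) /
  max(chisq_model - df_model, chisq_base - df_base, 0)

# Tucker-Lewis index (TLI); can exceed 1 when chi-square is smaller than its df
tli <- ((chisq_base / df_base) - (chisq_model / df_model)) /
  ((chisq_base / df_base) - 1)

# RMSEA (one common form; equals 0 whenever chi-square <= df)
rmsea <- sqrt(max(chisq_model - df_model, 0) / (df_model * n))

print(round(c(df = df_model, p = p_value, CFI = cfi, TLI = tli, RMSEA = rmsea), 3))
```

Because the model \(\chi^{2}\) (3.760) is smaller than its degrees of freedom (5), CFI is capped at 1, RMSEA is floored at 0, and TLI lands slightly above 1, which is why the output reports a TLI of 1.001.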

In sum, the chi-square (\(\chi^{2}\)) test, CFI, TLI, RMSEA, and SRMR model fit indices all indicate that our model fit the data acceptably based on conventional rules of thumb and thresholds. This level of agreement, however, is not always going to occur. For instance, it is relatively common for the \(\chi^{2}\) test to indicate a lack of acceptable fit while one or more of the relative or absolute fit indices indicates that fit is acceptable given the limitations of the \(\chi^{2}\) test. Further, there may be instances where only two or three out of five of these model fit indices indicate acceptable model fit. In such instances, we should not necessarily toss out the model entirely, but we should consider whether there are model misspecifications. Of course, if all five model indices are well beyond the conventional thresholds (in a bad way), then our model may have some major misspecification issues, and we should proceed thoughtfully when interpreting the parameter estimates. With all that said, with an LGM, the lack of model fit indicates that there is a large amount of residual error around estimated slopes, and importantly, a lack of model fit does not necessarily indicate that there is a lack of linear (or nonlinear) change. For our model, all five model fit indices signal that the model fit the data acceptably, and thus we should feel confident proceeding forward with interpreting and evaluating the parameter estimates.

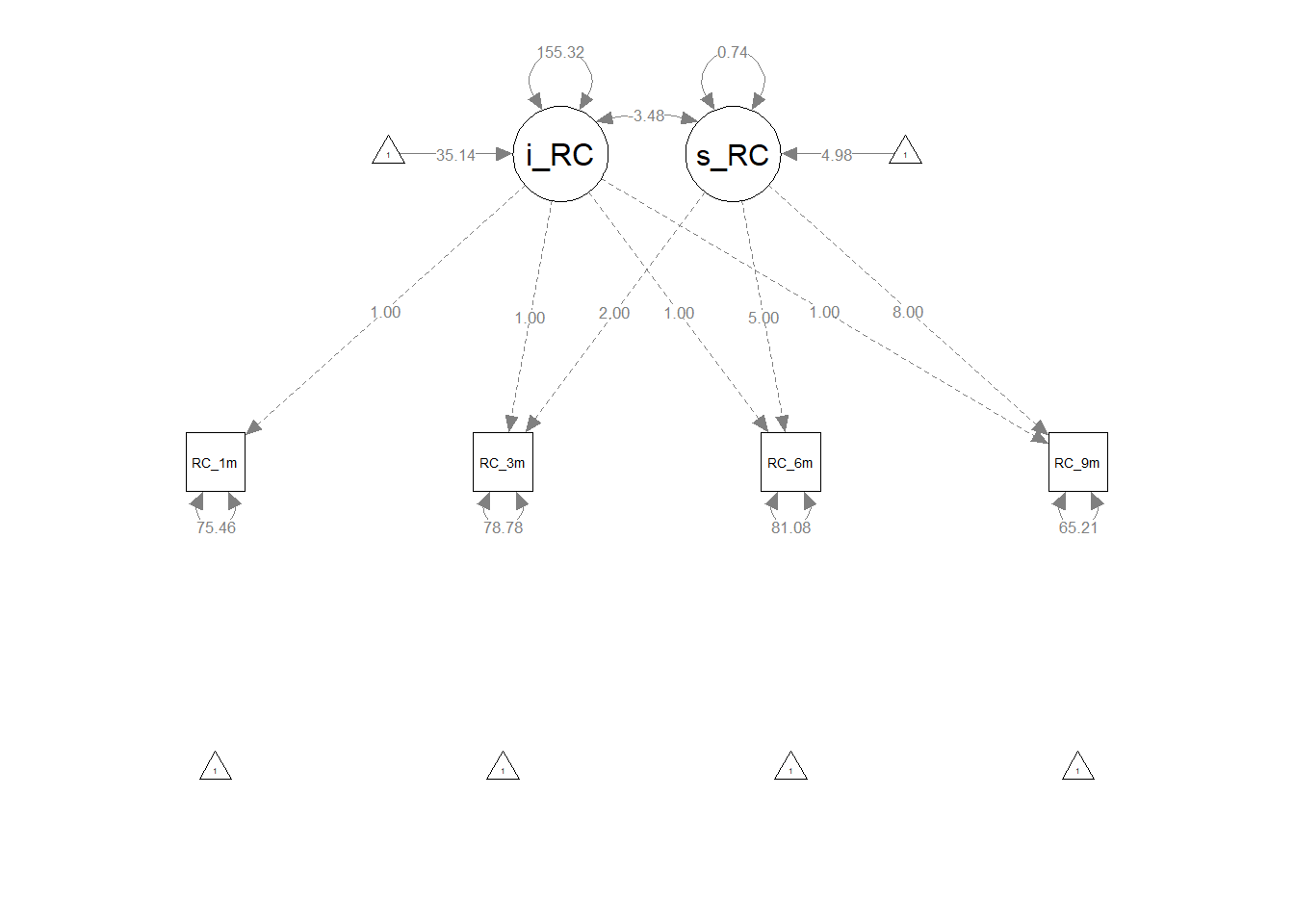

Evaluating parameter estimates. As noted above, our model showed acceptable fit to the data, so we can feel comfortable interpreting the parameter estimates. By default, the growth function provides unstandardized parameter estimates, but if you recall, we also requested standardized parameter estimates. In the output, the unstandardized parameter estimates fall under the column titled Estimate, whereas the standardized parameter estimates we’re interested in fall under the column titled Std.all.

- Factor loadings. The output section labeled Latent Variables contains our factor loadings for the intercept and slope latent factors.

  - With respect to the unstandardized factor loadings for the intercept latent factor (i_RC; see Estimate column), the constraints we applied are reported. Specifically, we constrained each of the intercept factor loadings to 1.
  - With respect to the unstandardized factor loadings for the slope latent factor (s_RC; see Estimate column), the constraints we applied are reported. Specifically, we constrained slope factor loadings to 0, 2, 5, and 8 to correspond with measurement occasions 1 month, 3 months, 6 months, and 9 months.

- Covariances. The output section labeled Covariances contains the covariance between the intercept and slope latent factors. The unstandardized estimate is -3.483 (p = .002), and the standardized estimate, which can be interpreted as a correlation, is -.324.

- Means & Intercepts. The output section labeled Intercepts contains the means for the latent factors and the intercepts for the observed variables. By default, the intercepts for the observed variables are constrained to zero, so those are not of substantive interest. The means for the intercept and slope latent factors are, however, of substantive interest. The unstandardized intercept latent factor mean of 35.143 (p < .001) indicates that, on average, new employees’ level of role clarity was 35.143 (out of a possible 100) 1 month after their respective start dates. The unstandardized slope latent factor mean of 4.975 (p < .001) indicates that, on average, new employees’ level of role clarity increased by 4.975 each month.

- Variances. The output section labeled Variances contains the (residual) error variances for each observed variable (i.e., indicator) of the latent factors and the variances of the latent factors. The unstandardized variance associated with the intercept latent factor indicates that there is a statistically significant amount of between-employee intercept variation (155.324, p < .001), and the unstandardized variance associated with the slope latent factor indicates that there is a statistically significant amount of between-employee slope variation (.744, p < .001). The standardized (residual) error variances associated with the four observed role clarity measurement occasions ranged from .307 to .368, indicating that a proportionally small amount of variance was left unexplained by the intercept and slope latent factors and that the error variances are similar in magnitude, potentially suggesting homogeneity of residual error variances.
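To make these estimates more concrete, the base R sketch below uses the unstandardized values from the output to compute the model-implied average role clarity at each measurement occasion, the intercept-slope correlation, and an approximate range of individual slopes. The object names are hypothetical, and the slope range assumes that individual slopes are roughly normally distributed, which is an assumption added here for illustration.

```r
# Unstandardized estimates copied from the summary output above
mean_i <- 35.143   # intercept factor mean
mean_s <- 4.975    # slope factor mean
var_i  <- 155.324  # intercept factor variance
var_s  <- 0.744    # slope factor variance
cov_is <- -3.483   # intercept-slope covariance

# Time codes used as slope factor loadings
time_codes <- c("1 month" = 0, "3 months" = 2, "6 months" = 5, "9 months" = 8)

# Model-implied average role clarity at each measurement occasion
implied_means <- mean_i + mean_s * time_codes
print(round(implied_means, 3))

# Covariance rescaled to a correlation (compare with the Std.all column)
cor_is <- cov_is / sqrt(var_i * var_s)
print(round(cor_is, 3))

# Approximate middle 95% of individual slopes, assuming normality
slope_range <- mean_s + c(-1.96, 1.96) * sqrt(var_s)
print(round(slope_range, 3))
```

The rescaled covariance should agree with the standardized estimate of -.324 reported under Covariances. The slope range also suggests that, although employees differ in their rate of change, nearly all are expected to show increasing role clarity over the 9 months.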

Visualize the path diagram. To visualize our unconditional unconstrained LGM as a path diagram, we can use the semPaths function from the semPlot package. If you haven’t already, please install and access the semPlot package.

While there are many arguments that can be used to refine the path diagram visualization, we will focus on just four to illustrate how the semPaths function works.

- As the first argument, insert the name of the fitted LGM object (lgm_fit).
- As the second argument, specify what="est" to display just the unstandardized parameter estimates.
- As the third argument, specify weighted=FALSE to request that the visualization not weight the edges (e.g., lines) and other plot features.
- As the fourth argument, specify nCharNodes=0 in order to use the full names of latent and observed indicator variables instead of abbreviating them.

    # Visualize the unconditional unconstrained LGM
    semPaths(lgm_fit,        # name of fitted model object
             what="est",     # display unstandardized parameter estimates
             weighted=FALSE, # do not weight plot features
             nCharNodes=0)   # do not abbreviate names

The resulting LGM path diagram can be useful for interpreting the model specifications and the parameter estimates.

Results write-up for the unconditional unconstrained LGM. As part of a new-employee onboarding longitudinal study, surveys were administered 1 month, 3 months, 6 months, and 9 months after employees’ respective start dates. Each survey included a 15-item measure of role clarity, and a composite variable based on the employees’ average responses was created at each survey measurement occasion. Using an unconditional unconstrained latent growth model (LGM), we investigated whether new employees’ levels of role clarity showed linear change over time and whether role-clarity change varied between new employees. When specifying the model, we constrained the intercept factor loadings to 1, and constrained the slope factor loadings to 0, 2, 5, and 8 to account for the unequal time intervals between adjacent measurement occasions. We estimated the model using the maximum likelihood (ML) estimator and a sample size of 650 new employees. Missing data were not a concern. We evaluated the model’s fit to the data using the chi-square (\(\chi^{2}\)) test, CFI, TLI, RMSEA, and SRMR model fit indices. The \(\chi^{2}\) test indicated that the model fit the data as well as a perfectly fitting model (\(\chi^{2}\) = 3.760, df = 5, p = .584). Further, the CFI and TLI estimates were 1.000 and 1.001, respectively, which exceeded the more stringent threshold of .95, thereby indicating that the model showed acceptable fit to the data. Similarly, the RMSEA estimate was .000, which was below the more stringent threshold of .06, thereby indicating that the model showed acceptable fit to the data. The SRMR estimate was .019, which was below the more stringent threshold of .06, thereby indicating acceptable model fit to the data. Collectively, the model fit information indicated that the model fit the data acceptably.

Regarding the unstandardized parameter estimates, the intercept latent factor mean of 35.143 (p < .001) indicated that, on average, new employees’ level of role clarity was 35.143 (out of a possible 100) 1 month after their respective start dates. The slope latent factor mean of 4.975 (p < .001) indicated that, on average, new employees’ level of role clarity increased by 4.975 each month. The variances associated with the intercept and slope latent factors, however, indicated that there was a statistically significant amount of between-employee intercept and slope variation (\(\sigma^{2}_{intercept}\) = 155.324, p < .001; \(\sigma^{2}_{slope}\) = .744, p < .001). The statistically significant and negative covariance between the intercept and slope latent factors indicated that employees with higher levels of role clarity 1 month after their respective start dates tended to have less positive role clarity change trajectories (\(\psi\) = -3.483, p = .002). Finally, the standardized (residual) error terms associated with the four observed role clarity measurement occasions ranged from .307 to .368, indicating that a proportionally small amount of variance was left unexplained by the intercept and slope latent factors. In sum, the estimated unconditional unconstrained LGM showed that new employees’ role clarity, on average, increased in a linear manner between 1 month and 9 months after employees’ respective start dates. Further, new employees varied with respect to their level of role clarity 1 month after their start dates, and their role clarity change trajectories varied in terms of slope. A conditional model with a time-invariant predictor or time-variant predictors may help explain between-employee differences in intercepts and slopes.

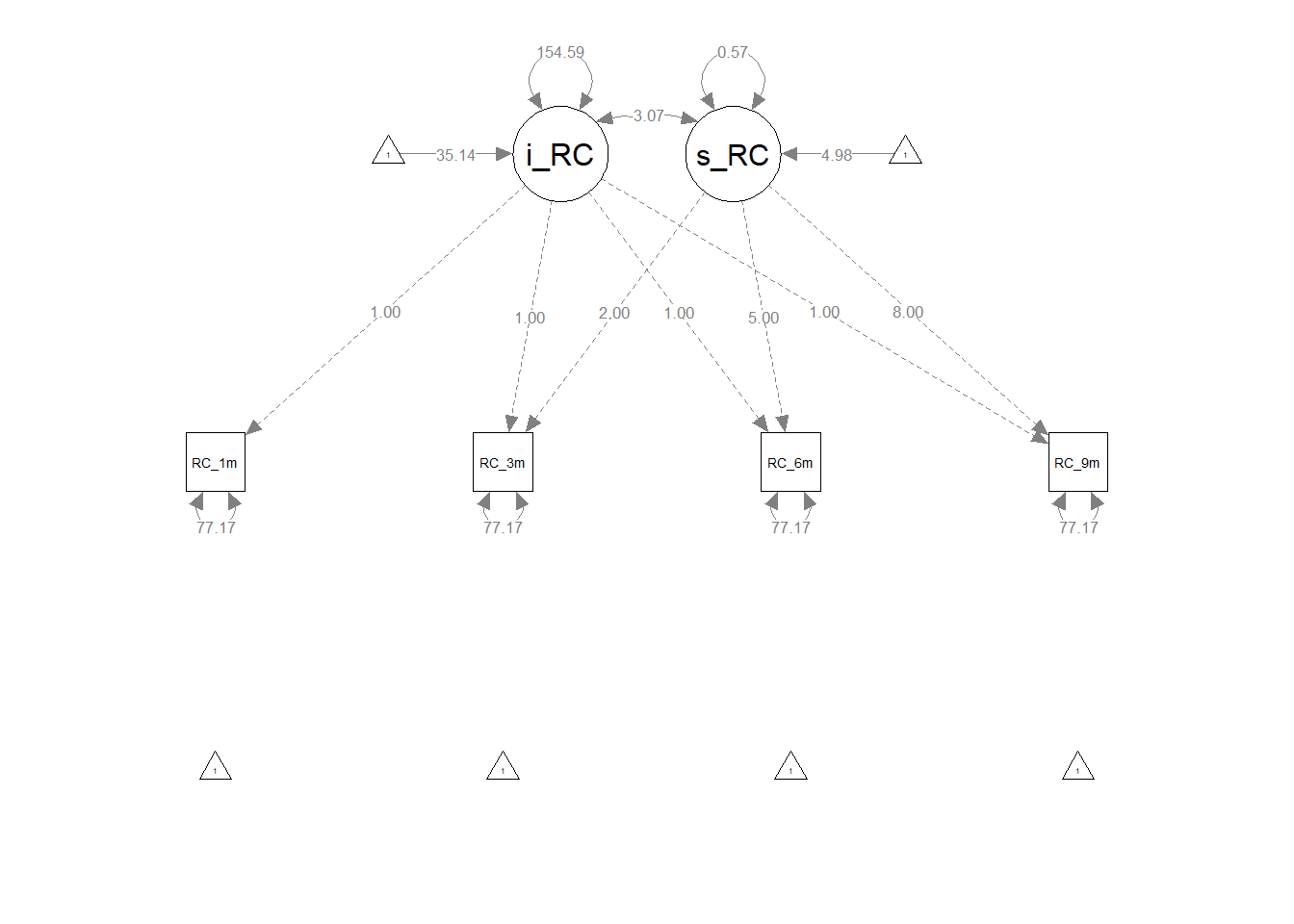

59.2.6 Nested Model Comparisons

When possible, we typically prefer to arrive at a model that not only fits the data but also is parsimonious in nature (i.e., less complex). With respect to LGM, there are a variety of circumstances in which we might wish to compare nested models to arrive at a well-fitting yet parsimonious model. A nested model has all the same parameters as a full model but has additional parameter constraints in place; a nested model will have more degrees of freedom (df) than the full model, thereby indicating that the nested model is less complex.
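One widely used way to compare a nested model to a full model under ML estimation is the chi-square difference (likelihood ratio) test. The expressions below are a sketch in generic notation that I am assuming for illustration; the subscripts are labels and are not tied to any specific model object in this chapter.

```latex
\Delta\chi^{2} = \chi^{2}_{nested} - \chi^{2}_{full},
\qquad
\Delta df = df_{nested} - df_{full}
```

The difference \(\Delta\chi^{2}\) is evaluated against a \(\chi^{2}\) distribution with \(\Delta df\) degrees of freedom. A nonsignificant difference suggests that the added constraints do not meaningfully worsen fit, which favors retaining the more parsimonious nested model; a significant difference favors the less constrained full model.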

In this section, we will build up to the unconditional unconstrained LGM from the previous section by gradually relaxing constraints. In general, our goal will be to retain the most parsimonious model that fits the data acceptably.

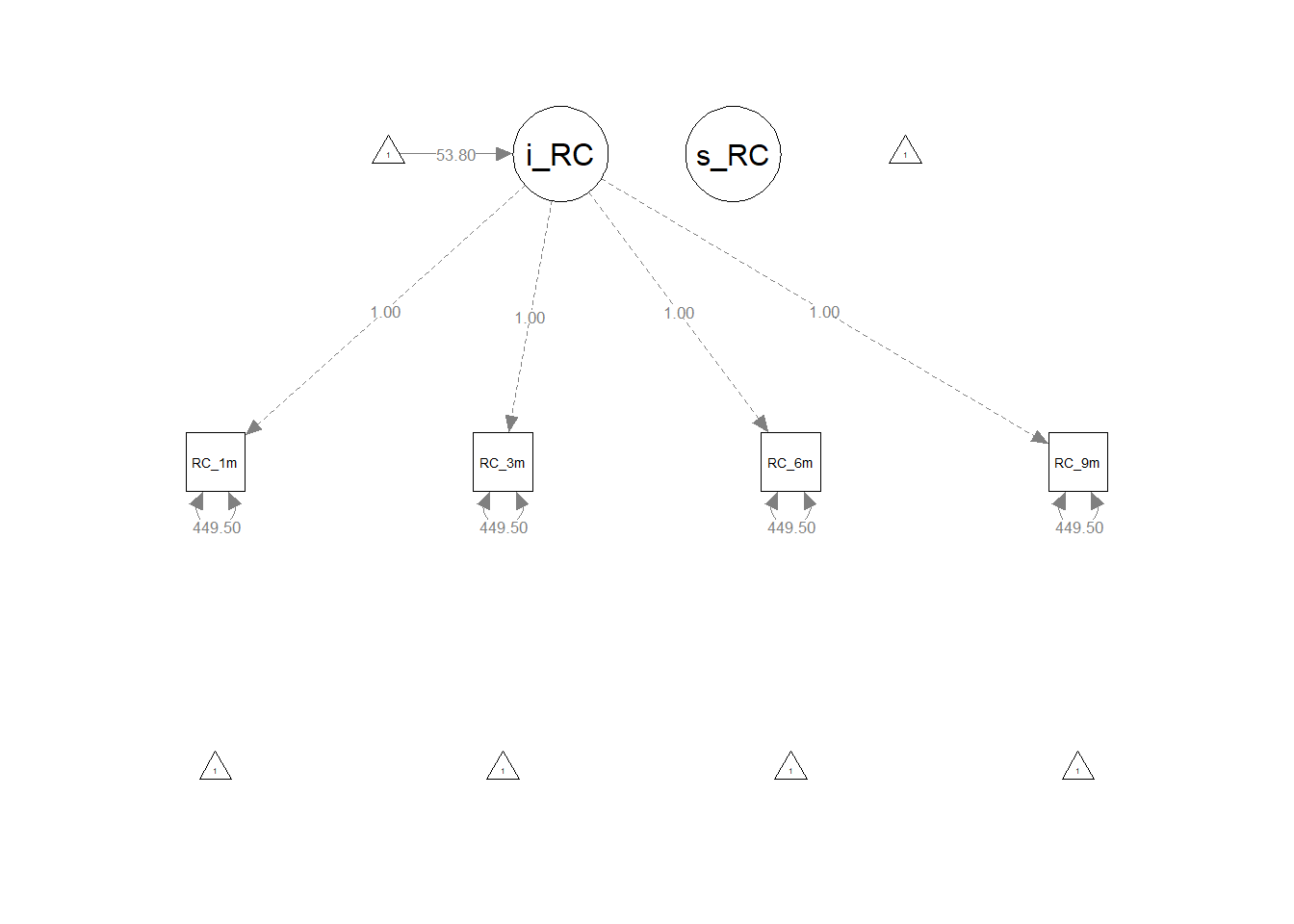

59.2.6.1 Estimate Baseline Model

We will begin by evaluating a very simple model that we’ll refer to as the baseline model. For this model, we will freely estimate the mean of the intercept latent factor, constrain the (residual) error variances to be equal, and constrain all remaining parameters from our unconditional unconstrained model (from the previous section) to be zero. In other words, we will evaluate an intercept-only model in which intercepts aren’t allowed to vary between employees and in which the residual error variances are constrained to be equal (i.e., homogeneity of variances). We will directly specify all necessary model parameters (even those that would be estimated by default), as in subsequent models we will be relaxing some of the constraints we put in place in this baseline model.

- Specify a name for the model object (e.g., lgm_mod1), followed by the <- assignment operator.
- To the right of the <- assignment operator and within quotation marks (" "):
  - Specify a name for the intercept latent factor (e.g., i_RC), followed by the =~ operator, which is used to indicate how a latent factor is measured.
    - After the =~ operator, specify each observed variable associated with the intercept latent factor, and to separate the observed variables, insert the + operator. In this example, the four observed variables are: RC_1m, RC_3m, RC_6m, and RC_9m. These are our observed variables, which conceptually are influenced by the underlying latent factor.
    - To set the i_RC latent factor as the intercept, we also need to constrain each observed variable’s factor loading to 1 by preceding each observed variable by 1*. The end result should look like this: i_RC =~ 1*RC_1m + 1*RC_3m + 1*RC_6m + 1*RC_9m.
  - Specify a name for the slope latent factor (e.g., s_RC), followed by the =~ operator, which is used to indicate how a latent factor is measured.
    - After the =~ operator, specify each observed variable associated with the slope latent factor, which will be the same observed variables that serve as indicators for the intercept latent factor.
    - Because we will not be freely estimating the slope in this baseline model, we will constrain all s_RC latent factor loadings to zero, which will look like this: s_RC =~ 0*RC_1m + 0*RC_3m + 0*RC_6m + 0*RC_9m.
  - Freely estimate the mean of the intercept factor by specifying the name of the latent factor (i_RC) followed by the ~ operator and 1. Specifying ~ 1 after a latent factor freely estimates the mean (or intercept) of that factor.
  - Because we are setting our slope to be zero in this model, we need to constrain the mean of the slope factor to zero by specifying the name of the latent factor (s_RC) followed by the ~ operator and 0*1. Specifying s_RC ~ 0*1 constrains the mean (or intercept) of the latent factor to zero.
  - Constrain the intercept latent factor variance to zero, which means we won’t allow intercepts to vary between employees. To do so, specify the variance of the latent factor (e.g., i_RC ~~ i_RC), except insert 0* to the right of the ~~ (variance) operator to constrain the variance to zero. The end result should look like this: i_RC ~~ 0*i_RC.
  - Constrain the slope latent factor variance to zero as well. To do so, specify the following: s_RC ~~ 0*s_RC.
  - Constrain the covariance between the intercept and slope latent factors to zero by specifying the following: i_RC ~~ 0*s_RC.
  - Constrain the (residual) error variances to be equal by first specifying the variance of each observed variable (e.g., RC_1m ~~ RC_1m) and then applying an equality constraint by inserting the same label (a letter or word), followed by *, immediately after the ~~ (variance) operator. I’ve chosen to use the letter e as the label. For example, applying the equality constraint to the first observed variable will look like this: RC_1m ~~ e*RC_1m.
- Specify a name for the fitted model object (e.g., lgm_fit1), followed by the <- assignment operator.
- To the right of the <- assignment operator, type the name of the growth function, and within the function parentheses include the following arguments.
  - As the first argument, insert the name of the model object that we specified above (lgm_mod1).
  - As the second argument, insert the name of the data frame object to which the indicator variables in our model belong. That is, after data=, insert the name of the original wide-format data frame object (df).
  - Note: The growth function includes model estimation defaults, which explains why we had relatively few model specifications.
- Specify the name of the summary function from base R.
  - As the first argument, specify the name of the fitted model object that we created above (lgm_fit1).
  - As the second argument, set fit.measures=TRUE to obtain the model fit indices (e.g., CFI, TLI, RMSEA, SRMR).
  - As the third argument, set standardized=TRUE to request the standardized parameter estimates for the model.
- Specify the name of the semPaths function.
  - As the first argument, insert the name of the fitted LGM object (lgm_fit1).
  - As the second argument, specify what="est" to display just the unstandardized parameter estimates.
  - As the third argument, specify weighted=FALSE to request that the visualization not weight the edges (e.g., lines) and other plot features.
  - As the fourth argument, specify nCharNodes=0 in order to use the full names of latent and observed indicator variables instead of abbreviating them.

# Specify baseline model & assign to object

lgm_mod1 <- "

# Specify and constrain intercept factor loadings

i_RC =~ 1*RC_1m + 1*RC_3m + 1*RC_6m + 1*RC_9m

# Specify and constrain slope factor loadings to zero (no growth)

s_RC =~ 0*RC_1m + 0*RC_3m + 0*RC_6m + 0*RC_9m

# Freely estimate mean of intercept latent factor

i_RC ~ 1

# Constrain mean of slope latent factor to zero (average slope set to zero)

s_RC ~ 0*1

# Constrain intercept latent factor variance to zero (don't allow to vary)

i_RC ~~ 0*i_RC

# Constrain slope latent factor variance to zero (don't allow to vary)

s_RC ~~ 0*s_RC

# Constrain covariance between intercept & slope latent factors to zero

i_RC ~~ 0*s_RC

# Constrain (residual) error variances to be equal (homogeneity of variances)

RC_1m ~~ e*RC_1m

RC_3m ~~ e*RC_3m

RC_6m ~~ e*RC_6m

RC_9m ~~ e*RC_9m

"

# Estimate LGM & assign to fitted model object

lgm_fit1 <- growth(lgm_mod1, # name of specified model object

data=df) # name of wide-format data frame object

# Print summary of model results

summary(lgm_fit1, # name of fitted model object

fit.measures=TRUE, # request model fit indices

standardized=TRUE) # request standardized estimates

## lavaan 0.6.15 ended normally after 17 iterations

##

## Estimator ML

## Optimization method NLMINB

## Number of model parameters 5

## Number of equality constraints 3

##

## Number of observations 650

##

## Model Test User Model:

##

## Test statistic 3062.245

## Degrees of freedom 12

## P-value (Chi-square) 0.000

##

## Model Test Baseline Model:

##

## Test statistic 1226.041

## Degrees of freedom 6

## P-value 0.000

##

## User Model versus Baseline Model:

##

## Comparative Fit Index (CFI) 0.000

## Tucker-Lewis Index (TLI) -0.250

##

## Loglikelihood and Information Criteria:

##

## Loglikelihood user model (H0) -11629.825

## Loglikelihood unrestricted model (H1) -10098.702

##

## Akaike (AIC) 23263.649

## Bayesian (BIC) 23272.603

## Sample-size adjusted Bayesian (SABIC) 23266.253

##

## Root Mean Square Error of Approximation:

##

## RMSEA 0.625

## 90 Percent confidence interval - lower 0.607

## 90 Percent confidence interval - upper 0.644

## P-value H_0: RMSEA <= 0.050 0.000

## P-value H_0: RMSEA >= 0.080 1.000

##

## Standardized Root Mean Square Residual:

##

## SRMR 0.877

##

## Parameter Estimates:

##

## Standard errors Standard

## Information Expected

## Information saturated (h1) model Structured

##

## Latent Variables:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## i_RC =~

## RC_1m 1.000 0.000 0.000

## RC_3m 1.000 0.000 0.000

## RC_6m 1.000 0.000 0.000

## RC_9m 1.000 0.000 0.000

## s_RC =~

## RC_1m 0.000 0.000 0.000

## RC_3m 0.000 0.000 0.000

## RC_6m 0.000 0.000 0.000

## RC_9m 0.000 0.000 0.000

##

## Covariances:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## i_RC ~~

## s_RC 0.000 NaN NaN

##

## Intercepts:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## i_RC 53.805 0.416 129.403 0.000 Inf Inf

## s_RC 0.000 NaN NaN

## .RC_1m 0.000 0.000 0.000

## .RC_3m 0.000 0.000 0.000

## .RC_6m 0.000 0.000 0.000

## .RC_9m 0.000 0.000 0.000

##

## Variances:

## Estimate Std.Err z-value P(>|z|) Std.lv Std.all

## i_RC 0.000 NaN NaN

## s_RC 0.000 NaN NaN

## .RC_1m (e) 449.503 12.467 36.056 0.000 449.503 1.000

## .RC_3m (e) 449.503 12.467 36.056 0.000 449.503 1.000

## .RC_6m (e) 449.503 12.467 36.056 0.000 449.503 1.000

## .RC_9m (e) 449.503 12.467 36.056 0.000 449.503 1.000

# Visualize the LGM as a path diagram

semPaths(lgm_fit1, # name of fitted model object

what="est", # display unstandardized parameter estimates

weighted=FALSE, # do not weight plot features

nCharNodes=0) # do not abbreviate names

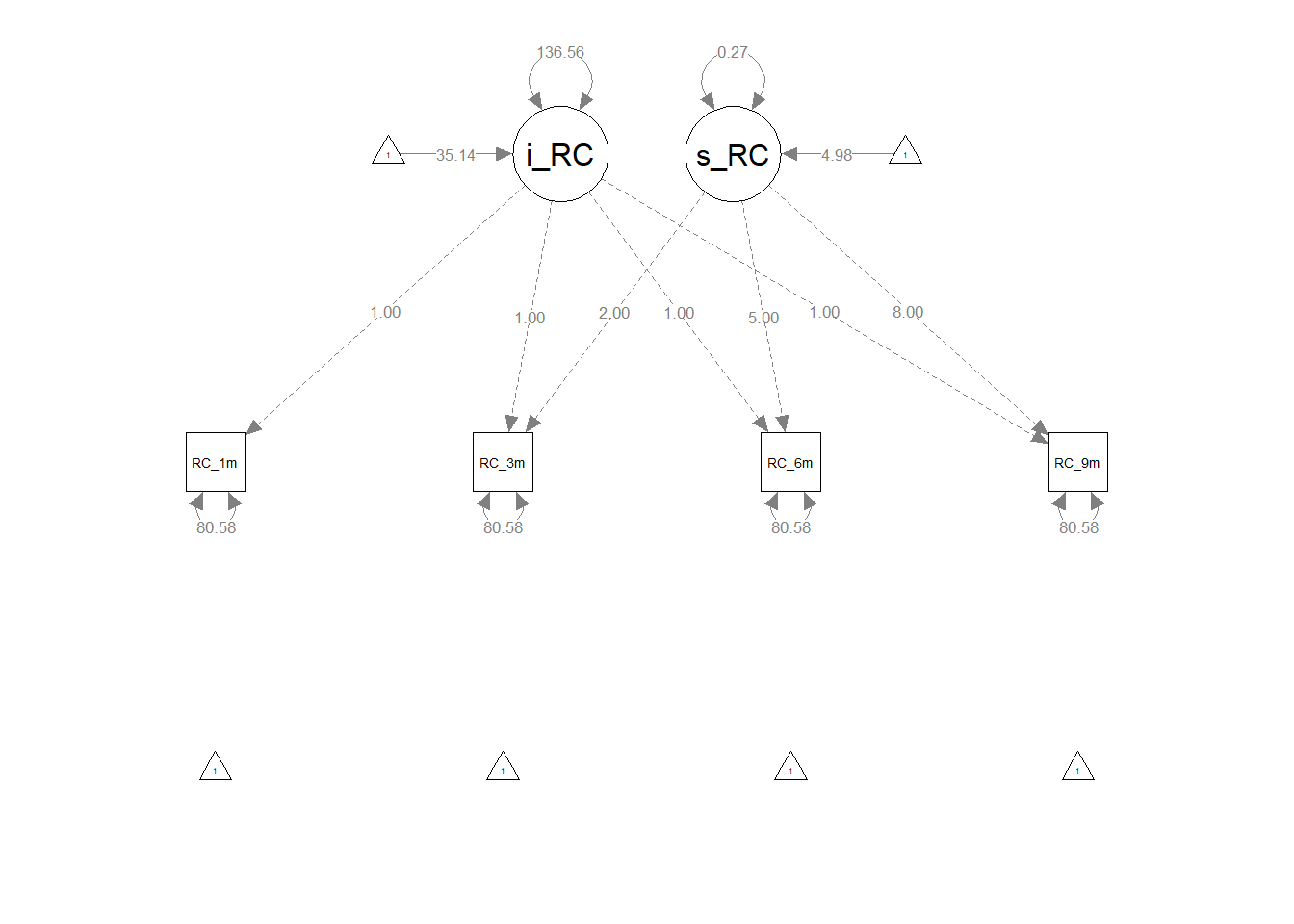

As we did with the unconditional unconstrained model in the previous section, we would typically begin by evaluating the model fit and, if the model fit appears acceptable, go on to evaluate the parameter estimates. To save space, however, we will just summarize the baseline model fit indices in the table below.

| Model | \(\chi^{2}\) | df | p | CFI | TLI | RMSEA | SRMR |
|---|---|---|---|---|---|---|---|
| Baseline Model | 3062.245 | 12 | < .001 | .000 | -.250 | .625 | .877 |

As you can see above, the baseline model fits the data very poorly, which means that relaxing some of the constraints should improve the model’s fit to the data.
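To see how these fit indices follow from the chi-square values, here is a minimal base R sketch that recomputes CFI, TLI, and RMSEA from the numbers printed in the lavaan summary. Note that lavaan's "Model Test Baseline Model" refers to the independence (null) model, not to the baseline LGM we specified. The formulas are the standard textbook definitions, and dividing by N rather than N - 1 in the RMSEA is an assumption made here because it reproduces the printed value; the object names are hypothetical.

```r
# Values copied from the lavaan summary of our baseline LGM
chisq_model <- 3062.245  # "Model Test User Model" test statistic
df_model    <- 12
chisq_null  <- 1226.041  # "Model Test Baseline Model" (independence model)
df_null     <- 6
n_obs       <- 650

# Noncentrality estimates, truncated at zero
ncp_model <- max(chisq_model - df_model, 0)
ncp_null  <- max(chisq_null - df_null, 0)

# Comparative Fit Index
cfi <- 1 - ncp_model / max(ncp_model, ncp_null)

# Tucker-Lewis Index (can fall below zero when the model fits worse than the null)
tli <- ((chisq_null / df_null) - (chisq_model / df_model)) /
  ((chisq_null / df_null) - 1)

# RMSEA (ASSUMPTION: N, not N - 1, in the denominator)
rmsea <- sqrt(ncp_model / (df_model * n_obs))

print(round(c(CFI = cfi, TLI = tli, RMSEA = rmsea), 3))
```

The CFI of zero and the negative TLI arise because our heavily constrained baseline LGM has a larger chi-square per degree of freedom than the independence model, which freely estimates a separate mean and variance for each measurement occasion.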

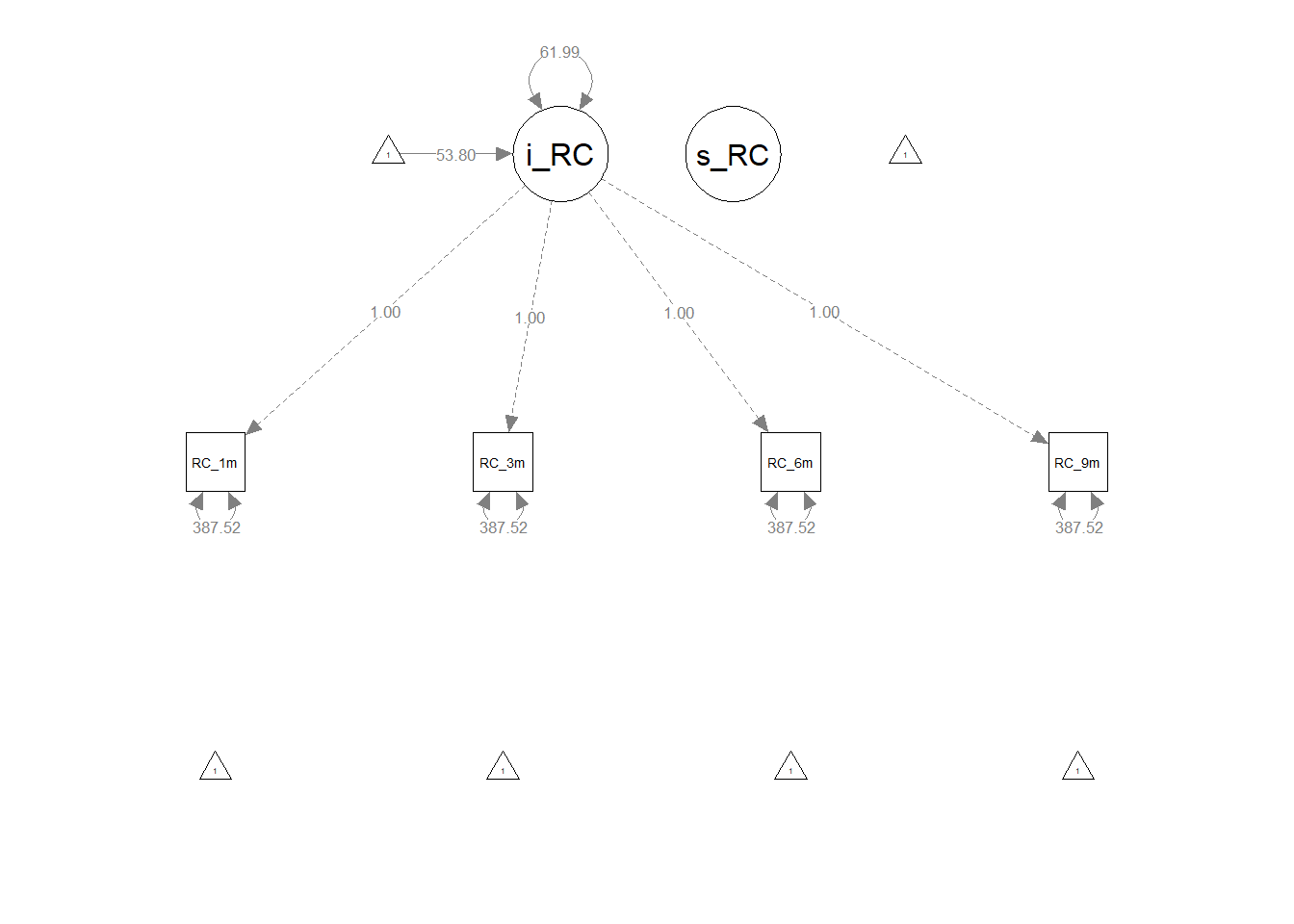

59.2.6.2 Freely Estimate Variance of Intercept Latent Factor

We will treat the baseline model as our nested model, and in this section, we will compare the baseline model to a more complex model (i.e., more freely estimated parameters). Specifically, we will specify a model in which we freely estimate the variance of the intercept latent factor. We’ll begin with the same model specifications as the baseline model, except we will relax the constraint on the variance of the intercept latent factor (i_RC) in order to freely estimate it. As indicated by the model specification annotation with three hashtags (###) instead of the typical one hashtag, we will freely estimate the variance of the intercept latent factor by removing 0*, such that the resulting line is now: i_RC ~~ i_RC. Beyond that, we will change the model specification object name to lgm_mod2 and the fitted model object to lgm_fit2 for subsequent model comparison purposes.
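One common way to compare nested models is a chi-square difference (likelihood ratio) test. The following base R sketch illustrates the arithmetic using the test statistics and degrees of freedom printed in the summaries of the two models in this section; the object names are hypothetical, and the sketch is an illustration rather than a replacement for a model comparison function.

```r
# Test statistics and df copied from the two model summaries
chisq_nested <- 3062.245  # baseline model (lgm_fit1)
df_nested    <- 12
chisq_full   <- 2997.931  # intercept variance freely estimated (lgm_fit2)
df_full      <- 11

# Chi-square difference and its degrees of freedom
delta_chisq <- chisq_nested - chisq_full
delta_df    <- df_nested - df_full

# Upper-tail p-value from the chi-square distribution
p_value <- pchisq(delta_chisq, df = delta_df, lower.tail = FALSE)

print(c(delta_chisq = delta_chisq, delta_df = delta_df, p_value = p_value))
```

A statistically significant difference suggests that the more complex model fits better than the nested model. Once both models have been estimated, passing the two fitted objects to the anova function (e.g., anova(lgm_fit1, lgm_fit2)) should report the same comparison.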

# Specify freely estimated intercept factor variance & assign to object

lgm_mod2 <- "

# Specify and constrain intercept factor loadings

i_RC =~ 1*RC_1m + 1*RC_3m + 1*RC_6m + 1*RC_9m

# Specify and constrain slope factor loadings to zero (no growth)

s_RC =~ 0*RC_1m + 0*RC_3m + 0*RC_6m + 0*RC_9m

# Freely estimate mean of intercept latent factor

i_RC ~ 1

# Constrain mean of slope latent factor to zero (average slope set to zero)

s_RC ~ 0*1

### Freely estimate intercept latent factor variance (allow to vary)

i_RC ~~ i_RC

# Constrain slope latent factor variance to zero (don't allow to vary)

s_RC ~~ 0*s_RC

# Constrain covariance between intercept & slope latent factors to zero

i_RC ~~ 0*s_RC

# Constrain (residual) error variances to be equal (homogeneity of variances)

RC_1m ~~ e*RC_1m

RC_3m ~~ e*RC_3m

RC_6m ~~ e*RC_6m

RC_9m ~~ e*RC_9m

"

# Estimate LGM & assign to fitted model object

lgm_fit2 <- growth(lgm_mod2, # name of specified model object

data=df) # name of wide-format data frame object

# Print summary of model results

summary(lgm_fit2, # name of fitted model object

fit.measures=TRUE, # request model fit indices

standardized=TRUE) # request standardized estimates

## lavaan 0.6.15 ended normally after 21 iterations

##

## Estimator ML

## Optimization method NLMINB

## Number of model parameters 6

## Number of equality constraints 3

##

## Number of observations 650

##

## Model Test User Model:

##

## Test statistic 2997.931

## Degrees of freedom 11

## P-value (Chi-square) 0.000

##

## Model Test Baseline Model:

##

## Test statistic 1226.041

## Degrees of freedom 6

## P-value 0.000

##

## User Model versus Baseline Model:

##

## Comparative Fit Index (CFI) 0.000

## Tucker-Lewis Index (TLI) -0.335

##

## Loglikelihood and Information Criteria:

##

## Loglikelihood user model (H0) -11597.668

## Loglikelihood unrestricted model (H1) -10098.702

##

## Akaike (AIC) 23201.336

## Bayesian (BIC) 23214.767

## Sample-size adjusted Bayesian (SABIC) 23205.242

##

## Root Mean Square Error of Approximation:

##

## RMSEA 0.646

## 90 Percent confidence interval - lower 0.627

## 90 Percent confidence interval - upper 0.666