Chapter 48 Identifying Predictors of Turnover Using Logistic Regression

In this chapter, we will learn how to estimate a (binary) logistic regression model in order to identify potential predictors of employee voluntary turnover, when voluntary turnover is operationalized as a dichotomous (i.e., binary) variable (e.g., stay vs. quit).

48.1 Conceptual Overview

Logistic regression (logit model) is part of the family of generalized linear models (GLMs). Assuming statistical assumptions have been satisfied, a binary logistic regression model is appropriate when the outcome variable of interest is dichotomous (i.e., binary) and when the predictor variable(s) of interest is/are continuous (interval, ratio) or categorical (nominal, ordinal). Unlike ordinary least squares (OLS) estimation, which was covered previously in the context of simple linear regression and multiple linear regression, logistic regression coefficients are typically estimated using maximum likelihood (ML). There are also extensions of the logistic regression like multinomial and ordinal logistic regression, where these extensions are appropriate when the categorical outcome variable is nominal or ordinal with three or more levels/categories.

Logistic regression can be used to determine the odds that a dichotomous event occurs (e.g., stay vs. quit) given higher or lower values/levels on one or more predictor variables, where odds refers to the probability of an event occurring (p) relative to the probability of the event not occurring (1 - p). As such, an odds value of 1 can be interpreted as 1 to 1 odds; or in other words, the probability of the event occurring is equal to the probability of the event not occurring, which would be akin to flipping a fair coin. For example, if we find that the probability of quitting is .75 (p = .75; i.e., event occurring), then by extension, the probability of not quitting is .25 (1 - p = .25; i.e., event not occurring). Given these probabilities, the odds of quitting are 3 (.75 / .25 = 3), and the odds of not quitting are 1/3 or .33, which is calculated as: .25 / (1 - .25). A logit transformation of the odds of quitting and of the odds of not quitting will be symmetrical, where a logit transformation refers to taking the natural log (\(\ln\)) of both values (i.e., logarithmic transformation). For example, a logit transformation of 3 and 1/3 yields 1.10 and -1.10, which are symmetrical: \(\ln(3) = 1.10\) and \(\ln(1/3) = -1.10\).

48.1.1 Review of Logistic Regression

Just as there is a distinction between simple and multiple linear regression models, we can also draw a distinction between simple and multiple logistic regression models. When there is a single predictor variable and a dichotomous outcome variable, we can apply what is referred to as a simple logistic regression model. More specifically, a simple logistic regression refers to the bivariate linear association between a predictor variable and a dichotomous outcome variable that has undergone a logit transformation, as shown in the equation below.

\(logit(p) = \log(odds) = \ln(\frac{p}{1-p}) = b_0 + b_1(X_1)\)

where \(logit(p)\) represents the logit transformation of the outcome, \(\log(odds)\) represents the log(arithmic) odds, \(\ln\) represents the natural log, \(p\) represents the probability of an event occurring, \(b_0\) represents the intercept value, and \(b_1\) represents the regression coefficient (i.e., slope, weight) of the association between the predictor variable \(X_1\) and the logit transformation of the outcome variable.

And when we have two or more predictor variables and a single dichotomous outcome variable, we estimate what is called a multiple logistic regression model, where an example of a multiple logistic regression model follows.

\(logit(p) = \log(odds) = \ln(\frac{p}{1-p}) = b_0 + b_1(X_1) + b_2(X_2)\)

where \(b_2\) represents the regression coefficient (i.e., slope, weight) of the association between the second predictor variable \(X_2\) and the logit transformation of the outcome variable.

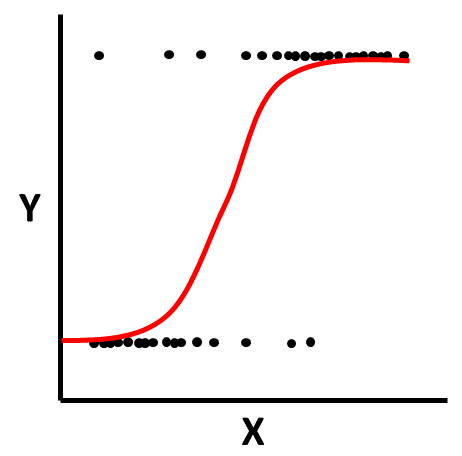

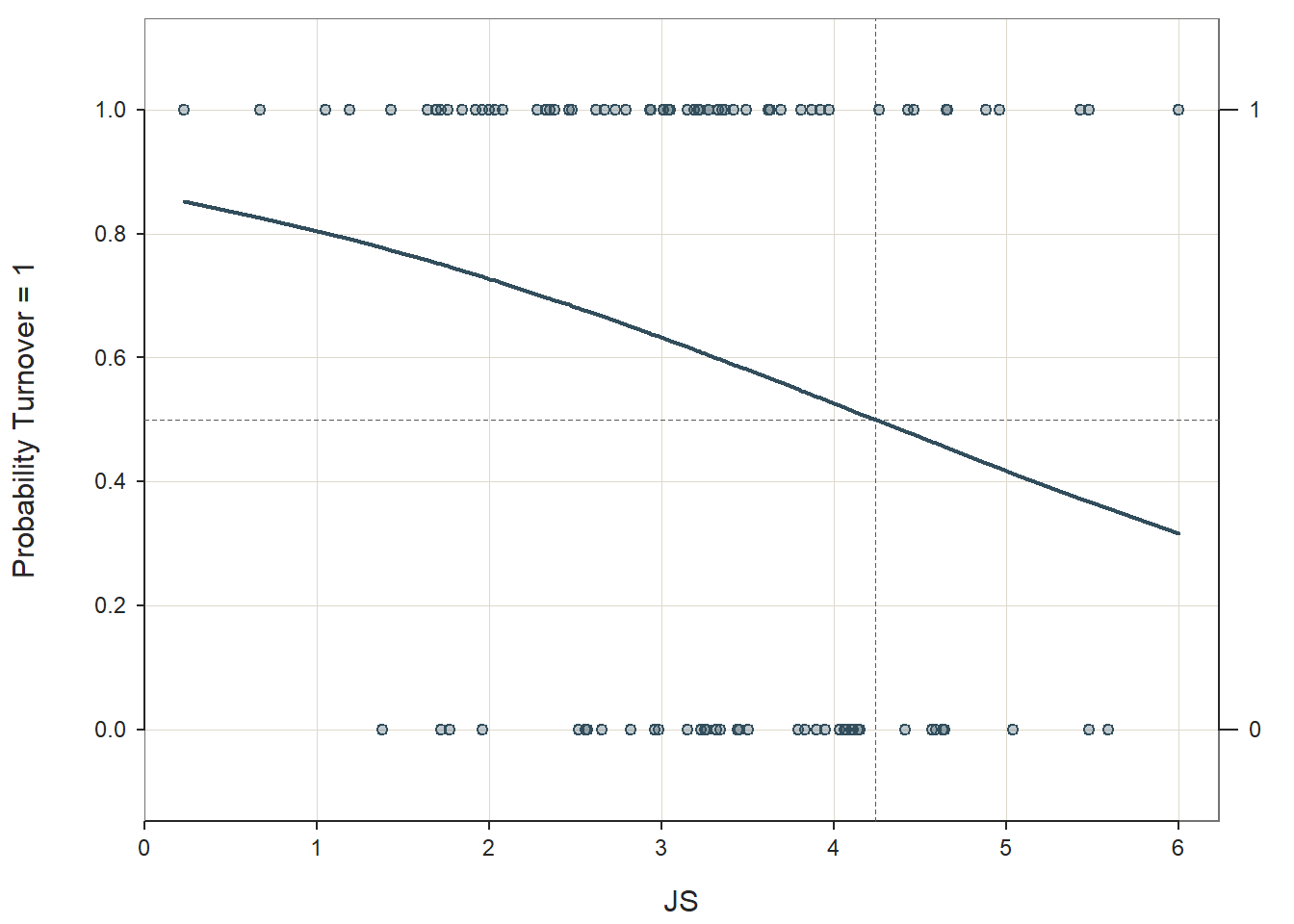

Logistic Function: Logistic regression is predicated on the logistic function. The scatter plot figure shown below illustrates the logistic function when there is a continuous (interval, ratio) predictor variable called \(X\) and a dichotomous outcome variable called \(Y\). Because a linear function would not not closely approximate the association between these two variables, we instead use a logistic function, which is a sigmoidal (or sigmoid) function and takes the visual form of an S-curve.

Just like simple and multiple linear regression models, regression coefficients are estimated in simple logistic regression models, but these coefficients are not perhaps as easily interpretable in their original form because the outcome variable undergoes a logarithmic transformation, as noted above. The regression coefficients in a logistic regression model represent the change in log odds (i.e., logit transformation) for every one unit change in the predictor variable.

Odds Ratio: To make a statistically significant regression coefficient (\(b_i\)) easier to interpret, we often convert the coefficient to an odds ratio. To do so, we exponentiate the coefficient using Euler’s number (\(e\)). Euler’s number (\(e\)) is an irrational number and mathematical constant that is approximately equal to 2.71828.

\(e^{b_i}\)

An odds ratio that is less than 1.00 indicates a negative association between the predictor variable and outcome variable, and an odds ratio that is greater than 1.00 indicates a positive association. An odds ratio equal to 1.00 indicates that there is no association between the variables.

Note: In the case of a multiple logistic regression model where we have two or more predictor variables, we would describe an odds ratios more accurately as an adjusted odds ratio because we are statistically controlling for the other predictor variable(s) in the model.

Example of Interpreting a Negative Association: As an example, let’s imagine in a simple logistic regression model we find that the coefficient for the association between job satisfaction (continuous predictor variable) and voluntary turnover (dichotomous outcome variable; i.e., stay = 0 vs. quit = 1) is -.42 – that is, we find a negative association. If we interpret the coefficient in its original form, we might say something like: “For every one unit increase in job satisfaction, there is as .42 unit reduction in log odds of quitting.” Such language will not typically be well-received by organizational stakeholders; however, if we convert the coefficient to an odds ratio by exponentiating it, we might find it easier to explain the finding to ourselves and others.

\(e^{-.42} = .66\)

In this example, the odds ratio of .66 is less than 1.00, which reflects back to us that the association is indeed negative, which we already knew from the original log odds coefficient value of -.42. We can interpret this odds ratio as follows: “For every unit increase in job satisfaction, the odds of quitting is reduced by 34% (1 - .66 = .34).” Alternatively, we can interpret the odds ratio from a different vantage point if we compute its reciprocal, which is 1.52 (1 / .66 = 1.52); in doing so, we can interpret the finding as: “For every unit increase in job satisfaction, the odds of quitting is reduced by 1 in 1.52.” Or, we could frame the finding in terms of not quitting: “For every unit increase in job satisfaction, the odds of not quitting is 1.52 times greater – or has a 1.52 times higher likelihood.”

Example of Interpreting a Positive Association: Now that we’ve worked through an example of a negative association, let’s practice interpreting a positive association. Let’s imagine a different simple logistic regression model in which we find that the coefficient for the association between turnover intentions (continuous predictor variable) and voluntary turnover (dichotomous outcome variable; i.e., stay = 0 vs. quit = 1) is .89. When interpreting the coefficient in its original form, we might say: “For every one unit increase in turnover intentions, there is as .89 unit increase in log odds of quitting.” Just as we did before, we can exponentiate the coefficient to find the odds ratio.

\(e^{.99} = 2.44\)

Because the odds ratio of 2.44 is greater than 1.00, it confirms to us that the association between turnover intentions and voluntary turnover is positive. We can interpret this finding as: “For every unit increase in turnover intentions, the odds of quitting is 2.44 times greater – or has a 2.44 times higher likelihood.”

As another example, let’s imagine that we observe an odds ratio of 1.29 with respect to negative affectivity in relation to voluntary turnover. We could interpret this finding as: “For every unit increase in negative affectivity, the odds of quitting is 1.29 times greater.” Or, because 1.29 times greater is equivalent to saying that there was a 29% increase, we could say: “For every unit increase in negative affectivity, the odds of quitting increases by 29%.”

Predicted Probabilities: Our logistic regression coefficients can also be used to predict the probability of the event occurring for different value(s) of the predictor variable(s). The equations that follow can help us to understand algebraically how we can determine the probability (\(p\)) of an event occurring based on the coefficients we might estimate for simple logistic regression model.

\(\ln(\frac{p}{1-p}) = b_0 + b_1(X_1)\)

\(\frac{p}{1-p} = e^{b_0 + b_1(X_1)}\)

\(p = \frac{e^{b_0 + b_1(X_1)}}{1+e^{b_0 + b_1(X_1)}}\)

where \(e\) represents Euler’s number.

As an example, let’s first imagine that our estimated intercept (\(b_0\)) is .32 and our estimated regression coefficient associated with the predictor variable (\(b_1\)) is -.09. Next, let’s imagine that someone has a score of 3 on the predictor variable (\(X_1\)). If we plug those values into the equation, we will find that the probability of a person with a score of 3 on the predictor variable is .51.

\(p = \frac{e^{b_0 + b_1(X_1)}}{1+e^{b_0 + b_1(X_1)}} = \frac{e^{.32 + (-.09 \times 3)}}{1+e^{.32 + (-.09 \times 3)}} = .51\)

If we set a probability threshold of .50 for experiencing the event, then we would classify anyone who scores a 3 on the predictor variable as having been predicted to experience the event in question. If however, the computed probability had been less than the probability threshold of .50, then we would have classified any associated with cases as having been predicted to not experience the event in question.

48.1.1.1 Statistical Assumptions

The statistical assumptions that should be met prior to running and/or interpreting estimates from a simple or multiple logistic regression model include:

- Cases are randomly sampled from the population, such that the variable scores for one individual are independent of the variable scores of another individual;

- Data are free of bivariate/multivariate outliers;

- The association between any continuous predictor variable(s) and the logit transformation of the outcome variable is linear;

- The outcome variable is dichotomous;

- For a multiple logistic regression model, there is no (multi)collinearity between predictor variables.

The fifth statistical assumption refers to the concept of collinearity (multicollinearity). This can be a tricky concept to understand, so let’s take a moment to unpack it. When two or more predictor variables are specified in a regression model, as is the case with multiple logistic regression, we need to be wary of collinearity. Collinearity refers to the extent to which predictor variables correlate with each other. Some level of intercorrelation between predictor variables is to be expected and is acceptable; however, if collinearity becomes substantial, it can affect the weights – and even the signs – of the regression coefficients in our model, which can be problematic from an interpretation standpoint. As such, we should avoid including predictors in a multiple linear regression model that correlate highly with one another. The tolerance statistic is commonly computed and serves as an indicator of collinearity. The tolerance statistic is computed by computing the shared variance (R2) of just the predictor variables in a single model (excluding the outcome variable), and subtracting that R2 value from 1 (i.e., 1 - R2). We typically grow concerned when the tolerance statistic falls below .20 and closer to .00. Ideally, we want the tolerance statistic to approach 1.00, as this indicates that there are lower levels of collinearity. From time to time, you might also see the variance inflation factor (VIF) reported as an indicator of collinearity; the VIF is just the reciprocal of the tolerance (i.e., 1/tolerance), and in my opinion, it is redundant to report and interpret both the tolerance and VIF. My recommendation is to focus just on the tolerance statistic when inferring whether the statistical assumption of no collinearity might have been violated.

Finally, with respect to the assumption that cases are randomly sampled from population, we will assume in this chapter’s data that this is not an issue. If we were to suspect, however, that there were some clustering or nesting of cases in units/groups (e.g., by supervisors, units, or facilities) with respect to our outcome variable, then we would need to run some type of multilevel model (e.g., multilevel logit model), which is beyond the scope of this tutorial. An intraclass correlation (ICC) can be used to diagnose such nesting or cluster. Failing to account for clustering or nesting in the data can bias estimates of standard errors, which ultimately influences the p-values and inferences of statistical significance.

48.1.1.2 Statistical Signficance

Using null hypothesis significance testing (NHST), we interpret a p-value that is less than .05 (or whatever two- or one-tailed alpha level we set) to meet the standard for statistical significance, meaning that we reject the null hypothesis that the regression coefficient is equal to zero. In other words, if a regression coefficient’s p-value is less than .05, we conclude that the regression coefficient differs from zero to a statistically significant extent. In contrast, if the regression coefficient’s p-value is equal to or greater than .05, then we fail to reject the null hypothesis that the regression coefficient is equal to zero. Put differently, if a regression coefficient’s p-value is equal to or greater than .05, we conclude that the regression coefficient does not differ from zero to a statistically significant extent, leading us to conclude that there is no association between the predictor variable and the outcome variable in the population. Keep in mind that in the context of a multiple logistic regression model, the association between each predictor variable and the logit transformation of the outcome variable must be interpreted with statistical control in mind, as we are effectively testing whether each predictor variable shows evidence of incremental validity in the presence of any other predictor variables.

When setting an alpha threshold, such as the conventional two-tailed .05 level, sometimes the question comes up regarding whether borderline p-values signify significance or nonsignificance. For our purposes, let’s be very strict in our application of the chosen alpha level. For example, if we set our alpha level at .05, p = .049 would be considered statistically significant, and p = .050 would be considered statistically nonsignificant.

Because our regression model estimates are based on data from a sample that is drawn from an underlying population, sampling error will affect the extent to which our sample is representative of the population from which its drawn. That is, a regression coefficient estimate (b) is a point estimate of the population parameter that is subject to sampling error. Fortunately, confidence intervals can give us a better idea of what the true population parameter value might be. If we apply an alpha level of .05 (two-tailed), then the equivalent confidence interval (CI) is a 95% CI. In terms of whether a regression coefficient is statistically significant, if the lower and upper limits of 95% CI do not include zero, then this tells us the same thing as a p-value that is less than .05. Strictly speaking, a 95% CI indicates that if we were to hypothetically draw many more samples from the underlying population and construct CIs for each of those samples, then the true parameter (i.e., true value of the regression coefficient in the population) would likely fall within the lower and upper bounds of 95% of the estimated CIs. In other words, the 95% CI gives us an indication of plausible values for the population parameter while taking into consideration sampling error. A wide CI (i.e., large difference between the lower and upper limits) signifies more sampling error, and a narrow CI signifies less sampling error.

Note: In a logistic regression model, we may also construct confidence intervals around the odds ratios.

48.1.1.3 Practical Significance

In its original form, a logistic regression coefficient is not an effect size; that is, it doesn’t provide an indication of practical significance. The odds ratio, however, can be conceptualized as an effect size. With that being said, there are some caveats. First, an odds ratio that is computed directly from an unstandardized logistic regression coefficient needs to be interpreted based on the raw scaling of the predictor variable, as the interpretation of the odds ratio has to do with the change in odds for unit change in the predictor variable. If we wish to compare odds ratios within or between models, we need to take this scaling issue into account. Second, in the case of a multiple logistic regression model, statistical control is at play, which means that the odds ratios are more accurately described as adjusted odds ratios.

There are different thresholds we can apply when interpreting the magnitude of an odds ratio, and below I provide some thresholds that we will use in this tutorial. With that being said, thresholds for qualitatively interpreting effect sizes should be context dependent, and there are other thresholds we might apply.

| Odds Ratio > 1 | Odds Ratio < 1 | Description |

|---|---|---|

| 1.2 | .8 | Small |

| 2.5 | .4 | Medium |

| 4.3 | .2 | Large |

At the model level, we can’t compute a true R2 when estimating a logistic regression model. We can, however, compute a pseudo-R2, There are different formulas available for computing pseudo-R2 (e.g., Cox & Snell, McFadden), and in this chapter we’ll focus on the following Nagelkerke (1991) formula:

\(pseudo-R^2 = \frac{1 - (\frac{L(M_{null})}{L(M_{full})})^{2/N}}{1-L(M_{null})^{2/N}}\)

where \(L(M_{null})\) is the likelihood of the outcome variable given a null, intercept-only model, \(L(M_{full})\) is the likelihood of the outcome variable given the predictor variable(s) in the model, and \(N\) is the sample size.

It’s important to note, though, that as the name implies, a pseudo-R2 is not the same thing as a true R2. Thus, while we need to be cautious in our interpretations.

In addition to pseudo-R2, we can also describe the classification accuracy of the model by using a confusion matrix (or classification table). A confusion matrix presents the percentage of correctly predicted values on the outcome variable; if a probability for a case based on the model is equal to or greater than .50, then it would be classified as a probability of 1, and all else would be classified as 0. For example, using a confusion matrix, we can make statements like: “The model correctly classified 55.9% of the employees as either stay or quit.”

To indicate how well your model fit the data and performed, I recommend reporting either pseudo-R2, model classification accuracy, or both.

Note: Typically, we only interpret the practical significance of an effect if the effect was found to be statistically significant. The logic is that if an effect (e.g., association, difference) is not statistically significant, then we should treat it as no different than zero, and thus it wouldn’t make sense to the interpret the size of something that statistically has no effect.

48.1.1.4 Sample Write-Up

A voluntary turnover study was conducted based on a sample 99 employees from the past year, some of whom quit the company and some of whom stayed. The focal outcome variable is turnover behavior (quit vs. stay), and because it is dichotomous, we used logistic regression. We were, specifically, interested in the extent to which employees’ self-reported job satisfaction, negative affectivity, and turnover intentions were associated with their decisions to quit or stay, and thus all three were was used as continuous predictor variables in our multiple logistic regression model. In total, due to missing data, 95 employees were included in our analysis. Results indicated that job satisfaction was not associated with turnover behavior to a statistically significant extent (b = -.233, p = .293, 95% CI[-.667, .201]). Negative affectivity, however, was positively and significantly associated with turnover behavior (b = 1.195, p = .017, 95% CI[.216, 2.174]). For every one unit increase in negative affectivity, the odds of quitting were 3.304 times greater, when controlling for the other predictor variables in the model. Similarly, turnover intentions were also positively and significantly associated with turnover behavior (b = .897, p = .005, 95% CI[.276, 1.517]). For every one unit increase in turnover intentions, the odds of quitting were 2.451 times greater, when controlling for other predictor variables in the model. Both of these significant associations can be described as medium in magnitude. Overall, based on our estimated multiple logistic regression model, we were able to correct classify 78.9% of employees from our sample using the estimated multiple logistic regression model. Finally, the estimated Nagelkerke pseudo-R2 was .073. We can cautiously conclude that job satisfaction explains 7.3% of the variance in voluntary turnover.

48.2 Tutorial

This chapter’s tutorial demonstrates how to estimate simple and multiple logistic regression models using R.

48.2.1 Video Tutorials

As usual, you have the choice to follow along with the written tutorial in this chapter or to watch the video tutorial below.

Link to video tutorial: https://youtu.be/O7gRceyeyT8

48.2.2 Functions & Packages Introduced

| Function | Package |

|---|---|

Logit |

lessR |

log |

base R |

PseudoR2 |

DescTools |

exp |

base R |

glm |

base R |

merge |

base R |

data.frame |

base R |

mutate |

dplyr |

ifelse |

base R |

c |

base R |

predict |

base R |

detach |

base R |

48.2.3 Initial Steps

If you haven’t already, save the file called “Turnover.csv” into a folder that you will subsequently set as your working directory. Your working directory will likely be different than the one shown below (i.e., "H:/RWorkshop"). As a reminder, you can access all of the data files referenced in this book by downloading them as a compressed (zipped) folder from the my GitHub site: https://github.com/davidcaughlin/R-Tutorial-Data-Files; once you’ve followed the link to GitHub, just click “Code” (or “Download”) followed by “Download ZIP”, which will download all of the data files referenced in this book. For the sake of parsimony, I recommend downloading all of the data files into the same folder on your computer, which will allow you to set that same folder as your working directory for each of the chapters in this book.

Next, using the setwd function, set your working directory to the folder in which you saved the data file for this chapter. Alternatively, you can manually set your working directory folder in your drop-down menus by going to Session > Set Working Directory > Choose Directory…. Be sure to create a new R script file (.R) or update an existing R script file so that you can save your script and annotations. If you need refreshers on how to set your working directory and how to create and save an R script, please refer to Setting a Working Directory and Creating & Saving an R Script.

Next, read in the .csv data file called “Turnover.csv” using your choice of read function. In this example, I use the read_csv function from the readr package (Wickham, Hester, and Bryan 2024). If you choose to use the read_csv function, be sure that you have installed and accessed the readr package using the install.packages and library functions. Note: You don’t need to install a package every time you wish to access it; in general, I would recommend updating a package installation once ever 1-3 months. For refreshers on installing packages and reading data into R, please refer to Packages and Reading Data into R.

# Install readr package if you haven't already

# [Note: You don't need to install a package every

# time you wish to access it]

install.packages("readr")# Access readr package

library(readr)

# Read data and name data frame (tibble) object

td <- read_csv("Turnover.csv")## Rows: 99 Columns: 6

## ── Column specification ────────────────────────────────────────────────────────────────────────────────────────────────────────────

## Delimiter: ","

## chr (1): ID

## dbl (5): Turnover, JS, OC, TI, NAff

##

## ℹ Use `spec()` to retrieve the full column specification for this data.

## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.## [1] "ID" "Turnover" "JS" "OC" "TI" "NAff"## spc_tbl_ [99 × 6] (S3: spec_tbl_df/tbl_df/tbl/data.frame)

## $ ID : chr [1:99] "EMP559" "EMP561" "EMP571" "EMP589" ...

## $ Turnover: num [1:99] 1 1 1 1 1 1 0 1 1 1 ...

## $ JS : num [1:99] 4.96 1.72 1.64 3.01 3.04 3.81 1.38 3.92 2.35 1.69 ...

## $ OC : num [1:99] 5.32 1.47 0.87 2.15 1.94 3.81 0.83 3.88 3.03 2.82 ...

## $ TI : num [1:99] 0.51 4.08 2.65 4.17 3.27 3.01 3.18 1.7 2.44 2.58 ...

## $ NAff : num [1:99] 1.87 2.48 2.84 2.43 2.76 3.67 2.3 2.8 2.71 2.07 ...

## - attr(*, "spec")=

## .. cols(

## .. ID = col_character(),

## .. Turnover = col_double(),

## .. JS = col_double(),

## .. OC = col_double(),

## .. TI = col_double(),

## .. NAff = col_double()

## .. )

## - attr(*, "problems")=<externalptr>## # A tibble: 6 × 6

## ID Turnover JS OC TI NAff

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 EMP559 1 4.96 5.32 0.51 1.87

## 2 EMP561 1 1.72 1.47 4.08 2.48

## 3 EMP571 1 1.64 0.87 2.65 2.84

## 4 EMP589 1 3.01 2.15 4.17 2.43

## 5 EMP592 1 3.04 1.94 3.27 2.76

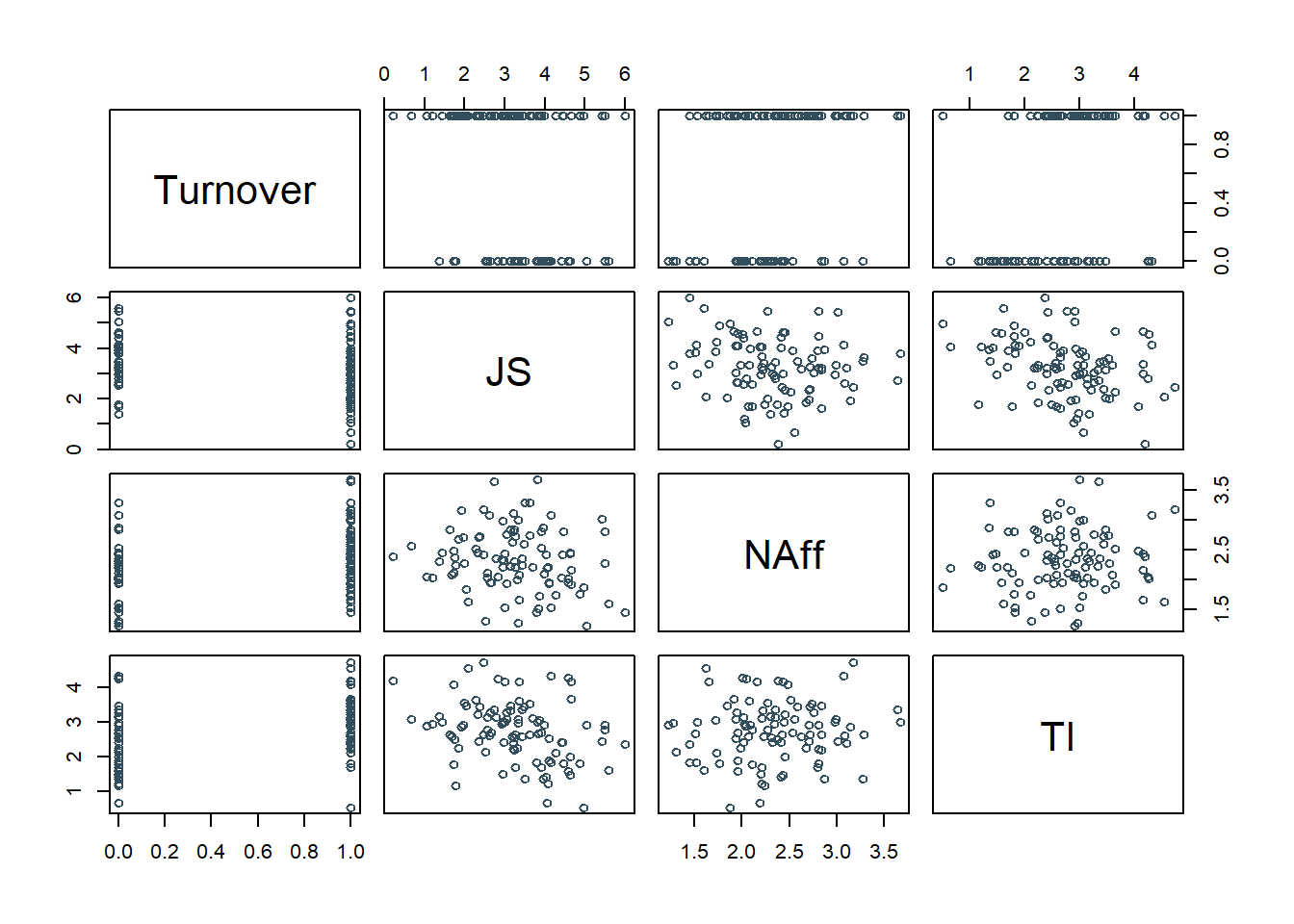

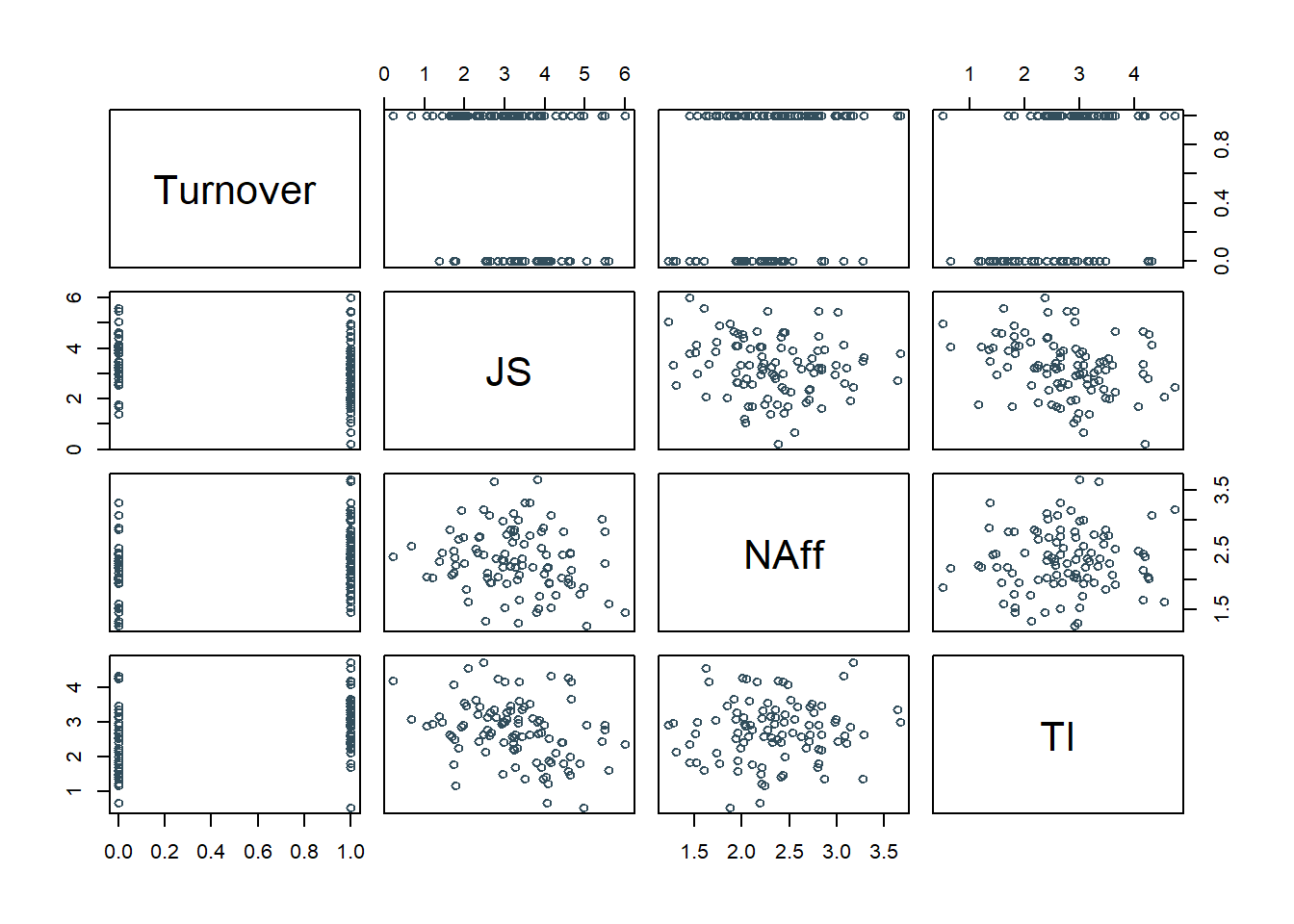

## 6 EMP601 1 3.81 3.81 3.01 3.67There are 5 variables and 99 cases (i.e., employees) in the td data frame: ID, Turnover, JS, OC, TI, and NAff. Per the output of the str (structure) function above, all of the variables are of type numeric (continuous: interval/ratio), except for the ID variable, which is of type character. ID is the unique employee identifier variable. Imagine that these data were collected as part of a turnover study within an organization to determine the drivers/predictors of turnover based on a sample of employees who stayed and leaved during the past year. The variables JS, OC, TI, and NAff were collected as part of an annual survey and were later joined with the Turnover variable. Survey respondents rated each survey item using a 7-point response scale, ranging from strongly disagree (0) to strongly agree (6). JS contains the average of each employee’s responses to 10 job satisfaction items. OC contains the average of each employee’s responses to 7 organizational commitment items. TI contains the average of each employee’s responses to 3 turnover intentions items, where higher scores indicate higher levels of turnover intentions. NAff contains the average of each employee’s responses to 10 negative affectivity items. Turnover is a variable that indicates whether these individuals left the organization during the prior year, with 1 = quit and 0 = stayed. Note: If the Turnover variable were to include the character values of quit and stay instead of 1 and 0, the functions covered in this tutorial would automatically convert the character values to 0 and 1 (behind the scenes), where 0 would be assigned to the character value that comes first alphabetically.

48.2.4 Estimate Simple Logistic Regression Model

We’ll begin by specifying a simple logistic regression model, which means the model will include just one predictor variable. Let’s begin by regressing Turnover on JS using the Logit function from the lessR package (Gerbing, Business, and University 2021). If you haven’t already, install and access the lessR package.

As the first argument in the Logit function, specify the logistic regression model, wherein the dichotomous outcome variable is typed to the left of the ~ symbol, and the predictor variable is typed to the right of the ~ symbol. As the second argument, type data= followed by the name of the data frame to which both of the variables belong (td). Let’s begin by specifying JS as the predictor variable.

##

## Response Variable: Turnover

## Predictor Variable 1: JS

##

## Number of cases (rows) of data: 99

## Number of cases retained for analysis: 98

##

##

## BASIC ANALYSIS

##

## -- Estimated Model of Turnover for the Logit of Reference Group Membership

##

## Estimate Std Err z-value p-value Lower 95% Upper 95%

## (Intercept) 1.8554 0.6883 2.695 0.007 0.5063 3.2044

## JS -0.4378 0.1958 -2.236 0.025 -0.8216 -0.0540

##

##

## -- Odds Ratios and Confidence Intervals

##

## Odds Ratio Lower 95% Upper 95%

## (Intercept) 6.3939 1.6591 24.6415

## JS 0.6455 0.4397 0.9475

##

##

## -- Model Fit

##

## Null deviance: 131.746 on 97 degrees of freedom

## Residual deviance: 126.341 on 96 degrees of freedom

##

## AIC: 130.3413

##

## Number of iterations to convergence: 4

##

##

## ANALYSIS OF RESIDUALS AND INFLUENCE

## Data, Fitted, Residual, Studentized Residual, Dffits, Cook's Distance

## [sorted by Cook's Distance]

## [res_rows = 20 out of 98 cases (rows) of data]

## --------------------------------------------------------------------

## JS Turnover fitted residual rstudent dffits cooks

## 69 6.00 1 0.3162 0.6838 1.5688 0.3725 0.08496

## 7 1.38 0 0.7775 -0.7775 -1.7682 -0.2877 0.06241

## 73 5.48 1 0.3673 0.6327 1.4476 0.2949 0.04889

## 58 5.43 1 0.3724 0.6276 1.4363 0.2877 0.04618

## 12 1.72 0 0.7507 -0.7507 -1.6920 -0.2486 0.04353

## 31 1.77 0 0.7466 -0.7466 -1.6810 -0.2429 0.04117

## 13 1.96 0 0.7305 -0.7305 -1.6393 -0.2219 0.03314

## 1 4.96 1 0.4217 0.5783 1.3332 0.2239 0.02609

## 33 4.88 1 0.4302 0.5698 1.3162 0.2138 0.02353

## 84 4.66 1 0.4540 0.5460 1.2703 0.1875 0.01757

## 63 4.65 1 0.4551 0.5449 1.2682 0.1863 0.01733

## 61 2.52 0 0.6797 -0.6797 -1.5199 -0.1668 0.01693

## 97 5.59 0 0.3562 -0.3562 -0.9554 -0.2021 0.01693

## 70 5.48 0 0.3673 -0.3673 -0.9731 -0.1985 0.01648

## 74 2.56 0 0.6758 -0.6758 -1.5115 -0.1635 0.01615

## 75 2.57 0 0.6749 -0.6749 -1.5095 -0.1626 0.01596

## 67 2.65 0 0.6671 -0.6671 -1.4929 -0.1563 0.01454

## 80 5.04 0 0.4131 -0.4131 -1.0457 -0.1813 0.01431

## 77 4.46 1 0.4757 0.5243 1.2296 0.1656 0.01336

## 39 4.43 1 0.4790 0.5210 1.2235 0.1625 0.01282

##

##

## PREDICTION

##

## Probability threshold for classification : 0.5

##

##

## Data, Fitted Values, Standard Errors

## [sorted by fitted value]

## [pred_all=TRUE to see all intervals displayed]

## --------------------------------------------------------------------

## JS Turnover label fitted std.err

## 69 6.00 1 0 0.3162 0.1215

## 97 5.59 0 0 0.3562 0.1120

## 70 5.48 0 0 0.3673 0.1090

## 73 5.48 1 0 0.3673 0.1090

##

## ... for the rows of data where fitted is close to 0.5 ...

##

## JS Turnover label fitted std.err

## 39 4.43 1 0 0.4790 0.07497

## 83 4.41 0 0 0.4812 0.07431

## 64 4.26 1 0 0.4976 0.06946

## 27 4.15 0 1 0.5097 0.06609

## 14 4.14 0 1 0.5107 0.06579

##

## ... for the last 4 rows of sorted data ...

##

## JS Turnover label fitted std.err

## 66 1.19 1 1 0.7916 0.07790

## 48 1.05 1 1 0.8015 0.07904

## 88 0.67 1 1 0.8266 0.08096

## 24 0.23 1 1 0.8525 0.08116

## --------------------------------------------------------------------

##

##

## ----------------------------

## Specified confusion matrices

## ----------------------------

##

## Probability threshold for predicting : 0.5

## Corresponding cutoff threshold for JS: 4.238

##

## Baseline Predicted

## ---------------------------------------------------

## Total %Tot 0 1 %Correct

## ---------------------------------------------------

## 1 59 60.2 10 49 83.1

## Turnover 0 39 39.8 8 31 20.5

## ---------------------------------------------------

## Total 98 58.2

##

## Accuracy: 58.16

## Sensitivity: 83.05

## Precision: 61.25

Note: In some instances, you might receive an error message like the one shown below. You can ignore such a message, as it just indicates that you have a very poor-performing model that results in predicted outcome-variable classifications that are all the same (e.g., all of the predicted values are 0). If you receive such a message, you can proceed forward with your interpretation of the output.

\(\color{red}{\text{Error:}}\) \(\color{red}{\text{All predicted values are 0.}}\) \(\color{red}{\text{Something is wrong here.}}\)

The output generates the model coefficient estimates, the odds ratios and their confidence intervals, model fit information (i.e., AIC), outlier detection, forecasts, and a confusion matrix. At the top of the output, we get information about which variables were included in our model (which we probably already knew), the number of cases (e.g., employees) in the data, and the number of cases retained for the analysis after excluding cases with missing data (N = 98).

48.2.4.1 Test Statistical Assumptions

To determine whether it’s appropriate to interpret the results of a simple logistic regression model, we need to first test the following statistical assumptions.

Cases Are Randomly Sampled from the Population: As mentioned in the statistical assumptions section, we will assume that the cases (i.e., employees) were randomly sampled from the population, and thus conclude that this assumption has been satisfied.

Outcome Variable Is Dichotomous: We already know that the outcome variable called Turnover is dichotomous (1 = quit, 0 = stayed), which means we have satisfied this assumption.

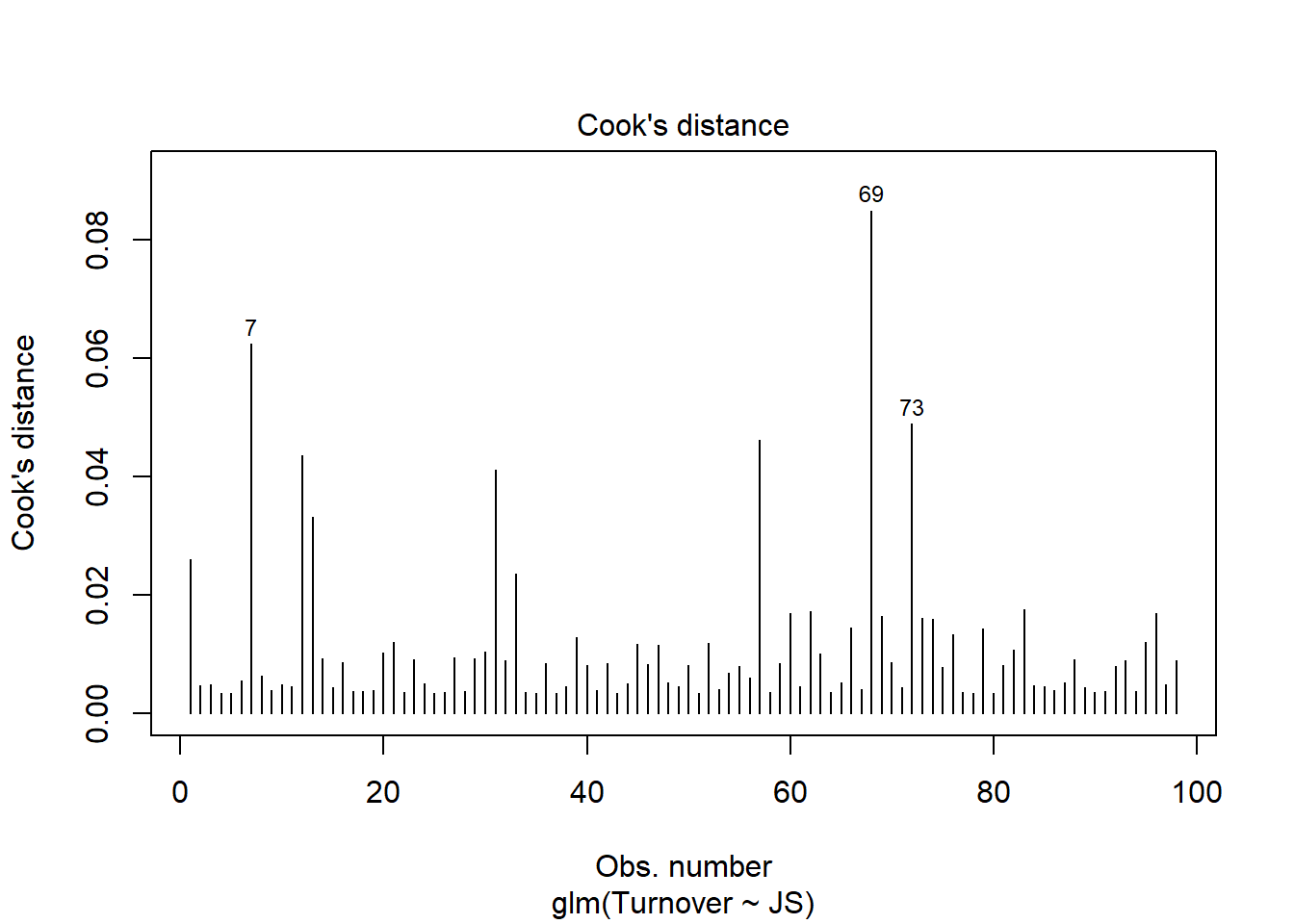

Data Are Free of Bivariate Outliers: To determine whether the data are free of bivariate outliers, let’s take a look at the text output section called Analysis of Residuals and Influence. We should find a table with a unique identifiers column (that shows the row number in your data frame object), the observed (actual) predictor and outcome variable values, the fitted (predicted) outcome variable values, the residual (error) between the fitted values and the observed outcome variable values, and the following three outlier/influence statistics: Studentized residual (rstdnt), number of standard error units that a fitted value shifts when the flagged case is removed (dffits), and Cook’s distance (cooks). The case associated with row number 66 has the highest Cook’s distance value (.085), followed by the cases associated with row numbers 67 and 71, which have Cook’s distance values of .062 and .049. A liberal threshold Cook’s distance is 1, which means that we would grow concerned if any of these values exceeded 1, whereas a more conservative threshold is 4 divided by the sample size (4 / 98 = .041). As a sensitivity analysis, we may want to estimate our model once more after removing the cases associated with row numbers 66, 66, and 71 from our data frame; however, these Cook’s distance values don’t look too concerning or out of the ordinary, and thus I wouldn’t recommend removing the associated cases. In general, we should be wary of removing outliers or influential cases and should do so only when we have a very strong justification for doing so.

Association Between Any Continuous Predictor Variable and Logit Transformation of Outcome Variable Is Linear: To test the assumption of linearity between a continuous predictor variable and the logit transformation of the outcome variable, we can add the interaction between the predictor variable and its logarithmic (i.e., natural log) transformation. [Note: We do not perform the following test/approach for categorical predictor variables.] We will use an approach that is commonly referred to as the Box-Tidwell approach (Hosmer and Lemeshow 2000). To apply this approach, we need to add the interaction term between our predictor variable JS and its logarithmic transformation to our logistic regression model – but not the main effect for the logarithmic transformation of JS. In our regression model formula, specify the dichotomous outcome variable Turnover to the left of the ~ operator. To the right of the ~ operator, type the name of the predictor variable JS followed by the + operator. After the + operator, type the name of the predictor variable JS, followed by the : operator and the log function from base R with the predictor variable JS as its sole parenthetical argument.

# Box-Tidwell diagnostic test of linearity between continuous predictor variable and

# logit transformation of outcome variable

Logit(Turnover ~ JS + JS:log(JS), data=td)##

## Response Variable: Turnover

## Predictor Variable 1: JS

##

## Number of cases (rows) of data: 99

## Number of cases retained for analysis: 98

##

##

## BASIC ANALYSIS

##

## -- Estimated Model of Turnover for the Logit of Reference Group Membership

##

## Estimate Std Err z-value p-value Lower 95% Upper 95%

## (Intercept) 5.4461 3.2062 1.699 0.089 -0.8379 11.7300

## JS -2.9840 2.1693 -1.376 0.169 -7.2357 1.2677

## JS:log(JS) 1.1696 0.9778 1.196 0.232 -0.7467 3.0860

##

##

## -- Odds Ratios and Confidence Intervals

##

## Odds Ratio Lower 95% Upper 95%

## (Intercept) 231.8457 0.4326 124248.7675

## JS 0.0506 0.0007 3.5527

## JS:log(JS) 3.2208 0.4739 21.8896

##

##

## -- Model Fit

##

## Null deviance: 131.746 on 97 degrees of freedom

## Residual deviance: 124.621 on 95 degrees of freedom

##

## AIC: 130.6211

##

## Number of iterations to convergence: 5

##

##

## ANALYSIS OF RESIDUALS AND INFLUENCE

## Data, Fitted, Residual, Studentized Residual, Dffits, Cook's Distance

## [sorted by Cook's Distance]

## [res_rows = 20 out of 98 cases (rows) of data]

## --------------------------------------------------------------------

## JS Turnover fitted residual rstudent dffits cooks

## 7 1.38 0 0.8639 -0.8639 -2.105 -0.4827 0.158262

## 69 6.00 1 0.5290 0.4710 1.221 0.5442 0.090322

## 12 1.72 0 0.8029 -0.8029 -1.852 -0.3440 0.063819

## 31 1.77 0 0.7936 -0.7936 -1.821 -0.3267 0.055946

## 97 5.59 0 0.5044 -0.5044 -1.239 -0.3947 0.049267

## 73 5.48 1 0.4993 0.5007 1.224 0.3561 0.039962

## 70 5.48 0 0.4993 -0.4993 -1.221 -0.3554 0.039735

## 58 5.43 1 0.4972 0.5028 1.224 0.3418 0.036908

## 13 1.96 0 0.7577 -0.7577 -1.713 -0.2696 0.034590

## 80 5.04 0 0.4853 -0.4853 -1.174 -0.2378 0.017543

## 1 4.96 1 0.4840 0.5160 1.225 0.2327 0.017368

## 33 4.88 1 0.4830 0.5170 1.225 0.2184 0.015319

## 61 2.52 0 0.6572 -0.6572 -1.476 -0.1788 0.012429

## 74 2.56 0 0.6506 -0.6506 -1.462 -0.1758 0.011886

## 75 2.57 0 0.6490 -0.6490 -1.459 -0.1751 0.011761

## 84 4.66 1 0.4823 0.5177 1.221 0.1854 0.011051

## 63 4.65 1 0.4823 0.5177 1.221 0.1842 0.010899

## 67 2.65 0 0.6363 -0.6363 -1.433 -0.1701 0.010877

## 96 2.82 0 0.6108 -0.6108 -1.384 -0.1623 0.009530

## 21 4.64 0 0.4824 -0.4824 -1.159 -0.1737 0.009334

##

##

## PREDICTION

##

## Probability threshold for classification : 0.5

##

##

## Data, Fitted Values, Standard Errors

## [sorted by fitted value]

## [pred_all=TRUE to see all intervals displayed]

## --------------------------------------------------------------------

## JS Turnover label fitted std.err

## 84 4.66 1 0 0.4823 0.08526

## 63 4.65 1 0 0.4823 0.08471

## 21 4.64 0 0 0.4824 0.08416

## 53 4.63 0 0 0.4824 0.08363

##

## ... for the rows of data where fitted is close to 0.5 ...

##

## JS Turnover label fitted std.err

## 70 5.48 0 0 0.4993 0.15604

## 73 5.48 1 0 0.4993 0.15604

## 55 3.97 1 1 0.5005 0.06559

## 16 3.95 0 1 0.5015 0.06547

## 8 3.92 1 1 0.5031 0.06531

##

## ... for the last 4 rows of sorted data ...

##

## JS Turnover label fitted std.err

## 66 1.19 1 1 0.8945 0.08584

## 48 1.05 1 1 0.9147 0.08191

## 88 0.67 1 1 0.9582 0.06150

## 24 0.23 1 1 0.9874 0.02972

## --------------------------------------------------------------------

##

##

## ----------------------------

## Specified confusion matrices

## ----------------------------

##

## Probability threshold for predicting : 0.5

## Corresponding cutoff threshold for JS: 1.825

##

## Baseline Predicted

## ---------------------------------------------------

## Total %Tot 0 1 %Correct

## ---------------------------------------------------

## 1 59 60.2 9 50 84.7

## Turnover 0 39 39.8 14 25 35.9

## ---------------------------------------------------

## Total 98 65.3

##

## Accuracy: 65.31

## Sensitivity: 84.75

## Precision: 66.67

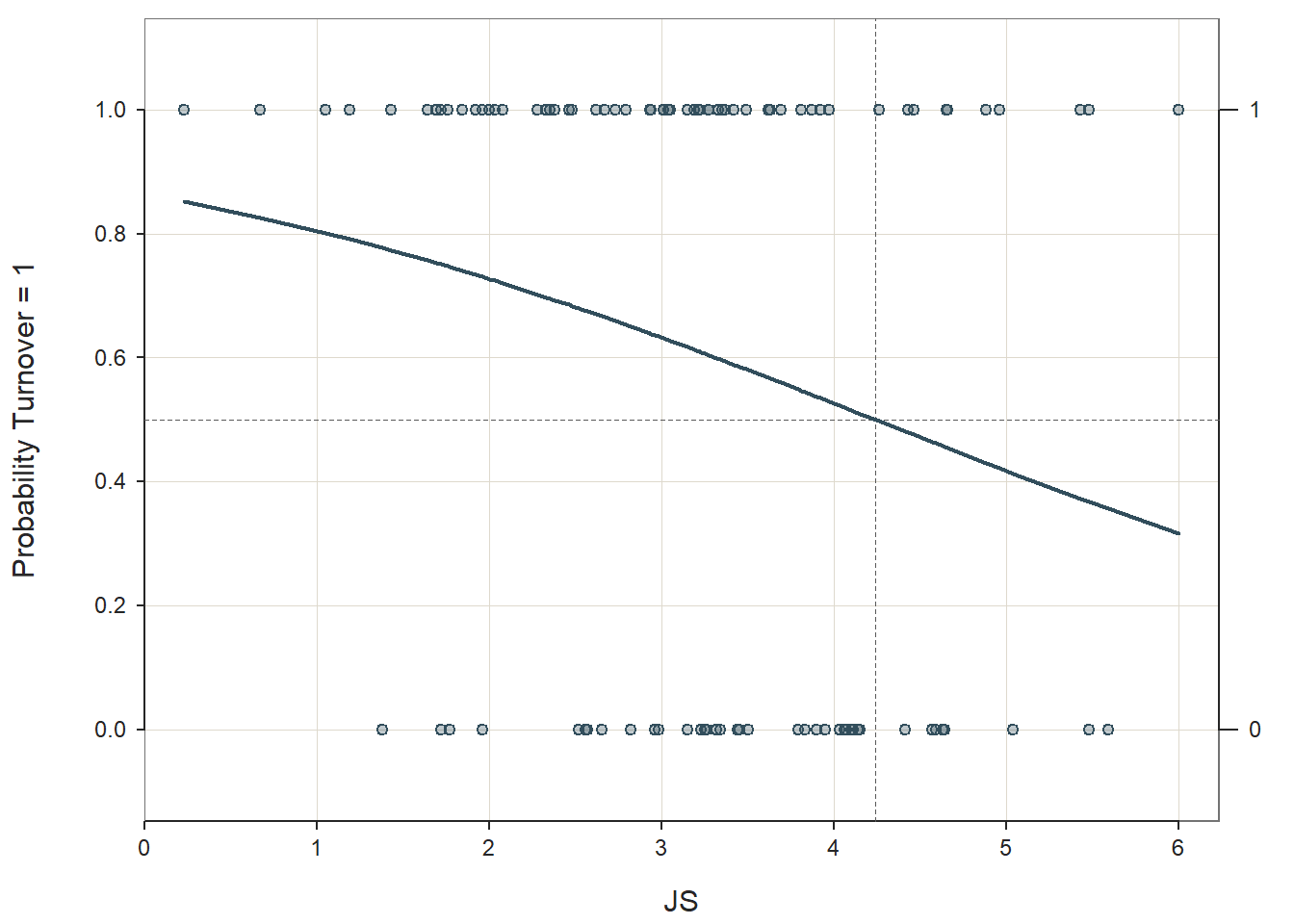

Because the interaction term (JS:log(JS)) regression coefficient of 1.1696 in the Model Coefficients table is nonsignificant (p = .232), we have no reason to believe that the association between the continuous predictor variable and the logit transformation of the outcome variable is nonlinear. If the interaction term had been statistically significant, then we might have evidence that the assumption was violated, and one potential solution would be to estimate a polynomial model of some kind to better fit the data; for more information on estimating nonlinear associations, check out Chapter 7 (“Curvilinear Effects in Logistic Regression”) from Osborne (2015). Finally, we only apply this test when the predictor variable in question is continuous (interval, ratio). In practice, however, note that for reasons of parsimony, we sometimes we might choose to estimate a linear model over a nonlinear/polynomial model when the former fits the data reasonably well.

Important Note: If the variable for which you are applying the Box-Tidwell approach described above has one or more cases with a score of zero, then you will receive an error message when you run the model with the log function. The reason for the error is that the log of zero or any negative value is (mathematically) undefined. There are many reasons why your variable might have cases with scores equal to zero, some of which include: (a) zero is a naturally occurring value for the scale on which the variable is based, or (b) the variable was grand-mean centered or standardized, such that the mean is now equal to zero. There are some approaches to dealing with this issue and neither approach I will show you is going to be perfect, but each approach will give you an approximate understanding of whether violation of the linearity assumption might be an issue. First, we can add a positive numeric constant to every score on the continuous predictor variable in question that will result in the new lowest score being 1. Why 1 you might ask? Well, the rationale is somewhat arbitrary; the log of 1 is zero, and there is something nice about grounding the lowest logarithmic value at 0. Due note, however, that the magnitude of the linear transformation will have some effect on the p-value associated with the Box-Tidwell interaction term. Second, if there are proportionally very few cases with scores of zero on the predictor variable in question, we can simply subset those cases out for the analysis.

Just for the sake of demonstration, let’s transform the JS variable so that its lowest score is equal to zero. This will give us an opportunity to test the two approaches I described above. Simply, create a new variable (JS_0) that is equal to JS minus the minimum value of JS.

# ONLY FOR DEMONSTRATION PURPOSES: Create new predictor variable where lowest score is zero

td$JS_0 <- td$JS - min(td$JS, na.rm=TRUE)Now that we have a variable called JS_0 with at least one score equal to zero, let’s try the try the Box-Tidwell test. I’m going to add the argument brief=TRUE to reduce the amount of output generated by the function.

# Box-Tidwell diagnostic test of linearity between continuous predictor variable and

# logit transformation of outcome variable [with predictor containing zero value(s)]

Logit(Turnover ~ JS_0 + JS_0:log(JS_0), data=td, brief=TRUE)If you ran the script above, you likely got an error message that looked like this:

\(\color{red}{\text{Error in glm.fit(x = c(1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, : NA/NaN/Inf in 'x'}}\)

The reason we got this error message is because the log of zero is undefined, and because we had at least one case with a value of zero on JS_0, it broke down the operations. If you see a message like that, then proceed with one or both of the following approaches (which I described above).

Using the first approach, create a new variable in which the JS_0 variable is linearly transformed such that the lowest score is 1. The equation below simply adds 1 and the absolute value of the minimum value to each score on the JS_0 variable, which results in the lowest score on the new variable (JS_1) being 1. As verification, I include the min function from base R.

# Linear transformation that results in lowest score being 1

td$JS_1 <- td$JS_0 + abs(min(td$JS_0, na.rm=TRUE)) + 1

# Verify that new lowest score is 1

min(td$JS_1, na.rm=TRUE)## [1] 1It worked! Now, using this new transformed variable, enter it into the Box-Tidwell test.

# Box-Tidwell diagnostic test of linearity between continuous predictor variable and

# logit transformation of outcome variable [with transformed predictor = 1]

Logit(Turnover ~ JS_1 + JS_1:log(JS_1), data=td, brief=TRUE)##

## Response Variable: Turnover

## Predictor Variable 1: JS_1

##

## Number of cases (rows) of data: 99

## Number of cases retained for analysis: 98

##

##

## BASIC ANALYSIS

##

## -- Estimated Model of Turnover for the Logit of Reference Group Membership

##

## Estimate Std Err z-value p-value Lower 95% Upper 95%

## (Intercept) 8.0985 5.0081 1.617 0.106 -1.7171 17.9141

## JS_1 -4.0594 2.9774 -1.363 0.173 -9.8950 1.7762

## JS_1:log(JS_1) 1.5097 1.2264 1.231 0.218 -0.8939 3.9133

##

##

## -- Odds Ratios and Confidence Intervals

##

## Odds Ratio Lower 95% Upper 95%

## (Intercept) 3289.6100 0.1796 60256846.1183

## JS_1 0.0173 0.0001 5.9072

## JS_1:log(JS_1) 4.5254 0.4090 50.0661

##

##

## -- Model Fit

##

## Null deviance: 131.746 on 97 degrees of freedom

## Residual deviance: 124.575 on 95 degrees of freedom

##

## AIC: 130.5747

##

## Number of iterations to convergence: 4There is no error message this time, and now we can see that the interaction term between the predictor variable and the log of the predictor variable (JS_1:log(JS_1)) is nonsignificant (b = 1.510, p = .218). Thus, we don’t see evidence that the assumption of linearity has been violated.

We could (also) use the second approach if we have proportionally very few values that are zero (or less than zero). To do so, we would just use the rows= argument to specify that we want to drop cases for which the predictor variable is equal to or less than zero. Note that we’re back to using the variable called JS_0 that forced to have at least one score equal to zero (solely for the purposes of demonstration in this tutorial).

# Box-Tidwell diagnostic test of linearity between continuous predictor variable and

# logit transformation of outcome variable [with only cases with scores greater than zero]

Logit(Turnover ~ JS_0 + JS_0:log(JS_0), data=td, rows=(JS_0 > 0), brief=TRUE)##

## Response Variable: Turnover

## Predictor Variable 1: JS_0

##

## Number of cases (rows) of data: 97

## Number of cases retained for analysis: 97

##

##

## BASIC ANALYSIS

##

## -- Estimated Model of Turnover for the Logit of Reference Group Membership

##

## Estimate Std Err z-value p-value Lower 95% Upper 95%

## (Intercept) 4.6652 2.8216 1.653 0.098 -0.8650 10.1953

## JS_0 -2.6157 1.9936 -1.312 0.189 -6.5230 1.2916

## JS_0:log(JS_0) 1.0392 0.9280 1.120 0.263 -0.7796 2.8579

##

##

## -- Odds Ratios and Confidence Intervals

##

## Odds Ratio Lower 95% Upper 95%

## (Intercept) 106.1854 0.4211 26777.8281

## JS_0 0.0731 0.0015 3.6387

## JS_0:log(JS_0) 2.8269 0.4586 17.4256

##

##

## -- Model Fit

##

## Null deviance: 130.725 on 96 degrees of freedom

## Residual deviance: 124.616 on 94 degrees of freedom

##

## AIC: 130.6162

##

## Number of iterations to convergence: 4We lost one case because that person had a score of zero on the JS_0 continuous predictor variable, and we see that the interaction term (JS_0:log(JS_0)) is non significant (b = 1.0392, p = .263).

To summarize, the two approaches we just implemented would only be used when testing the statistical assumption of linearity using the Box-Tidwell approach, and only if your continuous predictor variable has scores that are equal to or less than zero. Now that we’ve met the assumption of linearity, we’re finally ready to interpret the model results!

48.2.4.2 Interpret Model Results

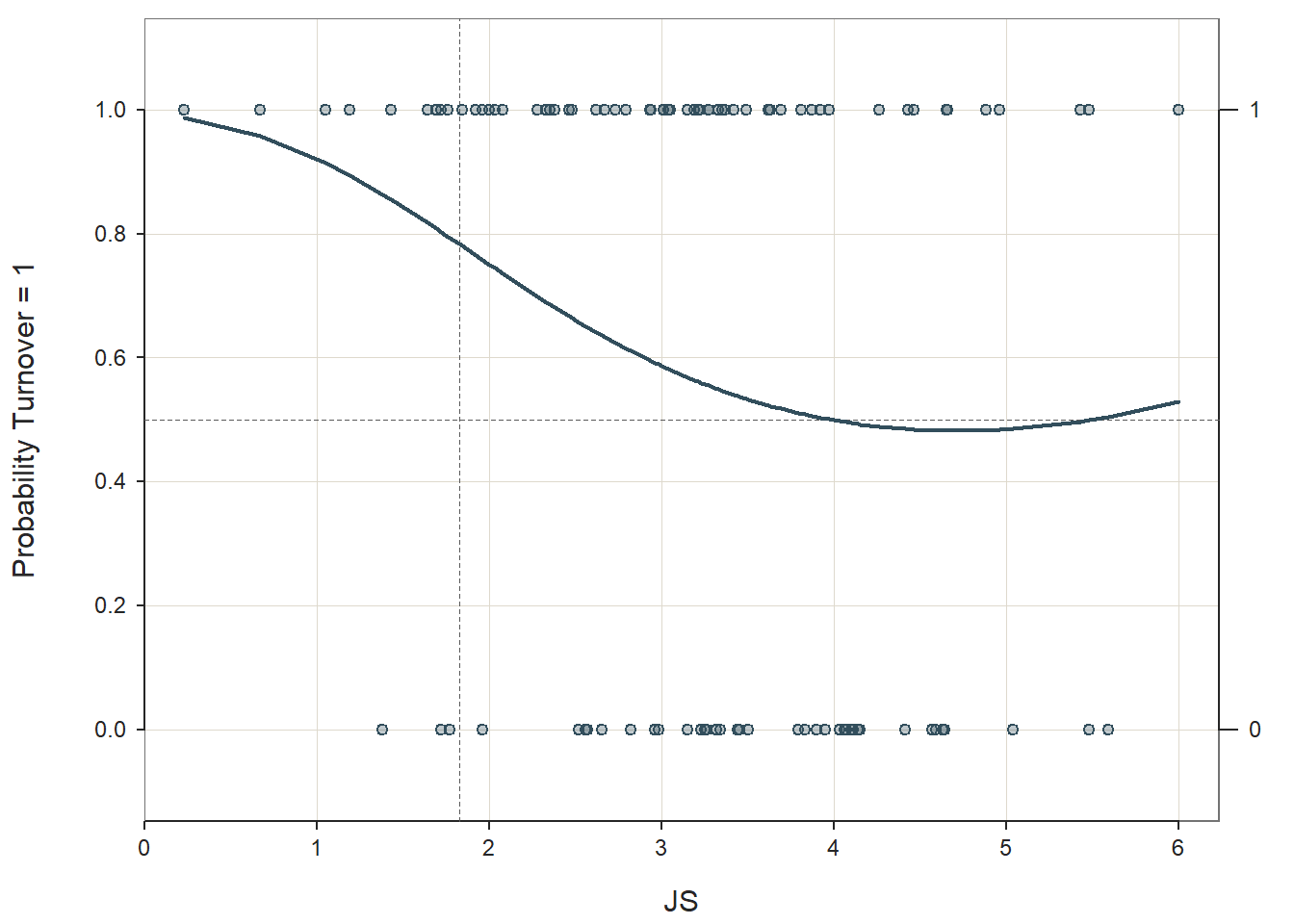

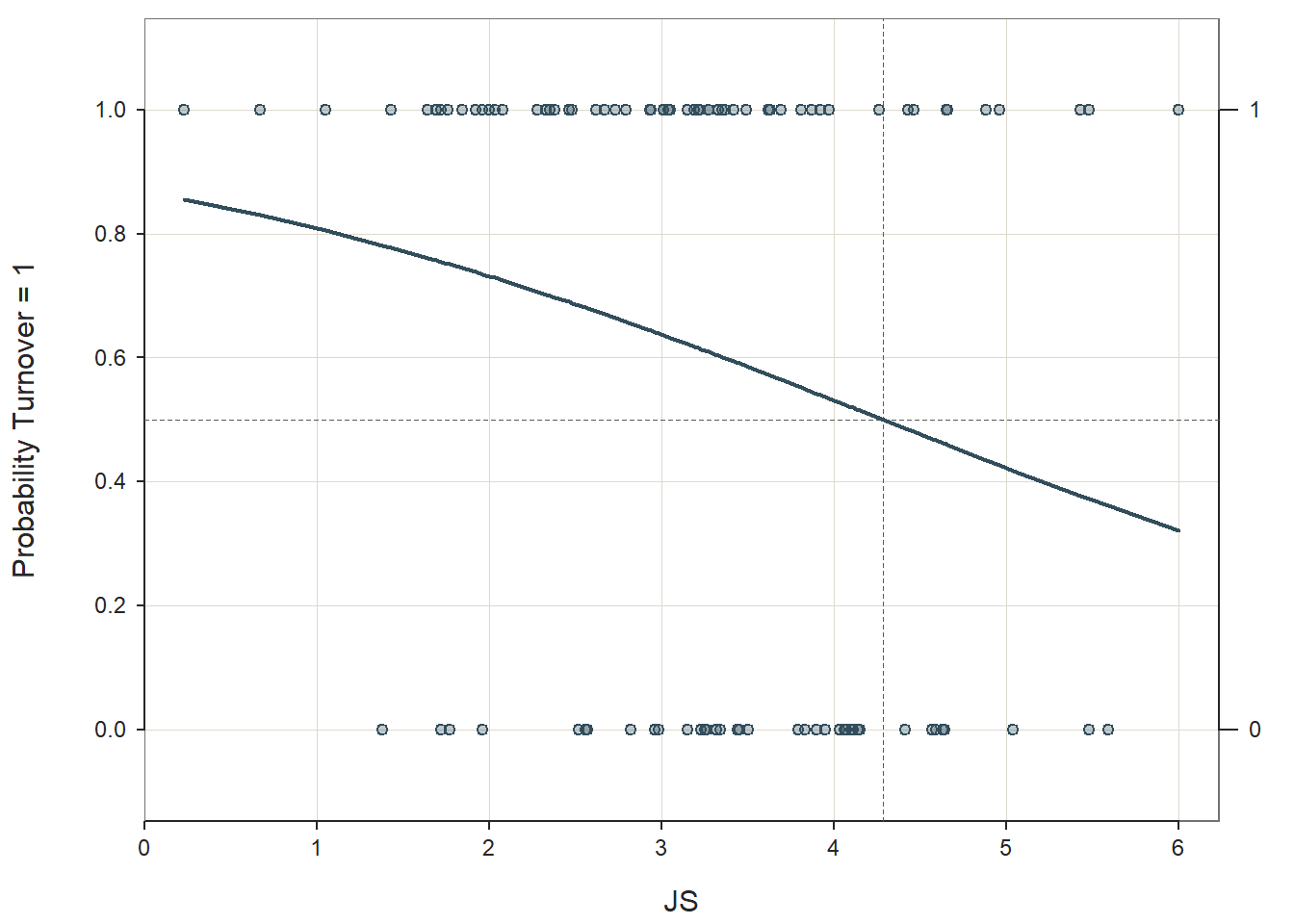

Basic Analysis: The Basic Analysis section of the original output first displays a table called the Model Coefficients, which includes the regression coefficients (slopes, weights) and their standard errors, z-values, p-values, and lower and upper limits of their 95% confidence intervals. Typically, the intercept value and its significance test are not of interest, unless we wish to use the value to specify the regression model equation. The estimate of the regression coefficient for the predictor variable (JS) in relation to the outcome variable (Turnover) is often of substantive interest. Here, we see that the unstandardized regression coefficient for JS is -.438, and its associated p-value is less than .05 (b = -.438, p = .025). Given that the p-value is less than our conventional two-tailed alpha level of .05, we reject the null hypothesis that the regression coefficient is equal to zero, which means that we conclude that the regression coefficient is statistically significantly different from zero. Further, the 95% confidence interval ranges from -.822 to -.054 (i.e., 95% CI[-.822, -.054]), which indicates that the true population parameter for association likely falls somewhere between those two values. The conceptual interpretation of logistic regression coefficients is not as straightforward as traditional linear regression coefficients, though. We can, however, interpret the significant regression coefficient as follows: For every one unit increase in the predictor variable (JS), the logistic function decreases by .438 units – or that for every one unit increase in the predictor variable (JS) the logit transformation of the outcome variable decreases by .438. Using the intercept and predictor variable coefficient estimates, we can write out the equation for the regression model as follows:

\(\ln(\frac{p}{1-p}) = 1.855 -.438 \times JS_{observed}\)

where \(p\) refers to, in this example, as the probability of quitting. If you recall from earlier in the tutorial, we can also interpret our findings with respect to \(\log(odds)\).

\(\log(odds) = \ln(\frac{p}{1-p}) = 1.855 -.438 \times JS_{observed}\)

To that end, to aid our interpretation of the significant finding, we can move our attention to the table called Odds ratios and confidence intervals. To convert our regression coefficient to an odds ratio, the Logit function has already exponentiated it. Behind the scenes, this is what happened:

\(e^{-.438} = .646\)

We could also do this manually by using the exp function from base R. Any difference between the Logit output and the output below is attributable to rounding.

# For the sake of demonstration:

# Exponentiate logistic regression coefficient to convert to odds ratio

# Note that the Logit function already does this for us

exp(-.438)## [1] 0.6453258In the Odds ratios and confidence intervals table, we see that indeed the odds ratio is approximately .646. Because the odds ratio is less than 1, it implies a negative association between the predictor and outcome variables, which we already knew from the negative regression coefficient on which it is based. Interpreting an odds ratio that is less than 1 takes some getting used to. To aid our interpretation, subtract the odds ratio value of .646 from 1 which yields .354 (i.e., 1 - .646 = .354). Now, using that difference value, we can say something like: The odds of quitting are reduced by 35.4% (\(100 \times .354\)) for every one unit increase in job satisfaction (JS). Alternatively, we could take the reciprocal of .646, which is 1.548 (1 / .646), and interpret the effect in terms of not quitting (i.e., staying): The odds of not quitting are 1.548 times as likely for every one unit increase in job satisfaction (JS). If you have never worked with odds before, keep practicing the interpretation and it will come to you at some point. Note that the odds ratio (OR) is a type of effect size, and thus we can compare odds ratios and describe them qualitatively using descriptive language. There are different rules of thumb, and for the sake of parsimony, I provide rules of thumb for when odds ratios are greater than 1 and less than 1.

| Odds Ratio > 1 | Odds Ratio < 1 | Description |

|---|---|---|

| 1.2 | .8 | Small |

| 2.5 | .4 | Medium |

| 4.3 | .2 | Large |

In the Model Fit table, note that we don’t have an estimate of R-squared (R2) like we would with a traditional linear regression model. There are ways to compute what are often referred to as pseudo-R-squared (R2) values, but for now let’s focus on what is produced in the Logit function output. As you can see, we get the null deviance and residual deviance values (and their degrees of freedom) as well as the Akaike information criterion (AIC) value. By themselves, these values are not very meaningful; however, they can be used to compare nested models, which is beyond the scope of this tutorial. For our purposes, we will assess the model’s fit to the data by looking at the Specified Confusion Matrices table at the end of the output. This table makes model fit assessments fairly intuitive. First, in the baseline section (which is akin to a null model without any predictors), the confusion matrix provides information about actual the counts and percentages of employees who stayed and quit the organization, which were 39 (39.8%) and 59 (60.2%), respectively. [Remember that for the Turnover variable, 0 = stayed and 1 = quit in our data.] In the predicted section, the table provides information about who would be predicted to stay and who would be predicted to quit based on our logistic regression model. Anyone who has a predicted probability of .50 or higher is predicted to quit, and anyone who has a predicted probability that is less than .50 is predicted to stay. Further, a cross-tabulation is shown in which the rows represent actual/observed turnover behavior (0 = stay, 1 = quit), and the columns represent predicted turnover behavior (0 = stay, 1 = quit). Thus, this cross-tabulation helps us understand how accurate our model predictions were relative to the observed data, thereby providing us with an indication of how well the model fit the data. Of those who actually stayed (0), we were only able to predict their turnover behavior with 20.5% accuracy using our model (compared to our baseline of 39.8%). Of those who actually quit (1), our model fared much better, as we were able to predict that outcome with 83.1% accuracy (compared to our baseline of 60.2%). Overall, we tend to be most interested in the overall percentage of correct classifications, which is 58.2% – so not a monumental amount of prediction accuracy when using just JS (job satisfaction) as a predictor in the model. If we were to add additional predictor variables to the model, our hope would be that our percentage of correct predictions would increase to a notable extent.

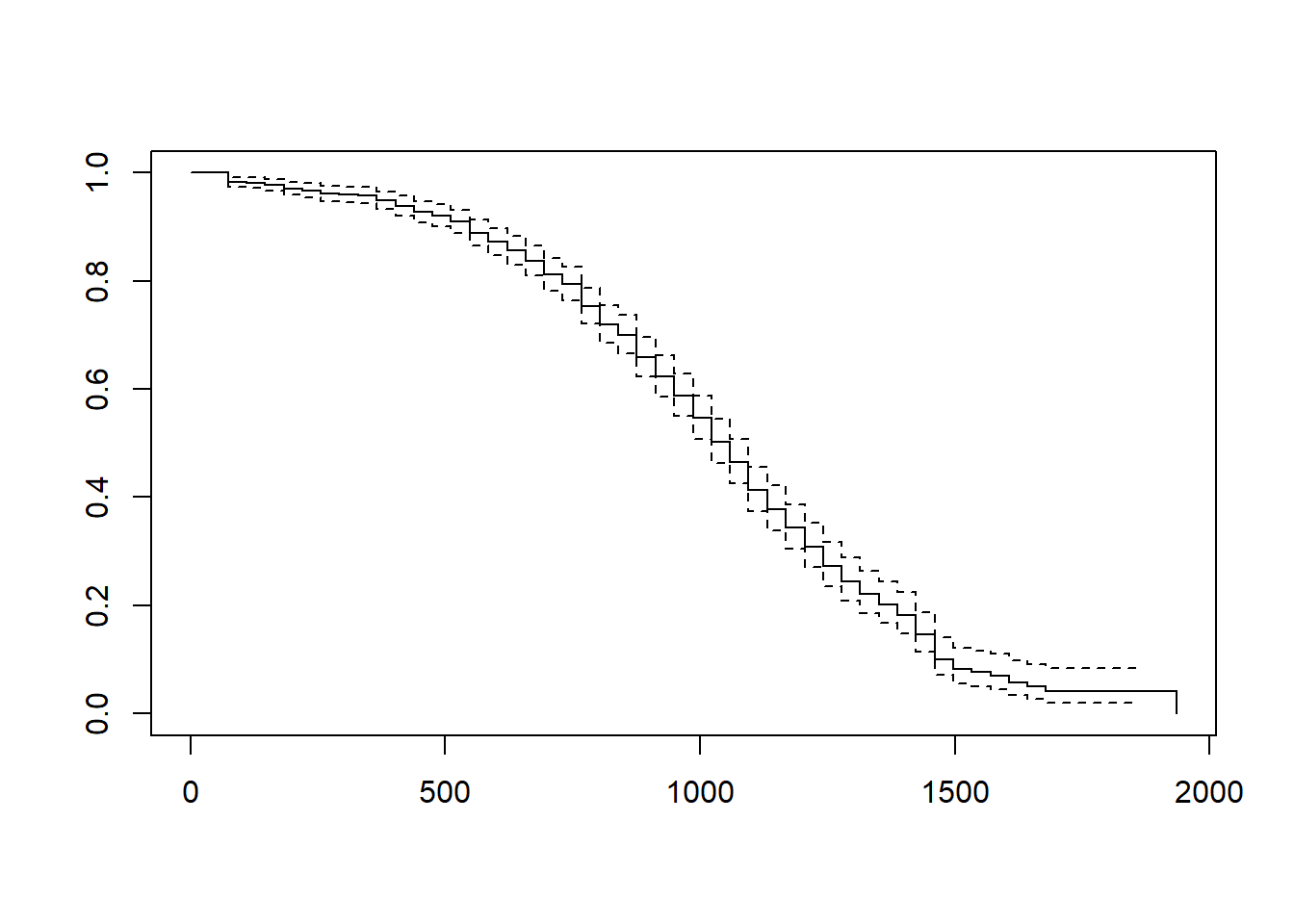

Forecasts: In the output section called Forecasts, information about the actual outcome and the predicted and fitted values are presented (along with the standard error). This section moves us toward what would be considered true predictive analytics and machine learning; however, because we only have a single dataset to train our model and test it, we’re not performing true predictive analytics. As such, we won’t pay much attention to interpreting this section of the output in this tutorial. With that said, if you’re curious, feel free to read on. When performing true predictive analytics, we typically divide our data into at least two datasets. Often, we have at least one training dataset that we use to “train” or estimate a given model; often, we have more than one training dataset, though. After training the model on one or more training datasets, we then evaluate the model on a test dataset that should contain data from an entirely different set of cases than the training dataset(s). As a more rigorous approach, we can instead use a validation dataset to evaluate the training dataset(s), and after we’ve picked the model that performs best on the validation set, we then pass the model along to the test dataset to see if we can confirm the results.

Nagelkerke pseudo-R2: To compute Nagelkerke’s pseudo-R2, we will need to install and access the DescTools package (Andri et mult. al. 2021) so that we can use its PseudoR2 function.

To use the function, we’ll need to re-estimate our simple logistic regression model using the glm function from base R. To request a logistic regression model as a specific type of generalized linear model, we’ll add the family=binomial argument. Using the <- assignment operator, we will assign the resulting estimated model to an object that we’ll arbitrarily call model1.

# Estimate simple logistic regression model and assign to object

model1 <- glm(Turnover ~ JS, data=td, family=binomial)In the PseudoR2 function, we will specify the name of the model object (model1) as the first argument. As the second argument, type "Nagel" to request pseudo-R2 calculated using Nagelkerke’s formula.

## Nagelkerke

## 0.07258382The estimated Nagelkerke pseudo-R2 is .073. Remember, a pseudo-R2 is not the exact same thing as a true R2, so we should interpret it with caution. With caution, we can conclude that JS explains 7.3% of the variance in Turnover.

Because the DescTools package also has a function called Logit, let’s detach the package before moving forward so that we don’t inadvertently attempt to use the Logit function from DescTools as opposed to the one from lessR.

Technical Write-Up of Results: A turnover study was conducted based on a sample of 99 employees from the past year, some of whom quit the company and some of whom stayed. Turnover behavior (quit vs. stay) (Turnover) is our outcome of interest, and because it is dichotomous, we used logistic regression. We, specifically, were interested in the extent to which employees’ self-reported job satisfaction is associated with their decisions to quit or stay, and thus job satisfaction (JS) was used as continuous predictor variable in our simple logistic regression. In total, due to missing data, 98 employees were included in our analysis. Results indicated that, indeed, job satisfaction was associated with turnover behavior to a statistically significant extent, and the association was negative (b = -.438, p = .025, 95% CI[-.822, -.054]). That is, the odds of quitting were reduced by 35.4% for every one unit increase in job satisfaction (OR = .646), which was a small-medium effect. Overall, using our estimate simple logistic regression model, we were able to predict actual turnover behavior in our sample with 58.2% accuracy, which suggests there is quite a bit of room for improvement. Finally, the estimated Nagelkerke pseudo-R2 was .073. We can cautiously conclude that job satisfaction explains 7.3% of the variance in voluntary turnover.

Dealing with Bivariate Outliers: If you recall above, we found that the cases associated with row numbers 66 and 67 in this sample may be potential bivariate outliers. I tend to be quite wary of eliminating cases that are members of the population of interest and who seem to have plausible data (i.e., cleaned). As such, I am typically reluctant to jettison a case, unless the case appears to have a dramatic influence on the estimated regression line (i.e., has a Cook’s distance value greater than 1.0). If you were to decide to remove cases 66 and 67, here’s what you would do. First, look at the data frame (using the View function) and determine which cases row numbers 66 and 67 are associated with; because we have a unique identifier variable (ID) in our data frame, we can see that they are associated with ID equal to EMP861 and EMP862, respectively. Next, with respect to estimating the logistic regression model, the model should be specified just as it was earlier in the tutorial, but now let’s add an additional argument: rows=(!ID %in% c("EMP861","EMP862")); the rows argument subsets the data frame within the Logit function by whatever logical/conditional statement you provide. In this instance, we indicate that we want to retain every case in which ID is not (!) within the vector containing EMP861 and EMP862. Please consider revisiting the chapter on filtering (subsetting) data if you would like to see the full list of logical operators or to review how to filter out cases from a data frame before specifying the model.

# Simple logistic regression model with outlier/influential cases removed

Logit(Turnover ~ JS, data=td, rows=(!ID %in% c("EMP861","EMP862")))##

## Response Variable: Turnover

## Predictor Variable 1: JS

##

## Number of cases (rows) of data: 97

## Number of cases retained for analysis: 96

##

##

## BASIC ANALYSIS

##

## -- Estimated Model of Turnover for the Logit of Reference Group Membership

##

## Estimate Std Err z-value p-value Lower 95% Upper 95%

## (Intercept) 1.8799 0.7067 2.660 0.008 0.4948 3.2649

## JS -0.4388 0.1997 -2.198 0.028 -0.8301 -0.0475

##

##

## -- Odds Ratios and Confidence Intervals

##

## Odds Ratio Lower 95% Upper 95%

## (Intercept) 6.5528 1.6402 26.1785

## JS 0.6448 0.4360 0.9536

##

##

## -- Model Fit

##

## Null deviance: 128.887 on 95 degrees of freedom

## Residual deviance: 123.664 on 94 degrees of freedom

##

## AIC: 127.6641

##

## Number of iterations to convergence: 4

##

##

## ANALYSIS OF RESIDUALS AND INFLUENCE

## Data, Fitted, Residual, Studentized Residual, Dffits, Cook's Distance

## [sorted by Cook's Distance]

## [res_rows = 20 out of 96 cases (rows) of data]

## --------------------------------------------------------------------

## JS Turnover fitted residual rstudent dffits cooks

## 69 6.00 1 0.3202 0.6798 1.5612 0.3758 0.08581

## 7 1.38 0 0.7815 -0.7815 -1.7807 -0.2965 0.06696

## 73 5.48 1 0.3717 0.6283 1.4394 0.2963 0.04900

## 12 1.72 0 0.7549 -0.7549 -1.7039 -0.2564 0.04677

## 58 5.43 1 0.3769 0.6231 1.4281 0.2889 0.04624

## 31 1.77 0 0.7509 -0.7509 -1.6928 -0.2507 0.04425

## 13 1.96 0 0.7349 -0.7349 -1.6508 -0.2291 0.03562

## 1 4.96 1 0.4264 0.5736 1.3247 0.2239 0.02592

## 33 4.88 1 0.4350 0.5650 1.3076 0.2136 0.02334

## 61 2.52 0 0.6844 -0.6844 -1.5305 -0.1720 0.01814

## 97 5.59 0 0.3605 -0.3605 -0.9631 -0.2060 0.01765

## 84 4.66 1 0.4589 0.5411 1.2617 0.1870 0.01736

## 74 2.56 0 0.6806 -0.6806 -1.5221 -0.1685 0.01729

## 70 5.48 0 0.3717 -0.3717 -0.9809 -0.2021 0.01715

## 63 4.65 1 0.4600 0.5400 1.2596 0.1858 0.01713

## 75 2.57 0 0.6797 -0.6797 -1.5200 -0.1676 0.01708

## 80 5.04 0 0.4178 -0.4178 -1.0538 -0.1840 0.01480

## 77 4.46 1 0.4807 0.5193 1.2209 0.1649 0.01316

## 96 2.82 0 0.6553 -0.6553 -1.4682 -0.1483 0.01283

## 39 4.43 1 0.4840 0.5160 1.2149 0.1618 0.01262

##

##

## PREDICTION

##

## Probability threshold for classification : 0.5

##

##

## Data, Fitted Values, Standard Errors

## [sorted by fitted value]

## [pred_all=TRUE to see all intervals displayed]

## --------------------------------------------------------------------

## JS Turnover label fitted std.err

## 69 6.00 1 0 0.3202 0.1234

## 97 5.59 0 0 0.3605 0.1134

## 70 5.48 0 0 0.3717 0.1103

## 73 5.48 1 0 0.3717 0.1103

##

## ... for the rows of data where fitted is close to 0.5 ...

##

## JS Turnover label fitted std.err

## 39 4.43 1 0 0.4840 0.07517

## 83 4.41 0 0 0.4862 0.07449

## 64 4.26 1 1 0.5027 0.06955

## 27 4.15 0 1 0.5147 0.06614

## 14 4.14 0 1 0.5158 0.06584

##

## ... for the last 4 rows of sorted data ...

##

## JS Turnover label fitted std.err

## 7 1.38 0 1 0.7815 0.07718

## 48 1.05 1 1 0.8052 0.08013

## 88 0.67 1 1 0.8300 0.08191

## 24 0.23 1 1 0.8556 0.08194

## --------------------------------------------------------------------

##

##

## ----------------------------

## Specified confusion matrices

## ----------------------------

##

## Probability threshold for predicting : 0.5

## Corresponding cutoff threshold for JS: 4.284

##

## Baseline Predicted

## ---------------------------------------------------

## Total %Tot 0 1 %Correct

## ---------------------------------------------------

## 1 58 60.4 9 49 84.5

## Turnover 0 38 39.6 8 30 21.1

## ---------------------------------------------------

## Total 96 59.4

##

## Accuracy: 59.38

## Sensitivity: 84.48

## Precision: 62.03

48.2.4.3 Optional: Compute Predicted Probabilities Based on Sample Data

The Logit function makes it easy to compute the probabilities of the even occurring based on the sample observations. In fact, all we have to do is add the pred_all=TRUE argument to the function.

# Simple logistic regression model with predicted probabilities

Logit(Turnover ~ JS, data=td, pred_all=TRUE)##

## Response Variable: Turnover

## Predictor Variable 1: JS

##

## Number of cases (rows) of data: 99

## Number of cases retained for analysis: 98

##

##

## BASIC ANALYSIS

##

## -- Estimated Model of Turnover for the Logit of Reference Group Membership

##

## Estimate Std Err z-value p-value Lower 95% Upper 95%

## (Intercept) 1.8554 0.6883 2.695 0.007 0.5063 3.2044

## JS -0.4378 0.1958 -2.236 0.025 -0.8216 -0.0540

##

##

## -- Odds Ratios and Confidence Intervals

##

## Odds Ratio Lower 95% Upper 95%

## (Intercept) 6.3939 1.6591 24.6415

## JS 0.6455 0.4397 0.9475

##

##

## -- Model Fit

##

## Null deviance: 131.746 on 97 degrees of freedom

## Residual deviance: 126.341 on 96 degrees of freedom

##

## AIC: 130.3413

##

## Number of iterations to convergence: 4

##

##

## ANALYSIS OF RESIDUALS AND INFLUENCE

## Data, Fitted, Residual, Studentized Residual, Dffits, Cook's Distance

## [sorted by Cook's Distance]

## [res_rows = 20 out of 98 cases (rows) of data]

## --------------------------------------------------------------------

## JS Turnover fitted residual rstudent dffits cooks

## 69 6.00 1 0.3162 0.6838 1.5688 0.3725 0.08496

## 7 1.38 0 0.7775 -0.7775 -1.7682 -0.2877 0.06241

## 73 5.48 1 0.3673 0.6327 1.4476 0.2949 0.04889

## 58 5.43 1 0.3724 0.6276 1.4363 0.2877 0.04618

## 12 1.72 0 0.7507 -0.7507 -1.6920 -0.2486 0.04353

## 31 1.77 0 0.7466 -0.7466 -1.6810 -0.2429 0.04117

## 13 1.96 0 0.7305 -0.7305 -1.6393 -0.2219 0.03314

## 1 4.96 1 0.4217 0.5783 1.3332 0.2239 0.02609

## 33 4.88 1 0.4302 0.5698 1.3162 0.2138 0.02353

## 84 4.66 1 0.4540 0.5460 1.2703 0.1875 0.01757

## 63 4.65 1 0.4551 0.5449 1.2682 0.1863 0.01733

## 61 2.52 0 0.6797 -0.6797 -1.5199 -0.1668 0.01693

## 97 5.59 0 0.3562 -0.3562 -0.9554 -0.2021 0.01693

## 70 5.48 0 0.3673 -0.3673 -0.9731 -0.1985 0.01648

## 74 2.56 0 0.6758 -0.6758 -1.5115 -0.1635 0.01615

## 75 2.57 0 0.6749 -0.6749 -1.5095 -0.1626 0.01596

## 67 2.65 0 0.6671 -0.6671 -1.4929 -0.1563 0.01454

## 80 5.04 0 0.4131 -0.4131 -1.0457 -0.1813 0.01431

## 77 4.46 1 0.4757 0.5243 1.2296 0.1656 0.01336

## 39 4.43 1 0.4790 0.5210 1.2235 0.1625 0.01282

##

##

## PREDICTION

##

## Probability threshold for classification : 0.5

##

##

## Data, Fitted Values, Standard Errors

## [sorted by fitted value]

## --------------------------------------------------------------------

## JS Turnover label fitted std.err

## 69 6.00 1 0 0.3162 0.12145

## 97 5.59 0 0 0.3562 0.11198

## 70 5.48 0 0 0.3673 0.10900

## 73 5.48 1 0 0.3673 0.10900

## 58 5.43 1 0 0.3724 0.10758

## 80 5.04 0 0 0.4131 0.09555

## 1 4.96 1 0 0.4217 0.09291

## 33 4.88 1 0 0.4302 0.09023

## 84 4.66 1 0 0.4540 0.08274

## 63 4.65 1 0 0.4551 0.08240

## 21 4.64 0 0 0.4561 0.08206

## 53 4.63 0 0 0.4572 0.08172

## 45 4.59 0 0 0.4616 0.08036

## 47 4.57 0 0 0.4638 0.07968

## 77 4.46 1 0 0.4757 0.07597

## 39 4.43 1 0 0.4790 0.07497

## 83 4.41 0 0 0.4812 0.07431

## 64 4.26 1 0 0.4976 0.06946

## 27 4.15 0 1 0.5097 0.06609

## 14 4.14 0 1 0.5107 0.06579

## 29 4.11 0 1 0.5140 0.06491

## 23 4.10 0 1 0.5151 0.06462

## 89 4.07 0 1 0.5184 0.06376

## 99 4.06 0 1 0.5195 0.06348

## 94 4.03 0 1 0.5228 0.06265

## 55 3.97 1 1 0.5293 0.06105

## 16 3.95 0 1 0.5315 0.06054

## 8 3.92 1 1 0.5348 0.05978

## 42 3.90 0 1 0.5369 0.05929

## 57 3.87 1 1 0.5402 0.05858

## 40 3.83 0 1 0.5446 0.05767

## 6 3.81 1 1 0.5467 0.05724

## 50 3.79 0 1 0.5489 0.05681

## 98 3.69 1 1 0.5597 0.05489

## 86 3.63 1 1 0.5662 0.05390

## 11 3.62 1 1 0.5672 0.05375

## 76 3.50 0 1 0.5801 0.05222

## 54 3.49 1 1 0.5812 0.05211

## 93 3.45 0 1 0.5854 0.05174

## 56 3.44 0 1 0.5865 0.05166

## 87 3.42 1 1 0.5886 0.05151

## 28 3.37 1 1 0.5939 0.05119

## 18 3.35 1 1 0.5960 0.05109

## 82 3.34 0 1 0.5971 0.05105

## 92 3.33 1 1 0.5981 0.05101

## 46 3.32 0 1 0.5992 0.05097

## 22 3.27 1 1 0.6044 0.05085

## 59 3.27 1 1 0.6044 0.05085

## 36 3.26 0 1 0.6055 0.05084

## 60 3.25 0 1 0.6065 0.05083

## 71 3.23 0 1 0.6086 0.05082

## 78 3.22 1 1 0.6096 0.05082

## 91 3.21 1 1 0.6107 0.05083

## 35 3.19 1 1 0.6127 0.05085

## 32 3.15 0 1 0.6169 0.05094

## 37 3.15 1 1 0.6169 0.05094

## 43 3.05 1 1 0.6272 0.05141

## 5 3.04 1 1 0.6282 0.05147

## 4 3.01 1 1 0.6313 0.05168

## 79 3.01 1 1 0.6313 0.05168

## 20 2.98 0 1 0.6343 0.05192

## 30 2.96 0 1 0.6364 0.05209

## 25 2.94 1 1 0.6384 0.05228

## 81 2.93 1 1 0.6394 0.05237

## 96 2.82 0 1 0.6504 0.05359

## 51 2.79 1 1 0.6534 0.05397

## 26 2.73 1 1 0.6593 0.05478

## 34 2.67 1 1 0.6652 0.05565

## 67 2.65 0 1 0.6671 0.05595

## 65 2.62 1 1 0.6700 0.05642

## 75 2.57 0 1 0.6749 0.05721

## 74 2.56 0 1 0.6758 0.05738

## 61 2.52 0 1 0.6797 0.05804

## 95 2.48 1 1 0.6835 0.05871

## 17 2.46 1 1 0.6853 0.05905

## 41 2.38 1 1 0.6928 0.06044

## 9 2.35 1 1 0.6956 0.06096

## 19 2.33 1 1 0.6975 0.06131

## 68 2.28 1 1 0.7021 0.06220

## 72 2.08 1 1 0.7201 0.06571

## 15 2.03 1 1 0.7245 0.06657

## 90 2.00 1 1 0.7271 0.06708

## 13 1.96 0 1 0.7305 0.06775

## 38 1.96 1 1 0.7305 0.06775

## 62 1.92 1 1 0.7340 0.06842

## 49 1.84 1 1 0.7407 0.06971

## 31 1.77 0 1 0.7466 0.07079

## 85 1.76 1 1 0.7474 0.07095

## 2 1.72 1 1 0.7507 0.07154

## 12 1.72 0 1 0.7507 0.07154

## 10 1.69 1 1 0.7532 0.07198

## 3 1.64 1 1 0.7572 0.07270

## 44 1.43 1 1 0.7737 0.07541

## 7 1.38 0 1 0.7775 0.07598

## 66 1.19 1 1 0.7916 0.07790

## 48 1.05 1 1 0.8015 0.07904

## 88 0.67 1 1 0.8266 0.08096

## 24 0.23 1 1 0.8525 0.08116

## --------------------------------------------------------------------

##

##

## ----------------------------

## Specified confusion matrices

## ----------------------------

##

## Probability threshold for predicting : 0.5

## Corresponding cutoff threshold for JS: 4.238

##

## Baseline Predicted

## ---------------------------------------------------

## Total %Tot 0 1 %Correct

## ---------------------------------------------------

## 1 59 60.2 10 49 83.1

## Turnover 0 39 39.8 8 31 20.5

## ---------------------------------------------------

## Total 98 58.2

##

## Accuracy: 58.16

## Sensitivity: 83.05

## Precision: 61.25

In the output under the Forecasts section, you should see a section called Data, Fitted Values, Standard Errors. This section now contains the predicted probabilities and associated classifications for all cases in the sample. The column labeled fitted contains the predicted probabilities, and the column labeled predict contains the probabilities when applied to a default probability threshold of .50, such that 1 indicates that the probability was .50 or greater (i.e., event predicted to occur) 0 indicates the probability was less than .50 (i.e., event not predicted to occur).

If desired, we can also append the predicted probabilities (i.e., fitted values) to our existing data frame by referencing the row names (i.e., row numbers) of each object when we merge.

- Let’s overwrite the existing

tddata frame object by using the<-assignment operator. - Specify the name of the

mergefunction from base R. For more information on this function, please refer to this chapter supplement from the chapter on joining data frames.

- As the first argument in the

mergefunction, specifyx=followed by the name of thetddata frame object. - As the second argument in the

mergefunction, specifyy=followed by thedata.framefunction from base R. As the sole argument within thedata.framefunction specify a name for the new variable that will contain the predicted probabilities based onJSscores (prob_JS), followed by the=operator and our simple logistic regression model from above with$fitted.valuesto the end. This will extract just the fitted values from the output and then convert the vector to a data frame object. - As the third argument in the

mergefunction, typeby="row.names", which will match rows from thexandydata frame objects based on their respective row names (i.e., row numbers). - As the fourth argument in the

mergefunction, typeall=TRUEto request a full merge, such that all rows with data will be retained from both data frame objects when merging.

# Simple logistic regression model with predicted probabilities

# added as new variable in existing data frame object

td <- merge(x=td,

y=data.frame(

prob_JS = Logit(Turnover ~ JS, data=td, pred_all=TRUE)$fitted.values

),

by="row.names",

all=TRUE)##

## Response Variable: Turnover

## Predictor Variable 1: JS

##

## Number of cases (rows) of data: 99

## Number of cases retained for analysis: 98

##

##

## BASIC ANALYSIS

##

## -- Estimated Model of Turnover for the Logit of Reference Group Membership

##

## Estimate Std Err z-value p-value Lower 95% Upper 95%

## (Intercept) 1.8554 0.6883 2.695 0.007 0.5063 3.2044

## JS -0.4378 0.1958 -2.236 0.025 -0.8216 -0.0540

##

##

## -- Odds Ratios and Confidence Intervals

##

## Odds Ratio Lower 95% Upper 95%

## (Intercept) 6.3939 1.6591 24.6415

## JS 0.6455 0.4397 0.9475

##

##

## -- Model Fit

##

## Null deviance: 131.746 on 97 degrees of freedom

## Residual deviance: 126.341 on 96 degrees of freedom

##

## AIC: 130.3413

##

## Number of iterations to convergence: 4

##

##

## ANALYSIS OF RESIDUALS AND INFLUENCE

## Data, Fitted, Residual, Studentized Residual, Dffits, Cook's Distance

## [sorted by Cook's Distance]

## [res_rows = 20 out of 98 cases (rows) of data]

## --------------------------------------------------------------------

## JS Turnover fitted residual rstudent dffits cooks

## 69 6.00 1 0.3162 0.6838 1.5688 0.3725 0.08496

## 7 1.38 0 0.7775 -0.7775 -1.7682 -0.2877 0.06241

## 73 5.48 1 0.3673 0.6327 1.4476 0.2949 0.04889

## 58 5.43 1 0.3724 0.6276 1.4363 0.2877 0.04618